Is there a magic recipe to implement AI efficiently in today's ever-changing business world? According to Rachel Alexander, Founder and CEO at Omina Technologies, companies must be aware of their crucial role in driving artificial intelligence by having a concrete AI strategy that takes into account fairness, explainability, and bias in their data.

AI IEN: Could you tell us a few words about you and Omina Technologies?

R. Alexander: I founded Omina Technologies in Belgium in 2016. The idea was to create an AI company for small and big companies, which incorporates the principles of Ethical Artificial Intelligence to make sure that AI is fair, explainable and that it investigates inherent bias in the data. Those were my three main concerns. At that time, the US elections were still ongoing, and people didn't understand the consequences of non-ethical artificial intelligence. At the same time, in 2016 and 2017, I traveled around Europe, giving talks about fair and trustworthy AI. Looking at the market, I noticed that Belgium was quite ready for AI, but something worried me. Mainly big companies were doing AI. That's how it all started.

AI IEN: How has the company evolved during these years?

R. Alexander: Today, there's so much going on around artificial intelligence, and we have grown fast during these four years. In 2016 we were a small start-up, and now we are 20 people just in Belgium and we have employees worldwide.

We started as a research and development company focused on creating ethical, explainable, and fair AI. We soon understood the importance of education in this field and we established a consultancy service called Omina AI Readiness Assessment, helping our clients to identify a competitive AI strategy. For today's businesses, it is fundamental to match their AI strategy with their company's strategy. For example, you will use AI in one way to improve operational efficiency, whereas if you seek to add value to your services and products, or even bring an entirely new offering to the market, different artificial intelligence approaches are required.

We noticed with our consultancy that many companies are getting frustrated now because they feel that AI has not been implemented strategically. This makes it harder to integrate AI into production. It is here that we step in. With our methodology and platform, we can efficiently implement AI and create acceptance within the company. Once you have aligned AI strategically, you want to ensure that it is fair, that you have mitigated bias in the data, and that it is explainable. AI makes important decisions and predictions. If you can't explain why, then your business won't accept it.

AI IEN: How beneficial is it to be based in Brussels, close to the EU institutions, for your job?

R. Alexander: It is certainly very important. We have been involved with the EU working groups from the beginning. Already at the end of 2016, I was committed to explaining to the Belgian government how important it is to have trustworthy, ethical, and explainable AI. So we are very well positioned here in Brussels and in Antwerp to effect change in that matter.

Thanks to this dissemination work, companies, governments, and legislators are understanding the problem. The questions are currently getting more practical – "What do we do now?" or "How do we take the next steps to make sure to have a fast return on investment while being ethical?" Answering those questions is our expertise. We want to be pragmatic and talk to the business in business language.

AI IEN: How relevant is the role of small companies in shaping AI?

R. Alexander: The idea that only big companies can do AI is not true. Small and medium-sized companies can leverage its potential as well. We believe in giving AI as an answer to all different businesses. We have built this very powerful platform that enables companies to do artificial intelligence themselves. They don't have to get an army of specialized scientists to get started with AI anymore, but they can work directly with Omina's AI Platform, knowing that it is an inherently fair system that integrates trustworthy artificial intelligence into production.

That's how companies can get into production fast with AI. If they miss any of these steps, whether it is the strategy or mitigating inherent bias in the data, or if they don't understand the use case and what they really want, it will be hard for them to drive artificial intelligence as they should. In the end, it all revolves around what companies are trying to achieve from a business perspective.

AI IEN: Do companies have a clearer idea of what they want now?

R. Alexander: The maturity level has improved quite a lot. In 2015 and 2016 companies really didn't understand AI, so I spent most of my time explaining what AI is and what our company can do. I believe there is a lot of understanding of the potential of AI now. At the same time, there is growing frustration within companies. They see proofs of concept springing up within their company without a return on investment.

It is difficult for businesses to understand why they are not getting results given that AI is supposed to be one of the most powerful technologies of the future. That is when they come to us. Thanks to our agile methodology, they can see results very quickly.

AI IEN: Could you tell me more about the platform that you have developed?

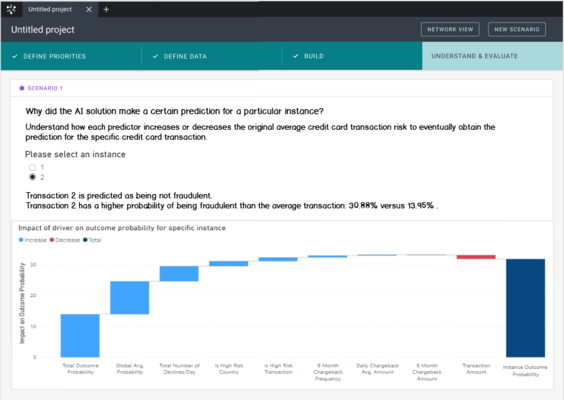

R. Alexander: This idea that the business should drive artificial intelligence is the cornerstone of our system. If you look at the different cases and you want to know, for instance, which customers are most likely to leave the company, you need to have clear goals. Do you want to know every single customer potentially at risk or just the most valuable ones? Or maybe you want to understand why your customers might be at risk – and that is the explainability part. There are different ways to go about that. Each business goal requires a different AI solution design, so a different approach. We built this platform to enable the business to put in its constraints and goals directly and get production-ready algorithms very quickly. It provides a sort of "recipe" for quick implementation.

Besides, business people know better than anyone else if there is any bias within the data. Typical cases occur when AI is used to look for the best CEO. Looking purely at historical data would discriminate against women, as there are not that many women CEOs. It is essential to be careful when talking about legislation. For instance, there are anti-discrimination laws around giving loans. The same applies to hiring and firing decisions and compliance with GDPR, to mention other examples. That is why considering the potential inherent bias in your data while including explainability is so crucial if you don't want to get into trouble or have liability issues. AI must be fair, explainable, and unbiased to deliver concrete business results.
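To make the point about historical data concrete, here is a minimal sketch of how a skew between groups could be measured before any model is trained. It is an illustration only, not a description of Omina's platform: the records, the field names `gender` and `promoted`, and both helper functions are hypothetical and invented for this example.

```python
from collections import defaultdict

def selection_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        positives[row[group_key]] += 1 if row[outcome_key] else 0
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical historical records, invented for illustration.
history = (
    [{"gender": "m", "promoted": True}] * 8
    + [{"gender": "m", "promoted": False}] * 12
    + [{"gender": "f", "promoted": True}] * 2
    + [{"gender": "f", "promoted": False}] * 18
)

rates = selection_rates(history, "gender", "promoted")
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A model trained only on such records would tend to reproduce the gap between the two groups. A ratio far below 1.0 is a signal to investigate, and what counts as acceptable depends on the use case and the applicable legislation.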

AI IEN: How does your platform guarantee ethical AI?

R. Alexander: From a technical perspective, our platform asks businesses whether they know about the inherent bias in their data. But it could be that they are not aware of it. In this case, we can scan the data, pick up any bias, and feed that back to the business, highlighting the potential risks and conflicts with existing legislation. By specifying various scenarios in the software, companies can proactively assess how different priorities, or different ways of trading off competing priorities, affect the generated AI solution. For example, take a scenario where solutions are prioritized on predictive performance, interpretability, and group fairness. If the solution is optimized for group fairness, potentially at the expense of predictive performance, and for predictive performance, potentially at the expense of interpretability, it calls for a different AI solution design than a scenario with the same priorities in which the user wants to optimize for predictive performance, potentially at the expense of group fairness. Afterwards, it is easier to justify the implemented AI solution to various stakeholders by referring to the alternative prioritization scenarios that have been compared.
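One simple way to picture how prioritization scenarios lead to different solution designs is a weighted score over candidate models. This is a sketch under stated assumptions, not the method the platform uses: the candidate names, the metric values, and the scenario weights are all hypothetical, and a weighted sum is only one of many ways to express a trade-off.

```python
# Hypothetical candidate solutions with metrics scaled to [0, 1].
candidates = {
    "gradient_boosting": {
        "performance": 0.92, "interpretability": 0.30, "group_fairness": 0.70},
    "logistic_regression": {
        "performance": 0.78, "interpretability": 0.90, "group_fairness": 0.75},
    "fairness_constrained_boosting": {
        "performance": 0.85, "interpretability": 0.30, "group_fairness": 0.95},
}

# Two scenarios with the same three priorities but different trade-offs.
scenarios = {
    "fairness_first": {
        "group_fairness": 0.50, "performance": 0.35, "interpretability": 0.15},
    "performance_first": {
        "performance": 0.75, "group_fairness": 0.15, "interpretability": 0.10},
}

def score(metrics, weights):
    """Weighted sum of a candidate's metrics under one scenario."""
    return sum(weights[name] * metrics[name] for name in weights)

def best_candidate(candidates, weights):
    """Name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: score(candidates[name], weights))

choices = {name: best_candidate(candidates, weights)
           for name, weights in scenarios.items()}
print(choices)
```

With these invented numbers, the two scenarios select different candidates, and keeping the full table of scores gives stakeholders a record of which alternatives were compared.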

Another critical point is that our platform supports a proactive non-discrimination approach. It is not sufficient to react to unfair situations; it is vital to detect and mitigate bias proactively, by taking specific steps in the AI pipeline and actively applying model bias mitigation. This methodology gives companies extra objectives, such as investing in predictive performance while simultaneously optimizing for, for instance, gender non-discrimination. A non-discrimination approach should not be an afterthought; it should be adopted from the beginning and implemented as an AI design constraint.
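As a rough illustration of treating non-discrimination as a design constraint rather than an afterthought, the sketch below picks a decision threshold that maximizes accuracy only among thresholds whose gap in positive-prediction rates between groups stays under a limit. Everything here is assumed for the example: the scored individuals, the group labels `a` and `b`, the candidate thresholds, and the choice of a parity gap as the fairness measure.

```python
def evaluate(people, threshold):
    """Return (accuracy, parity_gap) for one decision threshold."""
    correct = 0
    positives = {}
    counts = {}
    for score, group, label in people:
        predicted = 1 if score >= threshold else 0
        correct += int(predicted == label)
        positives[group] = positives.get(group, 0) + predicted
        counts[group] = counts.get(group, 0) + 1
    rates = [positives[g] / counts[g] for g in counts]
    return correct / len(people), max(rates) - min(rates)

def pick_threshold(people, thresholds, max_gap):
    """Most accurate threshold among those meeting the fairness constraint."""
    feasible = [t for t in thresholds if evaluate(people, t)[1] <= max_gap]
    if not feasible:
        raise ValueError("no threshold satisfies the fairness constraint")
    return max(feasible, key=lambda t: evaluate(people, t)[0])

# Hypothetical (model score, group, true label) triples.
people = [
    (0.9, "a", 1), (0.8, "a", 1), (0.6, "a", 0), (0.3, "a", 0),
    (0.7, "b", 1), (0.5, "b", 1), (0.4, "b", 0), (0.2, "b", 0),
]
thresholds = [0.35, 0.45, 0.55, 0.65, 0.75]

constrained = pick_threshold(people, thresholds, max_gap=0.1)
unconstrained = pick_threshold(people, thresholds, max_gap=1.0)
print(constrained, evaluate(people, constrained))
print(unconstrained, evaluate(people, unconstrained))
```

On this toy data the constrained choice gives up some accuracy to keep the two groups' positive rates equal, which is the kind of trade-off that is easier to discuss when the constraint is stated up front.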

Sara Ibrahim