Mention 'Big Data' and most people think immediately about retailers storing customer information, the rapid rise of social media and vast numbers of financial transactions taking place online. However, engineers and scientists in test and measurement environments are contending with even bigger volumes of big data. This is data of a different kind. It is the biggest, fastest and oldest big data and it demands its own classification.

National Instruments coined the term 'Big Analog Data' to describe the hugely valuable data that is drawn from a wide range of physical parameters including vibration, temperature, acceleration, pressure, sound, image, RF signals, magnetism, light and voltage.

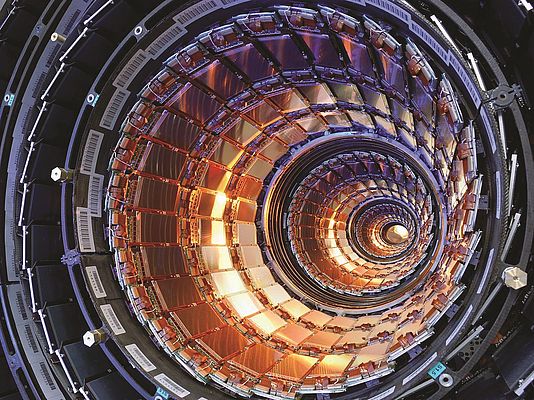

One of the best examples of Big Analog Data in Europe can be found at the CERN Large Hadron Collider. When an experiment is running, the instrumentation can generate 40 terabytes of data every second.

Such data is not confined to research; similar information is also gathered from equipment in operation. During a single journey across the Atlantic Ocean, a four-engine jumbo jet can create 640 terabytes of data.

In 2011, a total of 1.8 zettabytes of data was created worldwide. That figure would be astounding enough on its own, but according to a 2011 calculation by the technology research firm IDC, the volume is doubling every two years. At that rate, the growth of big data mirrors one of the most famous laws in electronics, Moore's Law, which states that the number of transistors on an integrated circuit doubles approximately every two years.
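To see what doubling every two years implies, the sketch below projects an idealised exponential curve from the 2011 figure. It assumes a constant doubling period and a 1.8 ZB baseline; the function name and parameters are illustrative, and real-world growth will not follow such a clean curve. The same formula describes Moore's Law if transistor counts are substituted for zettabytes.

```python
def projected_volume_zb(year: int, base_year: int = 2011, base_zb: float = 1.8,
                        doubling_years: float = 2.0) -> float:
    """Idealised exponential growth: the volume doubles every `doubling_years`."""
    return base_zb * 2 ** ((year - base_year) / doubling_years)

# Project the hypothetical curve forward from the 2011 baseline.
for year in (2011, 2013, 2015, 2021):
    print(year, round(projected_volume_zb(year), 1))
```

Ten years of doubling every two years is five doublings, a 32-fold increase, which is why even modest-looking growth rates quickly dominate storage planning.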

Moore's Law was introduced in 1965, and although it began as a prediction for the following decade, it remains highly relevant today. By contrast, when IEN Europe was founded 40 years ago, the term 'big data' would not be used for another 23 years; it first appeared only after digital storage had come to be recognised as more cost-effective than storing data on paper.

As big data grows exponentially, so too does Big Analog Data, the biggest of big data.

However, volume is only one attribute of big data. To truly get to grips with Big Analog Data, we need to understand the four Vs: volume, variety, velocity and value. A fifth V, visibility, is also emerging as a key characteristic. In globalised operations there is a growing need to share data, whether it is business, engineering or scientific data. For example, data acquired from instrumented agricultural equipment in a rural field in the US Midwest may be analysed by data scientists in Europe. Similarly, product test engineers at manufacturing facilities in South America and China may need to access each other's data to conduct comparative analyses. One solution is to link data acquisition (DAQ) systems to IT infrastructure that operates in the cloud.

Big Analog Data is generated by the environment, nature, people, and electrical and mechanical machines. Generated by both natural and man-made sources, it is not only the biggest of big data but also the fastest. This is because analog signals are generally continuous waveforms that must be digitised at rates as high as tens of gigahertz, often at large bit widths.
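A rough sense of why analog data accumulates so quickly comes from multiplying sample rate, bit width and channel count. The figures below (a hypothetical four-channel front end sampling at 20 GS/s with a 12-bit ADC) are assumptions chosen for illustration, and the calculation ignores compression and any padding of samples to 16-bit words.

```python
def raw_data_rate_bytes_per_s(sample_rate_hz: float, bits_per_sample: int,
                              channels: int = 1) -> float:
    """Raw (uncompressed) digitised throughput in bytes per second."""
    return sample_rate_hz * bits_per_sample * channels / 8

# Hypothetical front end: 4 channels digitised at 20 GS/s with a 12-bit ADC.
rate = raw_data_rate_bytes_per_s(20e9, 12, channels=4)
print(f"{rate / 1e9:.0f} GB/s, {rate * 3600 / 1e12:.0f} TB per hour")
```

Under these assumptions a single instrument produces 120 GB every second, or roughly 432 TB in an hour of continuous acquisition.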

According to IBM, a large proportion of today's big data comes from the environment, including images, light, sound and even radio signals. For example, the volume of analog data that the Square Kilometre Array (SKA) radio telescope collects from deep space is expected to be 10 times that of global internet traffic.

Drawing accurate and meaningful conclusions from such high-speed and high-volume analog data is a growing problem. This data adds new challenges to data analysis, search, data integration, reporting and system maintenance - and these challenges must be met in order to keep pace with the exponential growth of data. Solutions for capturing, analysing and sharing Big Analog Data must address both conventional big data issues and the unique difficulties of managing analog data. To cope with these challenges - and to harness the value in analog data sources - engineers are seeking end-to-end solutions.

Specifically, engineers are looking for three-tier solution architectures that form a single integrated solution, delivering insight all the way from real-time capture at the sensors to analytics in the back-end IT infrastructure. The data flow starts at the sensors in tier one and is captured by the system nodes of tier two. These nodes perform the initial real-time, in-motion and early-life data analysis. Information that is deemed important flows across 'The Edge' to the traditional IT equipment that makes up tier three. Within this infrastructure, servers, storage and networking equipment allow users to manage, organise and further analyse the early-life or at-rest data. Finally, data is archived for later use.
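The tier-two role can be sketched as a node that reduces each block of sensor samples to a summary and forwards only the important results across 'The Edge'. This is a minimal illustration under stated assumptions: the class names, the RMS threshold used as the importance test and the in-memory list standing in for tier-three storage are all hypothetical, not part of any particular product.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence

@dataclass
class Tier3Store:
    """Hypothetical stand-in for back-end IT storage (tier three)."""
    records: List[dict] = field(default_factory=list)

    def ingest(self, record: dict) -> None:
        self.records.append(record)

@dataclass
class Tier2Node:
    """Hypothetical DAQ system node (tier two) doing in-motion analysis."""
    node_id: str
    rms_threshold: float
    upstream: Tier3Store

    def process_block(self, samples: Sequence[float], block_index: int) -> bool:
        # Early-life analysis: reduce a raw block to summary statistics.
        rms = math.sqrt(sum(x * x for x in samples) / len(samples))
        # Only blocks deemed important (assumed: RMS above threshold) cross 'The Edge'.
        if rms >= self.rms_threshold:
            self.upstream.ingest({"node": self.node_id, "block": block_index,
                                  "rms": rms, "peak": max(abs(x) for x in samples)})
            return True
        return False

# Tier one: hypothetical blocks of vibration samples from a sensor.
store = Tier3Store()
node = Tier2Node("node-01", rms_threshold=1.0, upstream=store)
blocks = [[0.1, -0.2, 0.1, 0.0], [2.0, -1.5, 1.8, -2.2], [0.3, 0.2, -0.1, 0.1]]
for i, block in enumerate(blocks):
    node.process_block(block, i)
print(len(store.records), "of", len(blocks), "blocks forwarded to tier three")
```

Filtering at tier two keeps the volume crossing the network manageable, at the cost of discarding raw samples that a later analysis might have wanted; a real design would weigh that trade-off carefully.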

Through the stages of data flow, the growing field of big data analytics is generating insights that have never been seen before. For example, real-time analytics are needed to determine the immediate response of a precision motion control system. At the other end, at-rest data can be retrieved for analysis against newer in-motion data, for example, to gain insight into the seasonal behaviour of a power-generating turbine.
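Comparing newer in-motion data against at-rest data can be as simple as measuring its deviation from an archived seasonal baseline. In this hedged sketch the month-keyed archive, the turbine output values in kW and the function name are hypothetical; a real system would retrieve the baseline from tier-three storage.

```python
from statistics import mean
from typing import Dict, List

def seasonal_deviation(archive: Dict[int, List[float]], month: int,
                       new_readings: List[float]) -> float:
    """Percent difference between new (in-motion) readings and the archived
    (at-rest) readings recorded in the same calendar month."""
    baseline = mean(archive[month])
    return 100.0 * (mean(new_readings) - baseline) / baseline

# Hypothetical at-rest archive: turbine output (kW) keyed by month number.
archive = {1: [910.0, 905.0, 915.0], 7: [850.0, 870.0, 860.0]}
# Newer in-motion readings taken in July.
print(f"{seasonal_deviation(archive, 7, [817.0, 817.0]):+.1f}% vs. July baseline")
```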

Throughout tiers two and three, data visualisation products and technologies help realise the benefits of the acquired information.

Considering that Big Analog Data solutions typically involve many DAQ channels connected to many system nodes, the capabilities of reliability, availability, serviceability and manageability (RASM) are becoming more important.
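One reason RASM matters more as node counts grow is that availabilities multiply. The sketch uses the standard steady-state availability formula, MTBF / (MTBF + MTTR), and assumes identical nodes that fail independently and a system that needs every node up at once; the MTBF and MTTR values are hypothetical.

```python
def availability(mtbf_h: float, mttr_h: float) -> float:
    """Steady-state availability of one repairable node: MTBF / (MTBF + MTTR)."""
    return mtbf_h / (mtbf_h + mttr_h)

def all_nodes_up(node_availability: float, nodes: int) -> float:
    """Probability that every node is up at the same time, assuming
    identical nodes that fail independently (a series system)."""
    return node_availability ** nodes

# Hypothetical node: fails on average every 9,990 h and takes 10 h to repair.
a = availability(9990.0, 10.0)
for n in (1, 10, 100):
    print(f"{n:>3} nodes: {all_nodes_up(a, n):.3f}")
```

Even at 99.9% per node, a 100-node deployment has every node up only about 90% of the time under these assumptions.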

In general, RASM expresses the robustness of a system in terms of how well it performs its intended function. Therefore, the RASM characteristics of a system are crucial to the quality of the mission for which the system is deployed. This has a great impact on both technical and business outcomes. For example, RASM functions can help establish when preventive maintenance or replacement should take place. This, in turn, can convert a surprise or unplanned outage into a manageable, planned outage, maintaining smoother service delivery and increasing business continuity.
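As an illustration of how monitoring data can turn an unplanned outage into a planned one, the sketch fits a least-squares straight line to a condition metric and estimates the lead time before it reaches an alarm limit. The linear model, the vibration values and the 6.0 mm/s limit are assumptions for illustration only; the function name is hypothetical.

```python
from typing import Optional, Sequence

def days_until_limit(days: Sequence[float], metric: Sequence[float],
                     limit: float) -> Optional[float]:
    """Fit a least-squares line to a condition metric (observations assumed
    sorted by time) and estimate how many days after the last observation it
    will reach `limit`. Returns None if the trend is flat or improving."""
    if len(days) != len(metric) or len(set(days)) < 2:
        raise ValueError("need matching series with at least two distinct times")
    n = len(days)
    mx, my = sum(days) / n, sum(metric) / n
    sxx = sum((x - mx) ** 2 for x in days)
    sxy = sum((x - mx) * (y - my) for x, y in zip(days, metric))
    slope = sxy / sxx
    if slope <= 0:
        return None  # no deterioration trend: nothing to schedule yet
    intercept = my - slope * mx
    crossing_day = (limit - intercept) / slope
    return max(0.0, crossing_day - days[-1])

# Hypothetical vibration RMS (mm/s) logged every 10 days, alarm limit 6.0 mm/s.
lead_time = days_until_limit([0, 10, 20, 30], [2.0, 2.5, 3.0, 3.5], limit=6.0)
print(f"schedule maintenance within ~{lead_time:.0f} days")
```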

The oldest, fastest and biggest big data - Big Analog Data - harbours great scientific, engineering and business insight. To tap this vast resource, developers are turning to solutions powered by tools and platforms that integrate well with each other and with a wide range of partners. This three-tier Big Analog Data solution is growing in demand as it solves problems in key application areas such as scientific research and product test.