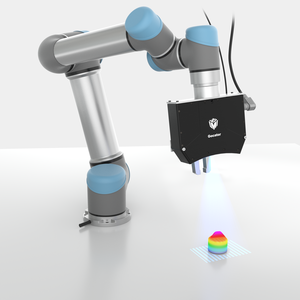

Having integrated its 3D snapshot sensor into UR cobots, the Canadian company LMI Technologies is currently working to simplify robotic integration and set the standard for successful factory automation.

IEN Europe: The use of robots in the manufacturing industry is massively growing everywhere. What new challenges is factory automation bringing to manufacturing companies? And what does it change for end users?

T. Arden: Integrating robots into a manufacturing line challenges process control engineers to rethink part flow and learn how robots and 3D sensors can work together to achieve faster, more efficient production. Manufacturing companies must automate their production and distribution systems to stay ahead of the curve. Otherwise, they risk being beaten by competitors who successfully embrace these technologies to achieve lower costs, higher production output, and a more dynamic infrastructure that can respond quickly to customer demand for lower-volume, specialized products.

IEN Europe: How did the project to integrate the Gocator 3D Snapshot Sensor into a robot originate? Do you plan to partner with other robot designers besides Universal Robots in the future?

T. Arden: This project got started because LMI foresaw the rapid adoption of collaborative robots in the manufacturing space. UR is a strong innovator in that space, and we share their vision to simplify robotic integration through software/integration partnership programs (UR+ program).

In response to the second part of this question: yes, definitely. LMI is working with other robot designers to simplify the integration steps involved with Gocator. As no standard robot communication protocols exist today, LMI has had to be proactive and invest time and effort into adapting our sensors to the different proprietary interfaces developed by each of the robot suppliers. That said, we would love to see a set of standards emerge soon that simplify integration with our sensors!

IEN Europe: At SPS 2018, LMI announced that it had achieved official certification for the integration of the Gocator® 3D snapshot sensors with Universal Robots. What’s your assessment one year after the certification?

T. Arden: The feedback has been great, and it has certainly opened up a lot of possibilities for using 3D smart sensors in a variety of robot applications. We would love to see other robot manufacturers create third-party programs like UR+!

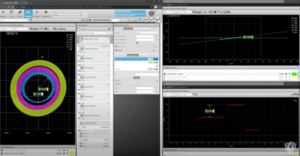

IEN Europe: How does 3D robot vision guidance work? In which cases does a robot need to have “good eyes” to perform its tasks?

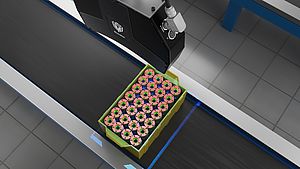

T. Arden: Probably the most common vision-guided robotics (VGR) application is pick and place, where a sensor is mounted over a work area in which the robot carries out pick-and-place movements (e.g., transferring parts from a conveyor to a box).
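As an editorial illustration of the pick-and-place case, the sketch below shows one common way a part position measured in a fixed overhead sensor's coordinate frame can be converted into the robot's base frame using a calibrated homogeneous transform. This is not LMI's or Universal Robots' implementation. The function names (`make_transform`, `sensor_to_robot`), the calibration values, and the simplification that the sensor frame differs from the robot frame only by a rotation about Z plus an offset are all assumptions made for the example.

```python
import math

def make_transform(yaw_deg, translation):
    """Build a 4x4 homogeneous transform (hypothetical helper).

    Assumes the sensor frame differs from the robot base frame only by a
    rotation about the Z axis (yaw_deg) followed by a translation in mm.
    A real hand-eye calibration would generally yield a full 3D rotation.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c,   -s,  0.0, tx],
        [s,    c,  0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def sensor_to_robot(point_sensor, transform):
    """Map an (x, y, z) point from the sensor frame to the robot base frame."""
    x, y, z = point_sensor
    p = (x, y, z, 1.0)  # homogeneous coordinates
    # Only the first three rows are needed to get the transformed x, y, z.
    return tuple(sum(row[i] * p[i] for i in range(4)) for row in transform[:3])

# Illustrative calibration values only (not from the interview):
# sensor frame rotated 90 degrees about Z, offset (500, 0, 800) mm from the robot base.
T_ROBOT_FROM_SENSOR = make_transform(90.0, (500.0, 0.0, 800.0))

# A part located by the sensor at (100, 0, -750) mm in the sensor frame.
pick_position = sensor_to_robot((100.0, 0.0, -750.0), T_ROBOT_FROM_SENSOR)
print([round(v, 3) for v in pick_position])
```

In practice the transform would come from a calibration routine rather than hand-entered numbers, and the resulting position would be sent to the robot through whatever interface the robot supplier provides.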

Another common VGR application is part inspection, where the manipulator on a robot moves a sensor to various features on a workpiece for inspection (e.g., gap and flush on a car body, or hole and stud dimension tolerancing).

Finally, the most sophisticated application of VGR is where the manipulator on a robot picks up a “jig” that contains a number of sensors, and is programmed to pick up a workpiece and guide it for insertion into a larger assembly using sensor feedback (e.g., door panel or windshield insertion).

IEN Europe: What’s the next step in the development of this technology? Do you see further integration – with the robot and the sensor perfectly matching in a single product – possible or desirable in the future?

T. Arden: The next step in the advancement of smart 3D robot vision technology would be to leverage machine/deep learning to achieve more complicated robot manipulation, thereby optimizing completion time and improving the ability to handle complex and/or occluded parts. Integrating the vision sensor inside the robot itself is also a definite possibility at some point down the road. Hopefully we get there first!

IEN Europe: Can you describe LMI Technologies in 3 words?

T. Arden: FactorySmart 3D Solutions!

Sara Ibrahim