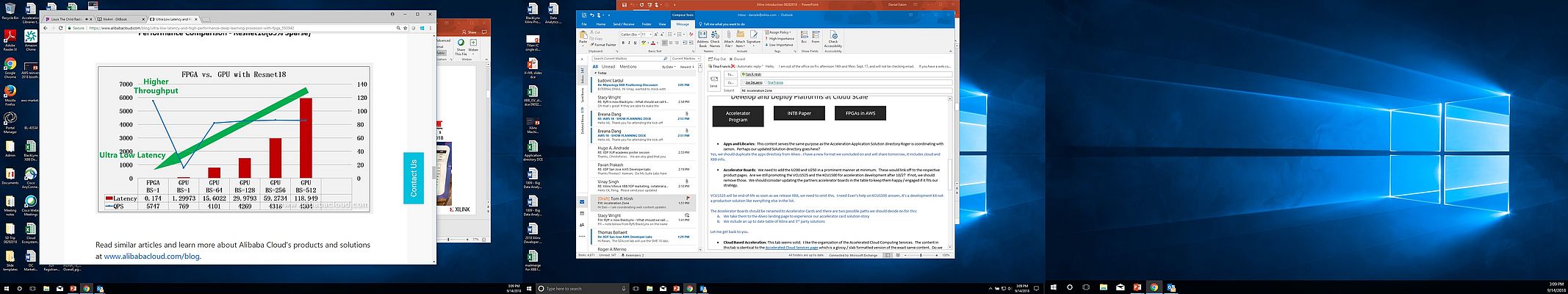

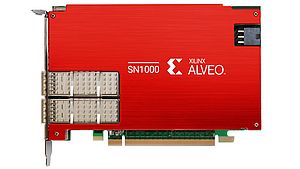

FPGAs (Field Programmable Gate Arrays) by Xilinx can be configured for real-time machine-learning inference. They offer performance acceleration and future flexibility for machine-learning practitioners, who can build high-performing inference engines for immediate deployment. The devices can adapt to rapid changes in technology and market demands for machine learning, and to changing workloads. Real-time machine-learning inference underpins new services that use natural voice interaction and image recognition to deliver seamless social-media or call-center experiences. Because such inference must identify patterns in vast quantities of data involving many variables, it calls for deterministic throughput and low latency, achieved together within practical size and power constraints, while moving data efficiently in and out.

Includes flexible memory hierarchy and adaptable high-bandwidth features

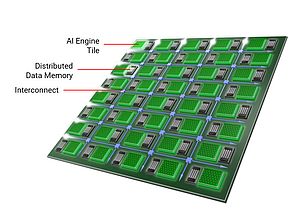

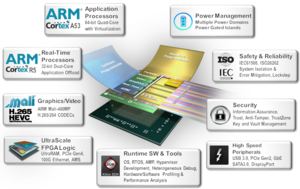

Adaptable and flexible, Xilinx FPGAs integrate optimized compute tiles, distributed local memory and non-blocking shared interconnects, in contrast to GPU-based engines with rigid interconnect structures. They execute real-time calculations with low latency and provide a foundation for building a Deep Learning Processor for image recognition and analysis, establishing a hardware architecture that accelerates AI workloads. Moreover, an ecosystem of resources has been established to accelerate AI workloads in the cloud or at the edge. Among the available tools, ML-Suite compiles neural networks generated by common machine-learning frameworks, including TensorFlow, Caffe, MXNet and others, to run on Xilinx FPGA hardware. It also includes a Python API for easy interaction with ML-Suite and a quantizer tool that converts a trained network to a fixed-point equivalent. These are part of a set of middleware, compilation and optimization tools, together with a runtime (xfDNN), intended to give the neural network the best possible performance.
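To make the idea of converting a network to a fixed-point equivalent more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is not ML-Suite's quantizer and does not use its API. The function names `quantize_fixed_point` and `dequantize`, the sample weights, and the choice of one shared scale per list are all assumptions made for illustration.

```python
def quantize_fixed_point(values, bits=8):
    """Map floats to signed integers that share one scale factor.

    Hypothetical helper for illustration; not part of ML-Suite.
    """
    if not values:
        return [], 1.0
    qmax = 2 ** (bits - 1) - 1              # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer representation."""
    return [q * scale for q in quantized]


# Made-up example weights, standing in for one layer of a trained network.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_fixed_point(weights)
restored = dequantize(q, scale)

# The largest-magnitude weight lands at the end of the integer range,
# and every restored value is within half a step of the original.
print(q, scale)
print(restored)
```

The integer values are what fixed-point hardware would operate on, and the scale is kept alongside them to interpret the results. Production quantizers typically calibrate scales per layer or per channel on sample data, which this sketch leaves out.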