Industry 4.0 applications generate a huge volume of complex data, known as big data. The growing number of sensors and, more generally, of available data sources is making the virtual view of machines, systems, and processes ever more detailed. This naturally increases the potential for generating added value along the entire value chain. At the same time, the question of how exactly this value can be extracted keeps arising, because the systems and architectures for data processing are becoming more and more complex. Only relevant, high quality, and useful data (smart data) allow the associated economic potential to be realized.

Challenges

Collecting all possible data and storing them in the cloud in the hope that they will later be evaluated, analyzed, and structured is a widespread, but not particularly effective, approach to extracting value from data. The potential for generating added value from the data remains underused, and finding a solution later becomes more complex. A better alternative is to consider early on what information is relevant to the application and where in the data flow that information can be extracted. Figuratively speaking, this means refining the data; that is, turning big data into smart data along the entire processing chain. Which AI algorithms have a high probability of success for the individual processing steps can be decided at the application level. This decision depends on boundary conditions such as the available data, application type, available sensor modalities, and background information about the underlying physical processes.

For the individual processing steps, correct handling and interpretation of the data are extremely important for real added value to be generated from the sensor signals. Depending on the application, it may be difficult to interpret the discrete sensor data correctly and extract the desired information. Temporal behavior often plays a role and has a direct effect on the desired information. In addition, the dependencies between multiple sensors must frequently be accounted for. For complex tasks, simple threshold values and manually determined logic or rules are no longer sufficient.

AI Algorithms

In contrast, data processing by means of AI algorithms enables the automated analysis of complex sensor data. Through this analysis, the desired information and, thus, the added value are derived automatically from the data along the data processing chain. Model building is always part of an AI algorithm, and there are basically two different approaches to it.

One approach is modeling by means of formulas and explicit relationships between the data and the desired information. These approaches require the availability of physical background information in the form of a mathematical description. These so-called model-based approaches combine the sensor data with this background information to yield a more precise result for the desired information. The most widely known example here is the Kalman filter.
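As a minimal illustration of the model-based approach, the sketch below implements a scalar Kalman filter in Python. It assumes the simplest possible background model, a quantity that drifts slowly as a random walk, and it assumes that the process and measurement noise variances are known. The function name `kalman_1d` and all parameter values are illustrative assumptions and are not taken from any ADI software.

```python
def kalman_1d(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a slowly drifting quantity.

    Assumed model (hypothetical, for illustration only):
        state:        x_k = x_{k-1} + w_k,  Var(w_k) = process_var
        measurement:  z_k = x_k + v_k,      Var(v_k) = meas_var
    """
    x, p = x0, p0          # current estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the random-walk model leaves the estimate unchanged,
        # but the uncertainty grows by the process noise.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    # Made-up noisy readings scattered around 5.0.
    readings = [5.3, 4.8, 5.1, 4.9, 5.2, 5.0, 4.7, 5.1]
    for z, est in zip(readings, kalman_1d(readings, 1e-4, 0.05, x0=0.0, p0=10.0)):
        print(f"measured {z:.2f} -> estimate {est:.3f}")
```

The ratio of `process_var` to `meas_var` encodes the background knowledge: a small ratio tells the filter to trust the model more than any single reading, which smooths the output.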

If data are available, but no background information that could be described in the form of mathematical equations, then so-called data-driven approaches must be chosen. These algorithms extract the desired information directly from the data. They encompass the full range of machine learning methods, including linear regression, neural networks, random forests, and hidden Markov models.
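The simplest of the data-driven methods named above, linear regression, can be sketched in a few lines. The example fits a straight line to pairs of raw sensor readings and reference values by ordinary least squares, without any physical model. The function names and the sample data are hypothetical and serve only to illustrate the idea.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new reading."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Made-up training data: raw sensor reading vs. reference value.
    raw = [0.0, 1.0, 2.0, 3.0, 4.0]
    ref = [1.1, 2.9, 5.2, 6.8, 9.1]
    model = fit_line(raw, ref)
    print("slope, intercept:", model)
    print("prediction for 2.5:", predict(model, 2.5))
```

The relationship is learned entirely from the examples, so the quality of the result depends on how well the training data cover the operating range.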

Selection of an AI method often depends on the existing knowledge about the application. If extensive specialized knowledge is available, AI plays a more supporting role and the algorithms used are quite rudimentary. If no expert knowledge exists, the AI algorithms used are much more complex. In many cases, it is the application that defines the hardware and, through this, the limitations for AI algorithms.

Embedded, Edge, or Cloud Implementation

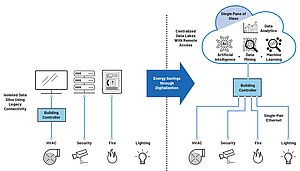

The overall data processing chain, with all the algorithms needed in each individual step, must be implemented in such a way that the highest possible added value can be generated. Implementation usually spans all levels, from the small sensor with limited computing resources through gateways and edge computers to large cloud computers. Clearly, the algorithms should not be implemented at only one level. Rather, it is typically more advantageous to implement the algorithms as close as possible to the sensor. By doing so, the data are compressed and refined at an early stage, and communication and storage costs are reduced. In addition, because the essential information is extracted from the data early, developing global algorithms at the higher levels is less complex. In most cases, algorithms from the streaming analytics area are also useful for avoiding unnecessary storage of data and, thus, high data transfer and storage costs. These algorithms use each data point only once; that is, the complete information is extracted directly, and the data do not need to be stored.
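A small example of the single-pass principle is Welford's online algorithm, which keeps a running mean and variance while looking at each sample exactly once. The sketch below is a generic Python illustration; the class name `RunningStats` is an assumption made for this example and does not refer to any ADI library.

```python
class RunningStats:
    """Single-pass mean and variance (Welford's algorithm).

    Each sample is consumed once and then discarded, so memory use
    is constant regardless of how long the stream runs.
    """

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0   # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance; defined as 0.0 until two samples are seen."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


if __name__ == "__main__":
    stats = RunningStats()
    for sample in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
        stats.update(sample)   # the sample is not stored anywhere
    print(stats.n, stats.mean, stats.variance)
```

Only three numbers are kept between samples, which is what makes this style of algorithm attractive on devices with very little memory.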

Embedded Platform with AI Algorithms

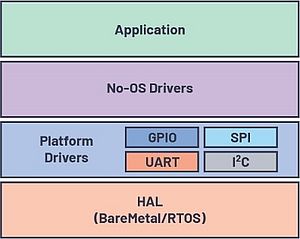

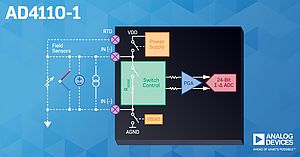

The ARM® Cortex®-M4F processor-based microcontroller ADuCM4050 from ADI is a power saving, integrated microcontroller system with integrated power management, as well as analog and digital peripheral devices for data acquisition, processing, control, and connectivity. All of this makes it a good candidate for local data processing and early refinement of data with state-of-the-art smart AI algorithms.

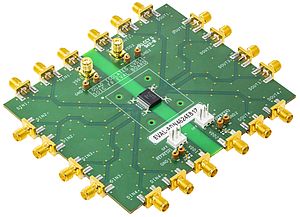

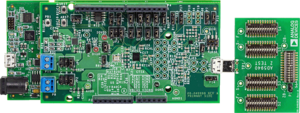

The EV-COG-AD4050LZ is an ultralow power development and evaluation platform for ADI’s complete sensor, microcontroller, and RF transceiver portfolio. The EV-GEAR-MEMS1Z shield was designed mainly, but not exclusively, for evaluating various MEMS technologies from ADI. For example, the ADXL35x series, including the ADXL355 used in this shield, offers superior vibration rectification, long-term repeatability, and low noise performance in a small form factor. The combination of EV-COG-AD4050LZ and EV-GEAR-MEMS1Z can serve as an entry point into structural health and machine condition monitoring based on vibration, noise, and temperature analysis. Other sensors can also be connected to the COG platform as required so that the AI methods used can deliver a better estimate of the current situation through so-called multisensor data fusion. In this way, various operating and fault conditions can be classified with finer granularity and higher probability. Through smart signal processing on the COG platform, big data becomes smart data locally, so that only the data relevant to the application case need to be sent to the edge or the cloud.
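Multisensor data fusion can take many forms. One of the simplest is inverse-variance weighting, sketched below in Python under the assumption that several sensors measure the same quantity with independent, zero-mean errors of known variance. This is a generic textbook technique chosen for illustration, not necessarily the method used on the COG platform, and the function name `fuse` and the sample values are hypothetical.

```python
def fuse(readings):
    """Fuse independent estimates of one quantity by inverse-variance weighting.

    readings: iterable of (value, variance) pairs, variance > 0.
    Returns (fused_value, fused_variance). The fused variance is never
    larger than the smallest input variance.
    """
    weights = [(value, 1.0 / variance) for value, variance in readings]
    total_weight = sum(w for _, w in weights)
    fused_value = sum(value * w for value, w in weights) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Made-up example: a precise and a noisy temperature reading.
    value, variance = fuse([(10.0, 1.0), (20.0, 4.0)])
    print(value, variance)
```

The more precise sensor dominates the result, and the fused variance is smaller than that of either input, which is the sense in which fusion delivers a better estimate.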

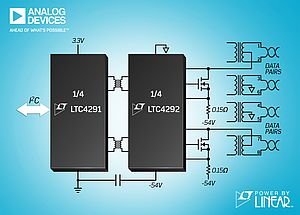

The COG platform also offers additional shields for wireless communications. For example, the EV-COG-SMARTMESH1Z combines high reliability, robustness, and extremely low power consumption with 6LoWPAN and IEEE 802.15.4e communication protocols that address a large number of industrial applications. The SmartMesh® IP network is composed of a highly scalable, self-forming multihop mesh of wireless nodes that collect and relay data. A network manager monitors and manages the network performance and security and exchanges data with a host application.

Especially for wireless, battery-operated condition monitoring systems, embedded AI can realize the full added value. Local conversion of sensor data to smart data by the AI algorithms embedded in the ADuCM4050 results in lower data flow and consequently less power consumption than is the case with direct transmission of sensor data to the edge or the cloud.
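To make the data reduction concrete, the following Python sketch condenses a window of vibration samples into three common features (RMS, peak, and crest factor) and compares the payload sizes. The window length of 1024 samples, the 16-bit sample format, and the three 32-bit float features are assumptions chosen for illustration; real savings depend on the sensor, the feature set, and the radio protocol.

```python
import math
import struct


def vibration_features(samples):
    """Condense a window of samples into RMS, peak, and crest factor."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    peak = max(abs(s) for s in samples)
    crest = peak / rms if rms > 0 else 0.0
    return rms, peak, crest


def payload_sizes(samples):
    """Return (raw_bytes, feature_bytes) for one window.

    Assumed encoding: raw samples as little-endian int16,
    features as three little-endian float32 values.
    """
    raw = struct.pack(f"<{len(samples)}h", *samples)
    features = struct.pack("<3f", *vibration_features(samples))
    return len(raw), len(features)


if __name__ == "__main__":
    # Made-up window: 1024 samples of a square-like vibration signal.
    window = [1000, -1000] * 512
    raw_bytes, feature_bytes = payload_sizes(window)
    print(f"raw: {raw_bytes} bytes, features: {feature_bytes} bytes")
```

Under these assumptions, each window shrinks from 2048 bytes to 12 bytes before transmission. The sketch says nothing about energy in absolute terms; it only shows why less data needs to cross the radio link.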

Applications

The COG development platform, including the AI algorithms developed for it, has a very wide range of applications in the monitoring of machines, systems, structures, and processes, extending from simple anomaly detection to complex fault diagnostics. The integrated accelerometers, microphone, and temperature sensor enable, for example, monitoring of vibrations and noise from diverse industrial machines and systems. Process states, bearing or stator damage, failure of the control electronics, and even unknown changes in system behavior (for example, due to damage to the electronics) can be detected by embedded AI. If a predictive model is available for certain types of damage, that damage can even be predicted locally. Maintenance measures can then be taken at an early stage, and unnecessary damage-based failures can be avoided. If no predictive model exists, the COG platform can also help subject matter experts progressively learn the behavior of a machine and, over time, derive a comprehensive model of the machine for predictive maintenance.
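At the simple end of this range, anomaly detection can be as basic as learning what a feature looks like on a healthy machine and flagging large deviations. The Python sketch below assumes a single scalar feature (for example, a vibration RMS value) and a threshold of three standard deviations. The class name, the threshold, and the baseline values are illustrative assumptions; the algorithms shipped for the COG platform may work quite differently.

```python
import statistics


class BaselineAnomalyDetector:
    """Flag feature values that deviate strongly from a healthy baseline."""

    def __init__(self, baseline, n_sigma=3.0):
        # Learn the normal behavior from recordings of a healthy machine.
        self.mean = statistics.mean(baseline)
        self.std = statistics.stdev(baseline)
        self.n_sigma = n_sigma

    def is_anomaly(self, value):
        """True if value lies more than n_sigma standard deviations away."""
        return abs(value - self.mean) > self.n_sigma * self.std


if __name__ == "__main__":
    # Made-up RMS values recorded while the machine was known to be healthy.
    healthy_rms = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]
    detector = BaselineAnomalyDetector(healthy_rms)
    for rms in (1.02, 3.0):
        print(rms, "anomaly" if detector.is_anomaly(rms) else "normal")
```

A detector of this kind needs no fault examples, only healthy data, which is why it can also flag previously unknown changes in system behavior. Diagnosing the cause of a fault requires more elaborate models.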

Conclusion

Ideally, through appropriate local data analysis, embedded AI algorithms should be able to decide which sensors are relevant for the respective application and which algorithm is best suited to it. This would give the platform smart scalability. At present, it is still the subject matter expert who must find the best algorithm for the respective application, even though the AI algorithms we use can already be scaled to various machine condition monitoring applications with minimal implementation effort.

Embedded AI should also assess the quality of the data and, if it is inadequate, find and apply the optimal settings for the sensors and the entire signal processing chain. If several different sensor modalities are fused, an AI algorithm can compensate for the disadvantages of individual sensors and methods, which increases data quality and system reliability. If the AI algorithm classifies a sensor as irrelevant or only marginally relevant to the respective application, its data flow can be throttled accordingly.

The open COG platform from ADI contains a freely available software development kit and numerous hardware and software example projects for accelerating prototype creation, facilitating development, and realizing original ideas. Through multisensor data fusion (EV-GEAR-MEMS1Z) and embedded AI (EV-COG-AD4050LZ), a robust and reliable wireless mesh network (EV-COG-SMARTMESH1Z) of smart sensors can be created.

About the Authors

Dzianis Lukashevich is director of platforms and solutions at Analog Devices. His focus is on megatrends, emerging technologies, complete solutions, and new business models that shape the future of industries and transform ADI business in the broad market. Dzianis Lukashevich joined ADI Sales and Marketing in Munich, Germany in 2012. He received his Ph.D. in electrical engineering from Munich University of Technology in 2005 and his M.B.A. from Warwick Business School in 2016. He can be reached at dzianis.lukashevich@analog.com.

Felix Sawo received his Master of Science in mechatronics from the Technical University of Ilmenau in 2005 and his Ph.D. in computer science from the Karlsruhe Institute of Technology in 2009. Following his graduation, he worked as a scientist at Fraunhofer Institute of Optronics, System Technologies, and Image Exploitation (IOSB) and developed algorithms and systems for machine diagnosis. Since 2011 he has been working as CEO at Knowtion, which specializes in algorithm development for sensor fusion and automatic data analysis. He can be reached at felix.sawo@knowtion.de.