The use of artificial intelligence (AI), including machine learning (ML) and deep learning (DL) techniques, is poised to become a transformational force in healthcare. Patients, healthcare service providers, hospitals, medical equipment makers, pharmaceutical companies, professionals and other stakeholders in the ecosystem all stand to benefit from ML-driven tools. Applications range from anatomical geometric measurements and cancer detection to radiology, surgery, drug discovery and genomics. In these scenarios, ML can increase operational efficiency, improve patient outcomes and significantly reduce costs.

Regulatory support is also growing. The US Food and Drug Administration (FDA) is approving a steadily increasing number of ML methods for diagnostic assistance and other applications. The FDA has also proposed a new regulatory framework for ML-based products. The framework addresses ML-based software that qualifies as “Software as a Medical Device” (SaMD), and it anticipates significant benefits to the quality and efficiency of care. To support this initiative, the FDA introduced a “predetermined change control plan” for premarket submissions. The plan would describe the types of anticipated modifications and the methodology that will be used to implement those changes in a controlled manner.

The FDA expects medical device manufacturers to commit to transparency and to real-world performance monitoring for SaMD. Manufacturers are also expected to provide periodic updates on changes implemented under the approved pre-specifications and algorithm change protocol. This framework lets the FDA and manufacturers monitor a product from premarket development through postmarket performance. It also allows regulatory oversight to embrace the iterative improvement power of SaMD while assuring patient safety.

Opportunities for ML in healthcare

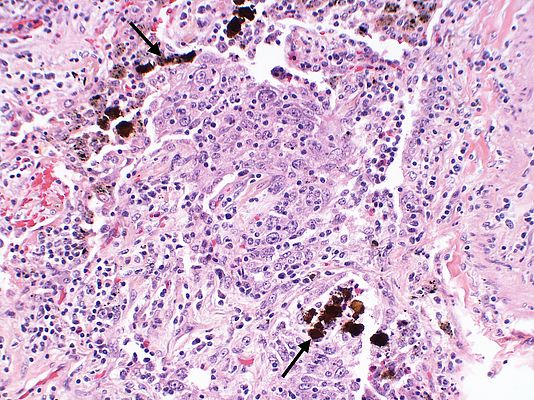

There’s a broad spectrum of ways that ML can be used to solve critical healthcare problems. Many of them involve imaging. Digital pathology, radiology, dermatology, vascular diagnostics and ophthalmology, for example, all rely on standard image processing techniques, which makes them natural candidates for ML.

Chest X-rays are the most common radiological procedure, with over 2 billion scans performed worldwide every year. That works out to roughly 5.5 million scans a day. Such a huge volume imposes a heavy load on radiologists and strains the efficiency of the workflow. ML methods, including deep neural networks (DNNs) and convolutional neural networks (CNNs), often match or outperform radiologists in speed and accuracy on specific tasks, although a radiologist's expertise remains of paramount importance. Under stressful conditions and rapid decision-making, human error rates can be as high as 30%. Aiding the decision-making process with ML can improve the quality of results by giving radiologists and other specialists an additional tool.
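As a quick check of scale, the daily and per-second volumes follow directly from the annual figure quoted above. The sketch below assumes only a 365-day year and an even spread of scans across it. The function name `scan_rates` is illustrative.

```python
# Convert an annual scan volume into average daily and per-second rates.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def scan_rates(scans_per_year: float, days_per_year: int = 365) -> tuple[float, float]:
    """Return (scans per day, scans per second), assuming an even spread over the year."""
    per_day = scans_per_year / days_per_year
    per_second = per_day / SECONDS_PER_DAY
    return per_day, per_second


per_day, per_second = scan_rates(2e9)
print(f"{per_day:,.0f} scans/day, {per_second:.1f} scans/s")
```

At about 63 scans every second around the clock, even a small per-scan time saving adds up quickly across the workflow.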

Validation of ML methods now comes from multiple reliable sources. In one study by the Stanford ML Group (https://stanfordmlgroup.github.io/projects/chexnet/), a 121-layer CNN called CheXNet was trained to detect pneumonia on chest X-rays. It exceeded the average performance of four radiologists. Similarly, in several studies by the National Institutes of Health and other organizations, DNN models for the early detection of cancerous pulmonary nodules achieved better accuracy than the diagnoses of multiple radiologists.

Adoption in digital pathology has been slower. Even so, in one breast cancer study, several algorithm-based detection methods compared well with, and sometimes outperformed, the assessments of multiple pathologists. Similarly, genome annotation approaches based on recurrent neural networks (RNNs) and long short-term memory (LSTM) networks produced better predictions of whether single nucleotide variants are potentially pathogenic.
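To make the RNN/LSTM idea concrete, here is a minimal sketch of an LSTM cell that reads a DNA window one nucleotide at a time and squashes its final state into a score between 0 and 1. It is not the model used in the genome annotation studies. The weights are random and untrained, and the names `variant_score` and `readout` are hypothetical. The sketch only shows the mechanics of the gates and the step-by-step recurrence.

```python
import math
import random

NUCLEOTIDES = "ACGT"
GATES = "ifgo"  # input, forget, candidate (g), output


def one_hot(base: str) -> list[float]:
    """Encode a nucleotide as a 4-element one-hot vector (unknown bases map to all zeros)."""
    return [1.0 if base == n else 0.0 for n in NUCLEOTIDES]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """Minimal LSTM cell with small random weights (untrained; for illustration only)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        cols = input_size + hidden_size  # each gate sees the concatenation [x_t, h_{t-1}]
        self.hidden_size = hidden_size
        self.w = {k: [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(hidden_size)]
                  for k in GATES}
        self.b = {k: [0.0] * hidden_size for k in GATES}

    def step(self, x: list[float], h: list[float], c: list[float]):
        xh = x + h  # list concatenation of input and previous hidden state
        pre = {k: [a + b for a, b in zip(matvec(self.w[k], xh), self.b[k])] for k in GATES}
        i = [sigmoid(z) for z in pre["i"]]
        f = [sigmoid(z) for z in pre["f"]]
        cand = [math.tanh(z) for z in pre["g"]]
        o = [sigmoid(z) for z in pre["o"]]
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, cand)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new


def variant_score(sequence: str, cell: LSTMCell, readout: list[float]) -> float:
    """Run the cell over a DNA window; squash the final hidden state to a score in (0, 1)."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for base in sequence:  # each step depends on the previous h and c
        h, c = cell.step(one_hot(base), h, c)
    return sigmoid(sum(w * hj for w, hj in zip(readout, h)))


cell = LSTMCell(input_size=4, hidden_size=8)
readout = [0.5] * 8  # hypothetical output weights
print(variant_score("ACGTTGCAAGT", cell, readout))
```

The time steps cannot run in parallel, because each step needs the previous hidden state. The work inside a step, however, consists of matrix-vector products that parallelize well.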

Many procedures in radiology, pathology, dermatology, vascular diagnostics and ophthalmology involve large images, sometimes 5 megapixels or more, which require complex image processing. The ML workflow itself can also be compute- and memory-intensive. Its dominant workload is linear algebra, which involves a very large number of operations and parameters.
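To see why the operation count grows so quickly, consider a single convolution layer. Each output pixel of each output channel needs `in_ch * kernel * kernel` multiply-accumulates. The sketch below assumes "same" padding and uses an illustrative 2560 × 2048 RGB image (about 5.2 megapixels) passed through one 3 × 3 layer with 64 filters. These sizes are assumptions chosen for the example, not taken from a specific model.

```python
def conv2d_cost(height: int, width: int, in_ch: int, out_ch: int, kernel: int, stride: int = 1):
    """Return (MACs, parameters) for one 2-D convolution layer with 'same' padding.

    Each output pixel of each output channel needs in_ch * kernel * kernel
    multiply-accumulates; parameters are the weights plus one bias per output channel.
    """
    out_h = -(-height // stride)  # ceiling division, as with 'same' padding
    out_w = -(-width // stride)
    macs = out_h * out_w * out_ch * in_ch * kernel * kernel
    params = out_ch * in_ch * kernel * kernel + out_ch
    return macs, params


# A ~5-megapixel RGB image (2560 x 2048) through a single 3x3 convolution with 64 filters.
macs, params = conv2d_cost(2048, 2560, in_ch=3, out_ch=64, kernel=3)
print(f"{macs / 1e9:.2f} GMACs, {params:,} parameters")
```

This one early layer already needs about 9 billion MACs while holding fewer than 2,000 parameters. A full network stacks many such layers, so both totals grow quickly.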

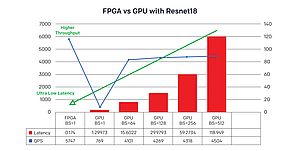

This translates into billions of multiply-accumulate (MAC) operations and hundreds of megabytes of parameter data. It also requires many operators and a highly distributed memory subsystem. As a result, running accurate image inference for tissue detection or classification on conventional PCs and GPUs tends to be inefficient, and healthcare companies are looking for alternative approaches.
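The parameter storage figure follows from simple arithmetic: the number of parameters times the bytes used per value. The sketch below assumes a hypothetical model with 60 million parameters. The function name `parameter_megabytes` is illustrative.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def parameter_megabytes(num_params: int, dtype: str = "fp32") -> float:
    """Storage needed for a model's weights, in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e6


# Hypothetical model with 60 million parameters.
for dtype in ("fp32", "fp16", "int8"):
    print(dtype, parameter_megabytes(60_000_000, dtype), "MB")
```

At 32-bit precision this hypothetical model already needs hundreds of megabytes. That is more than typically fits next to the compute units, so weights must be streamed in repeatedly, and memory bandwidth and locality become as important as raw arithmetic.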

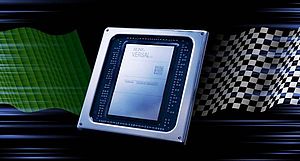

Improved efficiency with ACAP devices

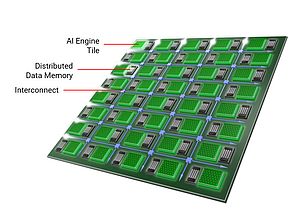

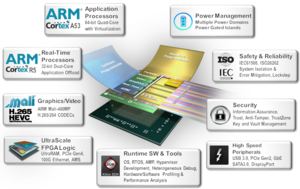

Xilinx technology offers a heterogeneous, highly distributed architecture to solve this problem for healthcare companies. The Xilinx Versal™ Adaptive Compute Acceleration Platform (ACAP) is a family of systems-on-chip (SoCs). It combines the following elements:

- adaptable field programmable gate array (FPGA) fabric
- integrated digital signal processors (DSPs)
- integrated deep learning accelerators
- SIMD VLIW engines with a highly distributed local memory architecture
- multi-processor systems

Together, these are designed for massively parallel signal processing of high-speed data in near real time.
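One way to picture how a distributed-memory architecture handles a large image is to split the image into tiles small enough to fit in each engine's local memory. Each tile is extended by a thin "halo" of neighbouring pixels so that a 3 × 3 filter can be applied to it independently. The tiles are then spread across many engines. This sketch is not how the Xilinx tools actually partition work. The tile size, halo width and engine count are hypothetical, and the function names are illustrative.

```python
def plan_tiles(height: int, width: int, tile: int, halo: int):
    """Split an image into tile x tile blocks, each extended by a halo of neighbouring pixels.

    Returns a list of (row0, col0, row1, col1) bounds, clipped to the image, so that each
    block can be processed independently (e.g. by a 3x3 filter when halo=1).
    """
    tiles = []
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            tiles.append((max(r - halo, 0), max(c - halo, 0),
                          min(r + tile + halo, height), min(c + tile + halo, width)))
    return tiles


def assign_round_robin(tiles, num_engines: int):
    """Distribute tiles evenly across a hypothetical number of parallel engines."""
    work = [[] for _ in range(num_engines)]
    for idx, t in enumerate(tiles):
        work[idx % num_engines].append(t)
    return work


tiles = plan_tiles(2048, 2560, tile=64, halo=1)
work = assign_round_robin(tiles, num_engines=400)
largest = max((r1 - r0) * (c1 - c0) for r0, c0, r1, c1 in tiles)
print(len(tiles), "tiles;", largest, "pixels in the largest tile")
```

Because every engine works on its own tile in its own local memory, the engines rarely need to contend for a shared memory bus. This is the property that the distributed local memory architecture is meant to exploit.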

Versal ACAP also provides a multi-terabit-per-second network-on-chip (NoC) interconnect and an advanced AI Engine array containing hundreds of tightly integrated VLIW SIMD processors. Together, these push compute capacity beyond 100 tera operations per second (TOPS).
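Peak figures such as "100 TOPS" come from multiplying out the number of engines, the MACs each engine completes per clock cycle, and the clock rate. Each MAC is conventionally counted as two operations. The configuration below (400 engines, 128 INT8 MACs per cycle, 1 GHz) is an illustrative assumption, not the specification of a particular Versal device.

```python
def peak_tops(num_engines: int, macs_per_cycle: int, clock_ghz: float) -> float:
    """Theoretical peak throughput in TOPS, counting each MAC as two operations."""
    ops_per_second = num_engines * macs_per_cycle * 2 * clock_ghz * 1e9
    return ops_per_second / 1e12


# Hypothetical configuration: 400 engines, 128 INT8 MACs per cycle each, 1 GHz clock.
print(peak_tops(400, 128, 1.0))
```

A peak number assumes every engine is busy on every cycle. Sustained throughput depends on keeping the engines fed with data, which is why the NoC bandwidth and local memory matter as much as the engine count.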

These capabilities can dramatically improve the efficiency with which complex healthcare ML algorithms run. They can also significantly accelerate healthcare applications at the edge while using fewer resources, lower cost and less power. Versal ACAP devices could also support recurrent networks inherently, thanks to the flexibility of the architecture and its supporting libraries.

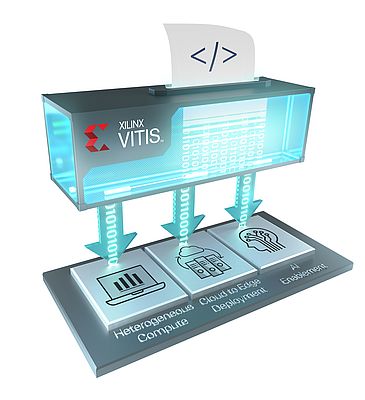

Xilinx has an innovative ecosystem for algorithm and application developers. It includes unified software platforms such as Vitis™ for application development and Vitis AI™ for optimizing and deploying accelerated ML inference. These platforms allow developers to use advanced devices such as ACAPs in their projects.
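A common step in optimizing a model for inference is quantization, which maps 32-bit floating-point weights to 8-bit integers. The sketch below shows generic symmetric per-tensor INT8 quantization with a single scale factor. The Vitis AI quantizer has its own scaling and calibration scheme, which this sketch does not reproduce. The weights and function names are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to int8 with a single scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-128, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from int8 codes."""
    return [x * scale for x in q]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now takes one byte instead of four. The rounding error per weight is at most half the scale factor, and accuracy is usually checked on real data after quantization.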

Healthcare and medical device workflows are undergoing major changes. In the future, medical workflows will be ‘Big Data’ enterprises with much higher requirements for computation, data privacy, security, patient safety and accuracy. Distributed, non-linear, parallel and heterogeneous computing platforms are key to solving and managing this complexity. Xilinx devices such as Versal ACAPs, together with the Vitis software platform, are ideal for delivering the optimized AI architectures of the future.

Subh Bhattacharya