Introduction: Real-time Machine-Learning Inference

Machine learning is the force behind new services that use natural voice interaction and image recognition to deliver seamless social media or call-center experiences. Moreover, because they can identify patterns or exceptions in vast quantities of data spanning large numbers of variables, trained deep-learning neural networks are also transforming how we conduct scientific research, plan finances, run smart cities, program industrial robots, and deliver digital business transformation through services such as digital twins and predictive maintenance.

Whether trained networks are deployed for inference in the Cloud or in embedded systems at the network edge, most users expect deterministic throughput and low latency. Achieving both simultaneously, within practicable size and power constraints, requires an efficient, massively parallel compute engine at the heart of a system architected to move data efficiently in and out. Such an architecture needs features such as a flexible memory hierarchy and adaptable high-bandwidth interconnects.

In contrast, the GPU-based engines typically used for training neural networks (a process that takes time and many teraFLOPS of compute) have rigid interconnect structures and memory hierarchies that are not well suited to real-time inference. Problems such as data replication, cache misses, and blocking commonly occur. A more flexible and scalable architecture is needed to achieve satisfactory inference performance.

Leading Projects Leverage Configurability

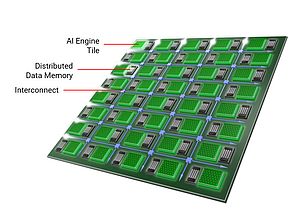

Field Programmable Gate Arrays (FPGAs) that integrate optimized compute tiles, distributed local memory, and adaptable, non-blocking shared interconnects can overcome these limitations to ensure deterministic throughput and low latency. Indeed, as machine-learning workloads become more demanding, cutting-edge projects such as Microsoft’s Project BrainWave are using FPGAs to execute real-time calculations cost-effectively and with extremely low latency that has proved unachievable using GPUs.

Another advanced machine-learning project, by global compute-services provider Alibaba Cloud, chose FPGAs as the foundation for a Deep Learning Processor (DLP) for image recognition and analysis. FPGAs enabled the DLP to achieve simultaneous low latency and high performance that the company’s Infrastructure Service Group believes could not have been realized using GPUs. Figure 1 shows results from the team’s analysis using a ResNet-18 deep residual network: the FPGA-based DLP achieves a latency of just 0.174 seconds, 86% faster than a comparable GPU case, while throughput measured in Queries Per Second (QPS) is more than seven times higher.

Because machine-learning frameworks tend to generate neural networks based on 32-bit floating-point arithmetic, Xilinx’s ML-Suite contains a quantizer tool that converts such a network to a fixed-point equivalent that is better suited to implementation in an FPGA. The quantizer is part of a set of middleware, compilation and optimization tools, and a runtime, collectively called xfDNN, which help ensure the neural network delivers the best possible performance in FPGA silicon.
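As a rough illustration of what a float-to-fixed-point conversion involves, the sketch below applies symmetric 8-bit quantization to a handful of weights in plain Python. It is not the xfDNN quantizer or its API: the function names `quantize_symmetric` and `dequantize`, the single per-tensor scale factor, and the sample weights are all assumptions made for illustration.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers that share one scale factor (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # largest representable magnitude, e.g. 127 for 8 bits
    max_abs = max((abs(v) for v in values), default=0.0)
    # Fall back to a scale of 1.0 when every value is zero, to avoid dividing by zero.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


# Illustrative weights only; a real network would supply its own tensors.
weights = [0.8, -1.0, 0.3, 0.0]
q_weights, scale = quantize_symmetric(weights)
restored = dequantize(q_weights, scale)
worst_error = max(abs(r - w) for r, w in zip(restored, weights))
print(q_weights, scale, worst_error)
```

With this scheme, each restored value differs from the original by at most about half a quantization step (`scale / 2`), which is the trade-off accepted in exchange for cheaper integer arithmetic.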

The ecosystem also builds on Xilinx’s acquisition of DeePhi Technology, using the DeePhi pruner to remove near-zero weights and to compress and simplify network layers. The DeePhi pruner has been shown to increase neural network speed by a factor of 10 and significantly reduce system power consumption without harming overall performance and accuracy.
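The idea of removing near-zero weights can be sketched with simple magnitude pruning, shown below in plain Python. This is a generic technique chosen for illustration and is not a description of how the DeePhi pruner works internally; the function names, the threshold value, and the sample weights are hypothetical.

```python
from typing import List


def prune_small_weights(weights: List[float], threshold: float) -> List[float]:
    """Zero out every weight whose magnitude falls below the threshold (hypothetical helper)."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def sparsity(weights: List[float]) -> float:
    """Return the fraction of weights that are exactly zero."""
    if not weights:
        return 0.0
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Illustrative layer weights and threshold; both are assumptions, not measured data.
layer = [0.9, -0.01, 0.002, -0.7, 0.03]
pruned = prune_small_weights(layer, threshold=0.05)
print(pruned, sparsity(pruned))
```

Zeroed weights can then be skipped or stored in a compressed form, which is where the savings in compute and memory come from. In practice, pruning is usually followed by retraining to recover any lost accuracy.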

Reconfigurable Compute for Future Flexibility

In addition to the challenges associated with ensuring the required inferencing performance, developers deploying machine learning must also bear in mind that the entire technological landscape around machine learning and artificial intelligence is changing rapidly; today’s state-of-the-art neural networks could be quickly superseded by newer, faster networks that may not fit well with legacy hardware architectures.

At present, commercial machine-learning applications tend to be focused on image handling and object or feature recognition, which are best handled using convolutional neural networks. This could change in the future as developers leverage the power of machine learning to accelerate tasks such as sorting through strings or analyzing unconnected data.

FPGAs are known to provide the performance acceleration and future flexibility that machine-learning practitioners need, not only to build high-performing and efficient inference engines for immediate deployment, but also to adapt to rapid changes in both the technology and the market demands for machine learning. The challenge is to make the architectural advantages of FPGAs accessible to machine-learning specialists while helping to ensure the best-performing and most efficient implementation.

Xilinx’s ecosystem has combined state-of-the-art FPGA tools with convenient APIs to let developers take full advantage of the silicon without having to learn the finer points of FPGA design.

By Daniel Eaton, Senior Manager, Strategic Marketing Development at Xilinx