
Interview: Jean-Yves Parfait (Multitel) and Franz Bourlet (IBM)
Focus: Digital Transformation
Focus: AI & Robotics
AI News
Interview: Fabrice Baranski - Logpickr
AI in Manufacturing Operations
Autonomous Driving to Level 5
Deep Learning Libraries, AI Embedded Edge Computer, OpenCL AI Platform
Event: Hannover Messe 2020
AI News
Machine Learning, Deep Learning, Machine Vision
Event: AI Convention Europe
Companies in this issue
Masthead

''We Can Train Machine Learning and Deep Learning Models up to 10 Times Faster''

Created in 1995 by the Engineering Faculty of the University of Mons, the research innovation center of Multitel has adopted IBM® Watson® Machine Learning Accelerator to harness the power of deep learning (DL)

Artificial Intelligence

Created in 1995 by the Engineering Faculty of the University of Mons, the research and innovation center Multitel has adopted IBM® Watson® Machine Learning Accelerator to harness the power of deep learning (DL) and tackle some of the biggest challenges of our time. Jean-Yves Parfait, AI Team Leader at Multitel, and Franz Bourlet, Power Systems Expert at IBM, explain why machine learning (ML) adds value for industrial players.

IEN Europe: How long has IBM been researching AI and machine learning?

F. Bourlet, IBM: Arthur Samuel is one of the pioneers of machine learning. While working at IBM, he wrote a checkers-playing program for the IBM 701, IBM’s first commercial computer. IBM Research has been exploring artificial intelligence and machine learning technologies and techniques for decades. We believe AI will transform the world in dramatic ways in the coming years, and we’re advancing the field through a research portfolio focused on three areas: Advancing AI, Scaling AI, and Trusting AI. We’re also working to accelerate AI research through collaboration with like-minded institutions and individuals to push the boundaries of AI faster, for the benefit of industry and society.

IEN Europe: Who are your main partners when it comes to AI and ML?

F. Bourlet, IBM: Founded in 2017, the MIT-IBM Watson AI Lab is a unique academic-corporate partnership to spur the evolution and universal adoption of AI. The lab focuses its research on healthcare, security, and finance, using technologies such as the IBM Cloud, AI platforms, blockchain and quantum computing to deliver the research to industry.

IEN Europe: Speaking of ML, what’s the main added value for industrial players?

J-Y. Parfait, Multitel: Industrial players may find value in machine learning in different ways: 1) creating new business opportunities through innovative services, 2) personalizing the relationship with the customer, and 3) enhancing the efficiency of internal processes (administrative processes, energy efficiency, fostering innovation as in drug or materials discovery, etc.).

IEN Europe: Which challenges in the industrial space could ML help solve?

J-Y. Parfait, Multitel: ML best complements humans when 1) the number of observable patterns, 2) the required processing speed, or 3) the number of decision variables is beyond what a human can handle. Some big industrial challenges ML is likely to help solve are production flow analysis and scheduling (potentially based on IoT data), predictive maintenance, quality control, and the automation of administrative processes.

IEN Europe: Could you give practical examples?

J-Y. Parfait, Multitel: Some examples inspired by Multitel’s past and ongoing projects:

- Production flow analysis/scheduling: analyzing the impact of a production line capacity upgrade on the company’s current internal processes and human resources, or the impact of changes in plant organization on productivity.

- Predictive maintenance: vibration sensors combined with ML can help estimate the remaining useful life of production assets, allowing plant managers to schedule maintenance operations cost-effectively.

- Quality control: 3D laser-camera technology combined with ML can be used to detect defects in products on very high-throughput production lines.

- NLP and chatbots: operators can use natural language processing (NLP) and chatbots to browse technical product documentation.
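The interview does not say which models Multitel uses for predictive maintenance. The Python sketch below is a deliberately simple, hypothetical stand-in for that kind of vibration-based approach. It reduces each window of raw vibration samples to an RMS level, fits a straight-line trend to those levels, and extrapolates the trend to a failure threshold to estimate remaining useful life. The function names, the linear-degradation assumption, the threshold and the readings are all illustrative. Real systems usually rely on richer features and learned models.

```python
import math
from typing import Optional, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def estimate_rul(
    times_h: Sequence[float],
    rms_levels: Sequence[float],
    failure_threshold: float,
) -> Optional[float]:
    """Estimate remaining useful life, in hours after the last measurement.

    Hypothetical model: the RMS level degrades linearly over time.
    Fits y = slope * t + intercept by ordinary least squares (needs at least
    two distinct times), then solves for the time y reaches the threshold.
    Returns None when the trend is flat or improving.
    """
    n = len(times_h)
    mean_t = sum(times_h) / n
    mean_y = sum(rms_levels) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_h, rms_levels))
    var = sum((t - mean_t) ** 2 for t in times_h)
    slope = cov / var
    if slope <= 0:
        return None  # no degradation trend, so no threshold crossing is predicted
    intercept = mean_y - slope * mean_t
    t_fail = (failure_threshold - intercept) / slope
    return max(0.0, t_fail - times_h[-1])


# Hypothetical RMS levels measured every 100 operating hours.
print(estimate_rul([0, 100, 200, 300], [1.0, 1.2, 1.4, 1.6], failure_threshold=2.0))
```

Least squares is used here only because it is the simplest trend estimator. A learned model would replace the straight line once enough run-to-failure data is available.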

IEN Europe: How does IBM® Watson® Machine Learning Accelerator help you harness the power of deep learning?

J-Y. Parfait, Multitel: Thanks to IBM Watson Machine Learning Accelerator, we can now train machine learning and deep learning models up to 10 times faster, reducing the total training time from weeks to just days. Training our models faster frees up more time for us to test and refine them, which in turn enables us to speed up downstream development.

Sara Ibrahim

Advantech To Drive the Digital Transition with 5G, AI and IoT

At its Industrial IoT World Partner Conference in Taiwan, from December 5 to 7, Advantech explained and demonstrated its strategy to drive the digital transition through 5G, AI and seamless IT-OT integration

Artificial Intelligence, Industry 4.0

At its Industrial IoT World Partner Conference, held at the company's headquarters in Taipei, Taiwan, from December 5th to 7th, Advantech outlined and demonstrated how the digital transformation will deeply change business models. In this process, AI and computer vision help accelerate problem solving for specific scenarios.

Creating ecosystems by combining different technologies on powerful cloud platforms is at the core of the strategy presented. ''About a year ago, Advantech held its IoT Co-Creation Summit in Suzhou, China, which attracted more than 6,000 participants eager to co-create the future of the IoT world. Now, we have more than 450 people from more than 40 countries joining our event here in Taipei,'' said Linda Tsai, President of Industrial IoT at Advantech.

The aim of the conference was also to show the evolution of the co-creation strategy and the main innovations released in collaboration with partners. ''We have 16 partners demonstrating their solutions co-created with Advantech,'' Linda Tsai added.

Partnering for Success

Advantech explained that its co-creation strategy is bearing fruit. Partnership is the key to success in the medium and long term, as it brings mutual commitment and a culture of mind-sharing. This in turn generates engagement and co-involvement in business development, while creating new value and growing trust, all elements of strong business success. ''If we go alone, we go very fast. But only if we go together will we go far,'' stated Linda Tsai at the end of her talk.

KC Liu, CEO of Advantech, showed how the company will accomplish its mission of co-creating the future of the industrial IoT world in 2020 and beyond. According to the breakdown of the AIoT industry value chain and market share he presented, the traditional business of edge computing and edge devices accounts for 25% of the overall IoT market. Communication devices (including wired and wireless devices, switches, routers, etc.) account for another 10%, as do software platforms such as Advantech's WISE-PaaS solution.

''The rest, and the biggest part (55%), will belong to domain solutions and industrial apps, and therefore to our customers, who are domain solution integrators. At Advantech, our traditional business for more than 30 years has been edge computing and industrial communication, or what we call the 'Phase I business','' explained KC Liu. Together, Phase I and Phase II (WISE-PaaS) have been Advantech's core business.

To penetrate Phase III, the domain solutions part, Advantech is leveraging partner co-creation and joint ventures. ''If we want to get to Phase III, we need to overcome the obstacle of so-called 'Industrial App decoupling' to reach stage 2.0 of WISE-PaaS. This will happen in 2020. The next breakthrough will be closed platform sharing, stage 3.0. After that, we can enable really big growth in domain innovation, stage 4.0. In four or five years, we will enjoy the big IoT boom,'' KC Liu forecast.

New portfolio of solutions to face the wave of growth

Software decoupling is a really important concept that will enable enormous growth through the integration of all the apps into a single software solution. Since advanced digital transformation is a must, Advantech revealed that it will announce a new I.Apps portfolio on WISE-PaaS Marketplace 2.0 to keep up with the growth expected from decoupling. Besides IoT, the main drivers of innovation are AI and 5G.

5G and AI are enablers of IIoT

For Linda Tsai, ''the integration of 5G and AI is essential to make IIoT happen. Applications are already there; we just need to be prepared for the new technology that is coming.'' That's why Advantech presented its high-security, 5G-ready network management solution, the ICT-4000, which guarantees low latency, high bandwidth, high capacity and low power.

The newly introduced xNavi software series also goes in this direction. This edge computing software enables machine vision inspection, production traceability, equipment monitoring and predictive maintenance, and supports most of Advantech's products. By bringing AI to the edge, high computing power and industrial efficiency are guaranteed.

Europe as one of the key markets

Advantech wishes to strengthen its position in Europe, which it considers a strategic market in terms of innovation and potential growth. ''With its variety of cultures, countries and languages, Europe is the cradle of innovation. Working in Europe with prominent pioneers of industrial automation like Siemens, Schneider Electric and Bosch gives us the possibility not only to consolidate our global presence but also to enhance our know-how and better understand customers’ requirements and expectations,'' said Chaney Ho, Co-founder and Executive Director of the Board at Advantech.

At this stage of Advantech’s expansion, improving the company’s global footprint is deemed essential. The US is currently Advantech's biggest market, followed by China and Europe, where growth of around 4 percentage points is expected over the next five years as a result of investments in engineering projects and localization. According to Mr. Ho: ''In our company culture, we believe that diversity creates innovation. And Europe is the best melting pot to open new perspectives and deliver progress.''

Sara Ibrahim

What’s in the Future of Robotics?

Industrial robotics is growing at a fast pace, even though a big question mark hangs over the consequences of this massive adoption. To anticipate what the future of robotics will look like, we have analyzed some data and current trends

Automation

While the world witnesses the unveiling of the first ''living robot'', which is able to swim around humans and to self-heal, and the CEO of the Sutton Trust, Lee Elliot Major, declares that ''the rise of robotics is taking away the traditional ladders of opportunity in the workplace,'' the industrial robotics market continues to grow fast. It does so despite the general downturn, especially in the automotive sector, and the trade war between China and the USA.

The latest report of the International Federation of Robotics estimates that more than 2.4 million industrial robots (2,439,543 to be exact) are currently in use in factories worldwide. The report also shows record global sales, with a value of 16.5 billion USD. According to Oxford Economics, the global stock of robots will multiply at an incredible pace in the coming years, reaching 20 million units by 2030, the majority of which (14 million) will be in China alone.

If these figures prove right, the consequences of this booming technology could be unpredictable and beyond our control. What is certain is that the employment market is set to change, and we can easily imagine a massive impact on manufacturing jobs.

All technologies in one robot

But how can we define industrial robotics? For Chetan Khona, Director of Industrial, Vision, Healthcare & Science Markets at Xilinx, robotics is a combination of all the technologies that are key to the operation of an industrial system. With robotics, you get multiple functions (such as industrial control, communication, vision, AI, machine learning, HMI, functional safety and cybersecurity) all in the same system. ''In robotics, all these technologies are coming together; everything has to be rolled into a single robot, whereas if you just take a piece of an industrial automation system you don’t have the same conjunction of different technologies at the same time,'' explained Chetan Khona.

For Xilinx, a US semiconductor company that develops FPGAs, programmable SoCs and ACAPs (adaptive compute acceleration platforms), robotics means not only traditional robotics but also collaborative robotics and automated guided vehicles (AGVs) for factory floors and warehouses. These are Xilinx’s three major robotics applications in the industrial sector. ''Our solutions are used by the most important players in robotics around the world, especially in critical applications,'' Mr. Khona added.

An upward trend

The high complexity of industrial robotics has not stopped its growth since 1960. The great variety of applications, sizes, machines and technologies illustrates the extent of this complexity. Currently, the robotics sector is growing at a fast pace. According to a McKinsey report released in July 2019, the total market for robot systems (which includes the robot arm, accessories, auxiliary hardware, software and programming, and installation) was worth 48 billion USD in 2017.

Investment expectations are also high, especially in the automotive industry: as early as 2018, McKinsey’s Global Robotics Survey found that 88% of the 85 OEMs interviewed anticipated an increase in investment in the sector. As for the type of robots installed, traditional robots, AGVs and cells are the most widely adopted technologies, but the installation rate of collaborative robots is growing, especially in the electronics industry.

Cobots on the rise

With their built-in safety systems, their ability to interact with workers and their simple connect-and-run setup, collaborative robots are becoming more and more popular in the industrial space. This explains why strong growth is expected, with more than 100,000 units estimated to be shipped in 2020. ''The robotics sector is growing in two directions: on the application side and on the collaborative robot side. Collaborative robots are the fastest-growing market segment, with a growth curve significantly above the average rate.

This is because a number of tasks are variable in nature and cannot be handed over completely to robots,'' said Chetan Khona. Cobots are better suited to work that requires a high degree of customization, which they can speed up while helping humans be more efficient.

For Xilinx, the impact of collaborative robots in the industrial environment will become prominent in the future. It will shift the market as employees are upskilled and their responsibilities pivot, for example toward managing robots on the production line.

Expected evolution of industrial robot communication protocols

When addressing industrial robotics, it is interesting to analyze how robots talk to each other. Currently, there is no standard for robot communication. ''Before, it was just a matter of making the robot controller communicate with other parts of the network and send messages to the robot's internal electronics. EtherCAT was perfectly fine for that. But in the future, we believe that communication will use more distributed technology,'' Mr. Khona explained.

This trend has already resulted in the increased adoption of the Data Distribution Service (DDS) in robotics. DDS is an open connectivity standard published by the Object Management Group (OMG). It is well suited to critical IoT applications because it provides low-latency data connectivity, extreme reliability and a scalable architecture. It is a way of distributing communication.

''DDS is a sort of distributed database where every element holds some piece of important information in a decentralized way. DDS has become more integrated into collaborative robots with the introduction of Robot Operating System 2 (ROS 2),'' explained Mr. Khona. One of Xilinx's contributions to the next generation of robotic systems is running ROS, in conjunction with DDS, over Time-Sensitive Networking (TSN). ''Having this deterministic networking as part of robot systems is something that we see as a major trend moving forward.''
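The Python sketch below is a toy, single-process illustration that makes the ''distributed database'' idea more concrete. It shows DDS-style data-centric publish/subscribe: publishers write samples to named topics, each sample belongs to a keyed instance, the shared data space keeps the latest value per instance, and a subscriber that joins late immediately receives the current state. This is not the DDS or ROS 2 API. Real DDS adds network discovery, QoS policies and typed topics. The class name `ToyDataSpace` and the topic and key names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Callback = Callable[[str, Any], None]


class ToyDataSpace:
    """In-process illustration of DDS-style data-centric publish/subscribe.

    Not a DDS implementation: no network, no discovery, and no QoS beyond
    keeping the latest sample for each (topic, key) instance.
    """

    def __init__(self) -> None:
        self._latest: Dict[str, Dict[str, Any]] = defaultdict(dict)
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def publish(self, topic: str, key: str, sample: Any) -> None:
        # The data space, not the publisher, holds the current state of each instance.
        self._latest[topic][key] = sample
        for callback in self._subscribers[topic]:
            callback(key, sample)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)
        # Late joiners immediately receive the latest value of every instance,
        # loosely mimicking DDS "transient local" durability.
        for key, sample in self._latest[topic].items():
            callback(key, sample)

    def read(self, topic: str, key: str) -> Any:
        return self._latest[topic].get(key)


space = ToyDataSpace()
space.publish("joint_state", "arm1/joint3", 42.0)  # hypothetical robot joint angle
space.subscribe("joint_state", lambda key, value: print(key, value))
space.publish("joint_state", "arm1/joint3", 43.5)
```

The design choice the sketch highlights is that state lives in the shared data space, keyed by topic and instance, rather than in point-to-point messages between a controller and its peripherals.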

Sara Ibrahim

''Manufacturing Companies Must Automate their Production to Stay Ahead of the Curve''

As the adoption of collaborative robots is rapidly growing in the manufacturing industry, we interviewed Terry Arden, CEO of LMI Technologies

Vision & Identification

Having integrated its 3D snapshot sensor into UR cobots, the Canadian company LMI Technologies is currently working to simplify robotic integration and set the standard for successful factory automation.

IEN Europe: The use of robots in the manufacturing industry is massively growing everywhere. What new challenges is factory automation bringing to manufacturing companies? And what does it change for end users?

T. Arden: Integrating robots into a manufacturing line challenges process control engineers to rethink part flow and learn how both robot and 3D sensors can work together to achieve faster, more efficient production. Manufacturing companies must automate their production and distribution systems to stay ahead of the curve, or they face being beaten by competitors who successfully embrace these technologies to leverage lower cost, higher production output, and a more dynamic infrastructure to quickly respond to customer demand for lower volume, specialized products.

IEN Europe: How did the project to integrate the Gocator 3D Snapshot Sensor into a robot originate? Do you plan to partner with other robot designers besides Universal Robots in the future?

T. Arden: This project got started because LMI foresaw the rapid adoption of collaborative robots in the manufacturing space. UR is a strong innovator in that space, and we share their vision to simplify robotic integration through software/integration partnership programs (UR+ program).

In response to the second part of this question: yes, definitely. LMI is working with other robot designers to simplify the integration steps involved with Gocator. As no standard robot communication protocols exist today, LMI has had to be proactive and invest time and effort in adapting our sensors to the different proprietary interfaces developed by each robot supplier. That said, we would love to see a set of standards emerge soon that simplifies integration with our sensors!

IEN Europe: At SPS 2018, LMI announced official certification for the integration of its Gocator® 3D snapshot sensors with Universal Robots. What’s your assessment one year after the certification?

T. Arden: The feedback has been great, and it has certainly opened up a lot of possibilities for using 3D smart sensors together with a variety of robot applications. We would love to see other robot manufacturers create third-party programs like UR+!

IEN Europe: How does 3D robot vision guidance work? In which cases does a robot need ''good eyes'' to perform its tasks?

T. Arden: Probably the most common vision guided robotics (VGR) application is pick and place, where a sensor is mounted over a work area in which the robot carries out pick and place movement (e.g., transferring parts from a conveyor to a box).

Another common VGR application is part inspection, where the manipulator on a robot moves a sensor to various features on a workpiece for inspection (e.g., gap and flush on a car body, or hole and stud dimension tolerancing).

Finally, the most sophisticated application of VGR is where the manipulator on a robot picks up a “jig” that contains a number of sensors, and is programmed to pick up a workpiece and guide it for insertion into a larger assembly using sensor feedback (e.g., door panel or windshield insertion).
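In all three applications, a core step is expressing what the sensor sees in the robot's coordinate frame. A common approach, assumed here rather than taken from LMI's or UR's software, is to use a 4x4 homogeneous transform obtained from hand-eye calibration. The Python sketch below maps a part position measured in the sensor frame into the robot base frame for a pick, then derives a pre-grasp waypoint above it. The calibration matrix is hypothetical and describes a sensor mounted 500 mm above the base and 400 mm along X, looking straight down.

```python
from typing import List, Sequence

Matrix4 = List[List[float]]

# Hypothetical calibration result (units: mm). The sensor origin sits at
# (400, 0, 500) in the robot base frame, rotated 180 degrees about X so that
# the sensor's +Z axis (its viewing direction) points down at the work area.
T_BASE_SENSOR: Matrix4 = [
    [1.0, 0.0, 0.0, 400.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 500.0],
    [0.0, 0.0, 0.0, 1.0],
]


def transform_point(T: Matrix4, p: Sequence[float]) -> List[float]:
    """Map point p = (x, y, z) through the homogeneous transform T."""
    v = (p[0], p[1], p[2], 1.0)  # homogeneous coordinates
    return [sum(T[row][col] * v[col] for col in range(4)) for row in range(3)]


def approach_point(target: Sequence[float], clearance_mm: float = 100.0) -> List[float]:
    """Hypothetical pre-grasp waypoint directly above the target in the base frame."""
    return [target[0], target[1], target[2] + clearance_mm]


# A part detected 450 mm in front of the sensor, slightly off-axis.
part_in_base = transform_point(T_BASE_SENSOR, (10.0, 20.0, 450.0))
print(part_in_base, approach_point(part_in_base))
```

In practice, the sensor also reports the part's orientation, so a full 6-DoF pose would be transformed rather than a single point.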

IEN Europe: What’s the next step in the development of this technology? Do you see further integration – with the robot and the sensor perfectly matching in a single product – possible or desirable in the future?

T. Arden: The next step in the advancement of smart 3D robot vision technology would be to leverage machine/deep learning to achieve more complicated robot manipulation, thereby optimizing completion time and improving the ability to handle complex and/or occluded parts. Integrating the vision sensor inside the robot itself is also a definite possibility at some point down the road. Hopefully we get there first!

IEN Europe: Can you describe LMI Technologies in 3 words?

T. Arden: FactorySmart 3D Solutions!

Sara Ibrahim

Automotive Industry to Invest More in Smart Factories

A study from the Capgemini Research Institute highlights that the automotive industry is planning to make 44% of its factories smart in the next five years

Artificial Intelligence, Automation

In a new research report, the Capgemini Research Institute sheds light on the automotive industry's accelerating investment in smart factories. According to the study, the automotive industry is planning to make 44% of its factories smart in the next five years, but it will also have to invest in skills and systems to take full advantage of them. The automotive industry is motoring ahead of its peers in terms of smart factory adoption and is set to increase investment by over 60% in the next three years, resulting in productivity gains of more than $160bn.

The “How automotive organizations can maximize the smart factory potential” report tracks deployment of smart factories by automotive Original Equipment Manufacturers (OEMs) and suppliers in 2019, compared to equivalent research from 2017/18. It found that both projected investment levels and productivity gains relating to smart factories are significant, but that only a minority of automotive firms are fully ready to take advantage through deployment at scale. Capgemini’s analysis ranks 72% of automotive firms as ‘novices’, compared to just 10% being ‘frontrunners’ who are ready to realize the full potential of smart factories at scale (18% of OEMs were frontrunners vs. 8% of suppliers).

Automotive industry has exceeded previous expectations on smart factory development

In the last 18-24 months, 30% of the industry's factories have been made smart, ahead of the 24% that executives said they had planned in 2017/18. Capgemini also found that almost half (48%) of executives believe they are making ‘good or better than expected’ progress on their smart factory roadmap, compared to 38% who gave the same answer 18 months earlier.

“There are three primary reasons why we took up the smart factory initiative,” says Dr. Seshu Bhagavatula, President, New Technologies and Business Initiatives at Ashok Leyland, one of the largest heavy vehicle manufacturers in India. “The first is to improve the productivity of our old factories through modernizing and digitizing their operations. The second is to deal with the quality issues that are difficult for human beings to detect. And the third is to incorporate made-to-order or mass-customization capabilities. All these formed part of a massive internal strategic program called Modular Business Program.”

Automotive is moving faster than other industries

In the next five years, the automotive industry has aggressive plans to convert a further 44% of its factories into smart facilities, ahead of discrete manufacturing (42%), process industries (41%), power, energy and utilities (40%), and consumer products (37%). This aggressive expansion is reflected in a 62% increase in the proportion of overall revenue the industry plans to invest in smart factories. Automotive companies will invest in a combination of greenfield and brownfield facilities: 44% intend to take a hybrid approach, 31% to convert brownfield factories (estimated to cost $4mn-$7.4mn per facility for a top-ten OEM), and 25% to build greenfield factories (at a cost of $1bn-$1.3bn per factory). Greenfield factories are considerably more expensive but easier to make efficient by design.

Investment in smart factories reflects a huge productivity opportunity

By 2023, the research estimates, smart factories could deliver a productivity gain in the region of $135bn (average scenario) to $167bn (optimistic scenario). That is an annual improvement of 2.8%-4.4% and an overall productivity gain of 15.1%-24.1% for the industry as a whole. The potential for these gains is already being demonstrated by companies such as Mercedes-Benz Cars, which has achieved a fourfold reduction in rejection rate on some key components by using advanced data analytics to create self-learning and self-optimizing production systems.
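Compounding offers a rough sanity check of how the annual and overall figures relate. The article does not say how the overall gain is calculated, so this sketch assumes the annual rate is compounded over five years. On that basis, 2.8% and 4.4% per year give about 14.8% and 24.0%. These values are close to, but not exactly, the report's 15.1%-24.1%, so the report's own method evidently differs slightly.

```python
def cumulative_gain(annual_rate: float, years: int) -> float:
    """Overall relative gain from compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years - 1.0


# Annual improvement range quoted above; the five-year horizon is an assumption.
for rate in (0.028, 0.044):
    print(f"{rate:.1%} per year for 5 years -> {cumulative_gain(rate, 5):.1%} overall")
```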

“Automotive companies have progressed better on their smart factory initiatives in the last two years and clearly plan to increase the pace of adoption from here onwards. Today, auto OEMs and suppliers are committing significant investment, and by 2023, we can expect these investments to pay off with organizations realizing annual productivity gains of at least 2.8% to 4.4%,” said Markus Winkler, Global Head of the Automotive Sector at Capgemini. “However, to get there, auto firms must address gaps in the talent pool, technology strategy and organizational commitment to deploy at scale, and realize the full benefits offered by smart factories. While smart factories are a critical part of the Intelligent Industry, OEMs and suppliers must also focus on smart operations, including smart asset management, smart supply chain and service management, to completely unlock the potential of the various technologies.”

Gains are yet to be realized

While the industry has set stiff KPI targets for its smart factories, these are a long way from being fulfilled: of the 35% productivity improvement target, just 15% has been achieved so far, and there has been only an 11% improvement in Overall Equipment Effectiveness (OEE) and reduced stocks/WIP, compared to targets of 38% and 37% respectively. This demonstrates that many initiatives are yet to be scaled fully.
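The article cites Overall Equipment Effectiveness without defining it. In its widely used form, OEE is the product of three factors: availability (run time over planned production time), performance (ideal cycle time multiplied by units produced, over run time) and quality (good units over units produced). The Python sketch below computes OEE from made-up shift figures. These are not Capgemini data, and the field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    planned_time_min: float      # planned production time
    run_time_min: float          # planned time minus downtime
    ideal_cycle_time_min: float  # fastest achievable time per unit
    total_count: int             # units produced
    good_count: int              # units passing quality checks


def oee(d: ShiftData) -> float:
    """OEE = availability x performance x quality."""
    availability = d.run_time_min / d.planned_time_min
    performance = (d.ideal_cycle_time_min * d.total_count) / d.run_time_min
    quality = d.good_count / d.total_count
    return availability * performance * quality


# Hypothetical shift: 0.8 availability, 0.9 performance, 0.95 quality.
shift = ShiftData(planned_time_min=500, run_time_min=400,
                  ideal_cycle_time_min=0.5, total_count=720, good_count=684)
print(f"OEE = {oee(shift):.1%}")
```

Because the three factors multiply, a modest shortfall in each one compounds. This helps explain why OEE gains can be slow to materialize.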

For automotive organizations to deploy smart factories at scale, the new report recommends that they set and commit to a vision, work hard to integrate IT solutions, and strengthen IT-OT convergence. Furthermore, they will need to build a talent base for the future and nurture a culture of data-driven operations.

"Everyone can become a process miner"

Fabrice Baranski, CEO of Logpickr, explains what process mining is and how it intertwines with Artificial Intelligence, creating new possibilities for prediction models

Artificial Intelligence, Vision & Identification

Fabrice Baranski has been CEO of Logpickr since 2017. A co-founder of this process mining software company, he draws on five years of R&D experience within the Orange group. Logpickr is the only French player in process mining. Its innovation lies in integrating AI into its software. The company's goal is to become a reference player in process mining, in France but also internationally. Logpickr is listed by Gartner as a Representative Vendor in the process mining field. The software is available in English and French.

IEN Europe: Can you introduce your company?

Fabrice Baranski: Logpickr is a young company created in 2017. It is a pioneer in process mining in France. We develop and publish innovative process mining software. Our software combines process mining with Artificial Intelligence: this is what differentiates us. We build on more than seven years of R&D (five of them within the Orange group). Logpickr is already listed by Gartner as a Representative Vendor in the process mining field.

IEN Europe: What is your definition of process mining?

F. Baranski: To use a medical analogy: companies monitor their activities and operations the way a doctor checks a patient's temperature with a thermometer. Process mining then plays the role of a scanner. It shows in detail how operations and processes are actually performed, not how they are supposed to be performed. This is a big step forward compared with existing process analysis, where audits are done manually. The advantage of this technology is that the audit is done very quickly, simply by analyzing the data already present in companies' information systems (IS).

IEN Europe: How do you collect the data? In what form do you retrieve it?

F. Baranski: We have built a whole series of interfaces to retrieve the data where it is, as it is. Our research at Orange was based on an evolving IS with both existing and new applications. With third-party and internal tools, we can retrieve data from ERP systems, databases and files to obtain the information we need to understand the processes. For this we need an "event log", that is, data on processes in the form of computer traces. Take an example: a bank and its loans. The computer traces are found in different applications. This information must contain three elements: What activity was done? When? For which case? With these three pieces of information, which can come from several data sources, the software can identify the processes. What sets Logpickr apart is that the software also adds all the business data related to the process: the amount of the loan, who was involved, which customer, etc. There is no limit to the data that can be processed. This enriches the analysis.
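The three pieces of information listed above (which activity, when, for which case) are the usual minimum for a process mining event log. The Python sketch below illustrates the general idea with hypothetical loan-process events and field names. It merges events from two sources, orders them per case to obtain each case's trace, and then attaches business attributes such as the loan amount. It is not Logpickr's implementation.

```python
from collections import defaultdict
from datetime import datetime
from typing import Any, Dict, Iterable, List, NamedTuple


class Event(NamedTuple):
    case_id: str         # for which case (e.g. a loan file)?
    activity: str        # which activity was done?
    timestamp: datetime  # when?


def build_traces(events: Iterable[Event]) -> Dict[str, List[str]]:
    """Group events by case and order each case's activities by time."""
    by_case: Dict[str, List[Event]] = defaultdict(list)
    for event in events:
        by_case[event.case_id].append(event)
    return {
        case: [ev.activity for ev in sorted(evs, key=lambda e: e.timestamp)]
        for case, evs in by_case.items()
    }


def enrich(traces: Dict[str, List[str]],
           case_attributes: Dict[str, Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Attach business data (amount, customer, ...) to each case's trace."""
    return {case: {"trace": trace, **case_attributes.get(case, {})}
            for case, trace in traces.items()}


# Hypothetical events exported from two systems, in arbitrary order.
loan_system = [
    Event("L-001", "Application received", datetime(2020, 1, 2, 9, 0)),
    Event("L-001", "Approved", datetime(2020, 1, 4, 16, 0)),
    Event("L-002", "Application received", datetime(2020, 1, 3, 9, 30)),
]
scoring_system = [Event("L-001", "Credit check", datetime(2020, 1, 2, 11, 0))]

traces = build_traces(loan_system + scoring_system)
print(enrich(traces, {"L-001": {"amount": 25000, "customer": "C-17"}}))
```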

IEN Europe: In which contexts, according to Logpickr, is process mining applied?

F. Baranski: As part of continuous improvement, process improvement or lean management. There are several application areas. The first is when one wishes to optimize a company's processes. There is a saying that we can only improve what we know well. Knowing your processes well requires an automatic audit using our software. The great advantage is that our audit is done very quickly and makes it very easy to identify where improvement is needed. The second category is process automation, or RPA (robotic process automation). These initiatives require a prior audit to identify how best to automate and how to optimize that automation. The third area is customer experience. From our point of view, the customer experience is a process like any other. With Logpickr, we can understand the customer experience and get a real view of the situation in real time. The last area is anticipation and prediction: anticipating failures in processes. Thanks to AI, Logpickr can create prediction models and raise alerts in case of failure, such as non-compliance with deadlines on open cases or other criteria that can be defined.

IEN Europe: What is the link between process identification and AI?

F. Baranski: Process mining is a way of obtaining new variables, new data, which are used to feed prediction models. Because Logpickr has built an innovative process mining approach, we achieve more powerful prediction results. We use this material to build predictive models and explain the root causes of process variation and failure. Our niche is combining our innovative process mining with AI, for prediction features but also for features that facilitate analysis. We want to make sure that everyone can become a process miner. Software and AI are at the service of humans to facilitate analysis.

IEN Europe: What works best in process mining?

F. Baranski: What works particularly well is what our field calls "process discovery": automatically discovering what is actually happening on the ground. This makes it possible to detect more or less effective variants of the way you run your business. With our innovative process mining, we can reflect a reality that is much closer to what happens on the ground. We also innovate with new prediction models which, in benchmarks, are far superior to other prediction models, including those from the academic world.
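The interview does not describe Logpickr's own discovery algorithms. A common and much simpler starting point for process discovery has two parts. The first is counting which activity directly follows which across all cases, which gives the directly-follows graph. The second is grouping cases with identical activity sequences into variants. The Python sketch below does both for hypothetical loan-process traces. It includes a rework loop ("Request documents") of the kind a less effective variant might show.

```python
from collections import Counter
from typing import Dict, List


def directly_follows(traces: Dict[str, List[str]]) -> Counter:
    """Count how often activity a is immediately followed by activity b."""
    dfg: Counter = Counter()
    for trace in traces.values():
        for a, b in zip(trace, trace[1:]):
            dfg[(a, b)] += 1
    return dfg


def variants(traces: Dict[str, List[str]]) -> Counter:
    """Count cases that share exactly the same activity sequence."""
    return Counter(tuple(trace) for trace in traces.values())


# Hypothetical traces, one activity sequence per case.
traces = {
    "L-001": ["Received", "Check", "Approved"],
    "L-002": ["Received", "Check", "Approved"],
    "L-003": ["Received", "Check", "Request documents", "Check", "Approved"],
}

for (a, b), n in directly_follows(traces).most_common():
    print(f"{a} -> {b}: {n}")
for variant, n in variants(traces).most_common():
    print(n, " > ".join(variant))
```

Edges that appear only in rare variants, such as the loop back to "Check" here, are typical starting points when looking for less effective ways of running a process.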

IEN Europe: What are the future implications of AI in process mining?

F. Baranski: We work a lot with leading academics in the field. We are always looking for ease of use and automatic optimization recommendations. Blocking points that may exist in a process are automatically corrected by AI, so we accelerate process automation and optimization.

IEN Europe: To what extent can the involvement of AI in process mining have a positive impact on the industry?

F. Baranski: An industrial company using process mining will continuously see and identify what is working well and what is working less well. It thus saves precious time in analyzing problems, or in optimizing the use of its resources against its objectives, such as customer satisfaction or the legal compliance of the product. Compared with data mining, process mining looks at behavior: how a process has unfolded in order to deliver a service. Performance improves constantly because the analysis is permanently available. We try to reach optimal industrial performance.

IEN Europe: What can be improved in process mining?

F. Baranski: There are two aspects. AI has brought interesting innovations to process intelligence that make it easier to use. The other point is that Logpickr revolutionizes process mining with a new way of looking at it, especially in areas that are poorly handled at present, such as complex processes. The complex processes inherent in big companies are not handled well by current tools. Logpickr combined with AI delivers better performance. AI will bring benefits in ease of use and ease of analysis, so that humans can really focus on innovation, on their ability to have new ideas, and on their organization, processes, customer management and more, to understand what is most effective.

I still do not believe in an AI capable of proposing organizational changes. From the outset, at Orange we had to deal with complex or very large cases, and we noticed that they were not handled well by the available tools, including current open-source tools. AI enriches us by inventing new ways to achieve better results.

IEN Europe: How do you fit into the French AI landscape?

F. Baranski: We have an atypical profile in the AI landscape. We are the only process mining company in France. We invent new ways to use AI by feeding the results of process mining into AI. Existing algorithms are used in a different way, with strong innovation at this level. We look at what is done in other areas to see whether we can apply it at Logpickr, and what gains it would bring.

IEN Europe: What is your message for users or future users of process mining?

F. Baranski: Process mining makes it possible to increase efficiency and productivity where current approaches (lean management, Six Sigma, process automation) reach their limits. Applied systematically and repeatedly, it gives you greater transparency on your organization, but also better operational quality and therefore greater customer satisfaction. It is a tool with no limits to its scope of application, and it is essential for managing and optimizing all the processes and operations of your company.

Anis Zenadji

AI-based Predictive Maintenance can Significantly Increase Robot Availability

Outdated maintenance procedures waste valuable resources and can affect safety. Modern predictive techniques, however, reduce the costs of both scheduled and unscheduled downtime and are a key contributor to increased machine availability.

Artificial Intelligence

A recent Frost & Sullivan report estimated the cost of unplanned downtime for industrial manufacturers at more than €45bn. It noted that 42% of unplanned downtime was primarily caused by factory equipment failures. The cost of unplanned downtime extends much further than lost production. Unplanned outages force a reactive and costly approach to maintenance, repair and equipment replacement in an effort to get the line up and running as quickly as possible. Predictive maintenance, in contrast, provides early warning of failing or deteriorating parts. This information gives service teams the opportunity to perform maintenance well in advance of any actual failure, thereby reducing unplanned downtime.

Modern predictive maintenance technologies are becoming ever smarter. Indeed, the same Frost & Sullivan report estimated that these technologies could drive productivity gains of 66% among maintenance teams.

Predictive maintenance as game-changer

We are already seeing the integration of predictive maintenance tools such as Mitsubishi Electric’s Smart Condition Monitoring (SCM) technology, which provides early warning of impending failure on rotating machinery. But there is more to come, with artificial intelligence (AI) offering the potential to take predictive maintenance to a new level.

Applying AI-based machine learning algorithms drives even greater insights into the machine’s operation, not simply comparing current performance with pre-established baselines but going further to base decisions on real-time data and past trends.
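The difference between comparing against a fixed baseline and using past trends can be illustrated with a deliberately simple stand-in: fit a straight line to recent condition readings and extrapolate when it will cross an alarm limit. This is not Mitsubishi Electric's algorithm (the article does not describe it); the sensor, units, readings and threshold are hypothetical, and a production system would use richer models and uncertainty estimates.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit of y = slope * t + intercept."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    sxx = sum((t - mean_t) ** 2 for t in ts)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


def hours_until_threshold(ts, ys, threshold):
    """Extrapolate the fitted trend to the alarm threshold.

    Returns the remaining operating hours after the last sample, or None
    if the trend is flat or improving (no crossing predicted).
    """
    slope, intercept = fit_line(ts, ys)
    if slope <= 0:
        return None
    t_cross = (threshold - intercept) / slope
    return max(0.0, t_cross - ts[-1])


# Hypothetical vibration readings (mm/s RMS), one every 100 operating hours.
hours = [0, 100, 200, 300, 400]
vibration = [2.0, 2.2, 2.4, 2.6, 2.8]

# A fixed baseline check ("alarm above 4.5") sees no problem yet;
# the trend estimates when that limit will be reached.
print(hours_until_threshold(hours, vibration, threshold=4.5))  # about 850 hours
```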

AI-based predictive maintenance in action

A good example of AI-based predictive maintenance in action is the application of Mitsubishi Electric's MELFA Smart Plus to its latest generation of industrial robots. This integrated technology precisely monitors how long each of the main robot components is in motion and derives maintenance schedules from actual operating conditions. It also offers simulation capabilities to predict the robot's lifetime during the design phase of the application and to estimate annual maintenance costs. This gives engineers the opportunity to modify the robot's operation to extend its lifecycle.
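The idea of deriving maintenance schedules from actual motion time rather than a fixed calendar can be sketched as follows. Component names, rated lives, load factors and the 16 motion hours per day are hypothetical, and the linear load scaling is a simplifying assumption, not how MELFA Smart Plus computes its schedules.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    rated_motion_hours: float  # hypothetical rated life at nominal load
    motion_hours: float        # accumulated time in motion so far
    load_factor: float         # 1.0 = nominal duty; >1.0 = harsher duty

    def remaining_hours(self) -> float:
        """Remaining motion hours, assuming wear scales linearly with load."""
        effective_used = self.motion_hours * self.load_factor
        remaining_budget = max(0.0, self.rated_motion_hours - effective_used)
        return remaining_budget / self.load_factor


def days_until_service(component: Component, motion_hours_per_day: float) -> float:
    """Convert remaining motion hours into calendar days at the current duty cycle."""
    return component.remaining_hours() / motion_hours_per_day


# Hypothetical joints: same age, but J2 works under a heavier load.
joints = [
    Component("J1 reducer", rated_motion_hours=20000, motion_hours=6000, load_factor=1.0),
    Component("J2 reducer", rated_motion_hours=20000, motion_hours=6000, load_factor=1.5),
]

for c in sorted(joints, key=lambda c: days_until_service(c, 16)):
    print(f"{c.name}: service due in about {days_until_service(c, 16):.0f} days")
```

Two components with identical running hours end up on different schedules because one works harder, which is the point of basing maintenance on actual operating conditions.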

MELFA RV-8CRL industrial robot

Mitsubishi Electric's MELFA RV-8CRL will be the latest industrial robot to benefit from the Smart Plus technology. This robot has been built from the outset to minimise maintenance requirements. It incorporates features such as a beltless coaxial drive mechanism for reduced wear. It also uses the latest servomotors from Mitsubishi Electric, which eliminate the need for batteries to back up the robot's internal encoder. Combining these advanced design features with AI-based predictive maintenance can significantly increase availability by preventing unscheduled downtime.

There is still much more to come from AI-based predictive maintenance, and Mitsubishi Electric is at the forefront of these technologies, enabling companies to derive the maximum gains from artificial intelligence.

By Oliver Giertz, Product Manager EMEA for Servo/Motion and Robotics at Mitsubishi Electric Europe

AI in Manufacturing Report

The Capgemini Research Institute’s report outlines current AI adoption in the manufacturing sector

Artificial Intelligence

The Capgemini Research Institute has released a report titled "Scaling AI in Manufacturing Operations: Practitioners' Perspective", which details the current state of Artificial Intelligence (AI) adoption in the manufacturing sector. The institute analyzed 300 leading global manufacturers in the automotive, industrial manufacturing, consumer products, and aerospace and defense sectors, and interviewed 30 senior industry executives involved in AI projects. The main findings are as follows:

Facts and figures

Europe reasserts its innovativeness and forward thinking: 51% of its top manufacturers are currently implementing AI projects. The rate is even higher in Germany, where 69% of manufacturers use AI applications. Japan follows Europe with a 30% AI adoption rate among its leading manufacturers, narrowly followed by the USA (28%), while China reports the lowest rate of AI implementation, at only around 11%.

AI in the value chain

The implementation of AI shows clear added value and benefits throughout the entire value chain.

Out of the 22 use cases analyzed, three were identified as the best use cases and as the possible starting points for other organizations to implement AI projects: intelligent maintenance, product quality inspection, and demand planning.

For example, General Motors uses a computer vision system to detect component failure and prevent downtime, Bridgestone performs tire quality control through AI, which resulted in a 15% improvement over traditional methods, while Danone was able to use machine learning to predict demand, reducing the loss of sales by 30%.

These applications are particularly suitable because they are relatively easy to implement: relevant data and AI know-how and/or standardized solutions are already available. Furthermore, all three use cases improve the visibility and explainability of processes, which facilitates AI adoption by operational teams and fosters a systematic mindset shift towards AI in the plant and across the workforce.

Scaling AI adoption

While successful AI implementation in manufacturing depends on several factors, the most important factor according to the report is the successful deployment of AI prototypes in live engineering environments, including the automation of the collection of real-time data and the prototypes’ integration with legacy IT and IIoT systems.

It is also important for manufacturers to design a data governance framework and establish a central data & AI platform to store and analyze data using AI, thus making it available for issue-specific AI applications. This will also contribute to the development of AI, data science and data engineering expertise directly related to manufacturing applications. After this foundational step, AI applications can be effectively implemented and shared across the entire manufacturing network, including multiple sites and factories.


Enabling Level 5 Autonomous Driving

Level 5 autonomous driving represents the highest level of driving automation. Enabling in-vehicle AI and deep learning with High Performance Embedded Computing (HPEC) installed on vehicles can deliver cutting-edge performance and great reliability.

Artificial Intelligence

A Level 5 vehicle does not require any control from human beings: steering wheel, brake and acceleration pedals are completely removed, and the vehicle determines every action in all conditions.

The automotive industry alone is investing billions of dollars in developing autonomous driving technologies, and numerous other vertical markets, such as defense, are following similar roadmaps.

The challenges of Level 5 autonomous driving

All these players are encountering a number of new challenges that span many disciplines and technologies. In this article we focus on hardware requirements that are completely novel and on how to meet them.

Performance

Sensors, LIDARs and other technologies supporting autonomous driving generate unprecedented amounts of data. They require ultra-high computational performance beyond the capabilities of traditional embedded computers.

Some sophisticated sensors require a bandwidth of 40Gb/s to transfer data, not only in peak conditions, but for continuous operations. Moreover, Level 5 autonomous driving applications require constant, reliable and real-time operations while keeping latency as low as possible.

Storage capacity

Autonomous driving applications far exceed the storage capacity of typical embedded computing devices. The 40Gb/s bandwidth mentioned above translates to almost 20TB in only one hour of operation.
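The storage figure follows from simple unit conversion. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and ignoring protocol and file-system overhead:

```python
def terabytes_per_hour(gbit_per_s: float) -> float:
    """Sustained link rate in Gb/s -> decimal terabytes written per hour."""
    bytes_per_s = gbit_per_s * 1e9 / 8   # 8 bits per byte
    return bytes_per_s * 3600 / 1e12     # 3600 s per hour, 1 TB = 10**12 bytes


print(terabytes_per_hour(40))  # 18.0
```

A single 40Gb/s stream therefore produces about 18TB per hour, which is the "almost 20TB" quoted in the text.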

Ruggedness

High Performance Embedded Computing (HPEC) systems and data loggers installed on vehicles must provide reliable, continuous operation for long periods of time. They must operate in very harsh environments, withstanding shocks, vibrations, dust and wide temperature ranges.

Certifications

Embedded and electronic systems and Edge computers installed into vehicles must comply with industry standards. Automotive certifications, such as E-Mark and IEC 60068-2-6 / 60068-2-27 for shock and vibration are objective ways for characterizing the behavior of the system under stress in actual operating conditions.

Compactness

Space is more often than not at a premium in embedded applications. Systems designed for embedded environments must be compact so that they can be easily installed in vehicles.

However, HPEC systems deliver a tremendous amount of computational power, and they heat up easily. Dissipating such intense heat requires an adequate, powerful cooling system that can still be easily installed in the vehicle.

Cooling

High Performance Computing systems are typically bulkier than embedded systems because of heat dissipation: they are usually equipped with big fans that cannot be used in embedded applications, where performance is sacrificed to fit space constraints.

Eurotech has extensive expertise in designing liquid-cooled HPC (High Performance Computing) and HPEC systems. Liquid cooling is an ideal solution for HPEC systems in autonomous driving, as most cars are already equipped with liquid cooling systems.

Compared to air cooling, liquid cooling allows greater computational density and better energy efficiency: even though Eurotech's HPEC systems can draw up to 500W, the coolant stays at a temperature of around 41-43°C.

Bringing data center-class performance into vehicles

Over the past 25 years, Eurotech has offered HPC (High Performance Computing) and HPEC (High Performance Embedded Computing) solutions to customers seeking cutting-edge performance and reliability. The latest product portfolio delivers a complete set of devices for building sophisticated and resilient computational architectures in the field.

Edge training and inference: bringing AI to the Edge

The DynaCOR 50-35 is an HPEC server based on dual Intel Xeon E5-2600 CPUs and up to 2x NVIDIA GTX 1070 GPU cards that enable edge AI applications. The combination of CPUs and GPUs enables the processing of deep learning algorithms, allowing inference and training of AI models directly in the field. This enables in-vehicle systems to respond autonomously, as required for Level 5 autonomous driving.

Unprecedented data logging capacity

The DynaCOR 40-34 brings unprecedented networked storage capabilities with up to 2x 7.68TB NVMe SSDs and 2x 40/56GbE ports. Powered by the Intel Xeon E3 CPU family, it features 32GB of soldered-down RAM and one 256GB SATA SSD.

The DynaCOR 40-35 features up to 123TB of raw capacity and 2x 100GbE ports, for sustained data transfer of up to 6GByte/s; the system supports advanced RAID configurations for extra performance and data protection. Powered by the Intel Xeon D-2100 CPU family with up to 16 cores and a turbo clock speed of 3.00GHz, it features 64GB of soldered-down ECC DDR4 memory at 2400 MT/s and one 512GB NVMe SSD for the system image and user applications.
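Combining the capacity and transfer-rate figures gives a rough sense of how long the DynaCOR 40-35 can record before its drives fill up. This is a back-of-the-envelope sketch, assuming decimal units and the full raw capacity; RAID redundancy would reduce usable capacity, so real recording times would be shorter.

```python
def hours_to_fill(capacity_tb: float, rate_gbyte_per_s: float) -> float:
    """Hours of continuous recording before `capacity_tb` (decimal TB) is full."""
    seconds = capacity_tb * 1e12 / (rate_gbyte_per_s * 1e9)
    return seconds / 3600


# Full 123TB raw capacity at the maximum sustained 6GByte/s.
print(round(hours_to_fill(123, 6), 1))       # 5.7
# The same capacity fed by a single 40Gb/s sensor stream (40 / 8 = 5GByte/s).
print(round(hours_to_fill(123, 40 / 8), 1))  # 6.8
```

Note that 6GByte/s corresponds to 48Gb/s, so the sustained write rate has headroom over the 40Gb/s sensor example discussed earlier.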

The DynaCOR 40-34 and DynaCOR 40-35 feature an optional vehicle Docking Station: systems can be quickly swapped to make data instantly available for off-vehicle processing.

All DynaCOR systems (including the DynaCOR 50-35) are highly configurable, and more configuration options are available through Eurotech Professional Services: the system's active midplane provides up to 96 PCIe lanes for additional GPUs, NVMe drives, high-speed networking cards and specialized modules, such as vehicle bus interfaces, high-speed frame grabbers and more.

Extreme networking performance with HPEC switches

The DynaNET 10G-01 features 52 GbE ports for a total switching capacity of 176Gb/s: the ports can be stacked to further increase the port count, or can be connected to a 40Gb/s backbone. It is the ideal solution for applications where a large number of devices and sensors, such as the LIDARs, RADARs and high-definition cameras typically found in autonomous vehicles, need to be reliably connected.

The DynaNET 100G-01 is an ideal fit for applications where extreme networking performance, reliability and compactness are required, such as autonomous driving, artificial vision and HPEC. It features a total throughput of 3.2Tb/s, a processing capacity of 2.38 billion packets per second (Bpps) and 300ns port-to-port latency, delivering significant benefits in terms of data traffic predictability at very low latency. It provides a backbone infrastructure of 16x 40/56/100GbE ports that can be split into up to 32x 50GbE or 64x 10/25GbE ports to increase the port count.
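The 3.2Tb/s figure is consistent with the port configuration if switching capacity is counted in both directions, a common vendor convention that is assumed here rather than stated in the text:

```python
def switching_capacity_gbps(ports: int, port_speed_gbps: float, full_duplex: bool = True) -> float:
    """Aggregate switching capacity; full duplex counts both directions."""
    return ports * port_speed_gbps * (2 if full_duplex else 1)


print(switching_capacity_gbps(16, 100))  # 3200 Gb/s, i.e. 3.2Tb/s
print(switching_capacity_gbps(32, 50))   # 3200: splitting ports keeps the total
print(switching_capacity_gbps(64, 25))   # 3200
```

Splitting each 100GbE port into lower-speed ports increases the port count without changing the aggregate capacity.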

Both the DynaNET 10G-01 and the DynaNET 100G-01 feature Layer 3 switching, allowing great control over traffic and a more deterministic management of services and data streams. This will benefit all those applications where it is necessary to avoid data starvation and preserve the deterministic behavior of the network.

Deep Learning Segmentation & Classification Libraries

Optimized for analyzing images in machine vision applications

Artificial Intelligence

Euresys has released EasySegment, a new deep learning library that works in unsupervised mode: it is trained with "good" images only and can then detect and segment anomalies and defects in new images. EasySegment works with any image resolution, supports data augmentation and masks, and is compatible with both CPU and GPU processing. EasySegment complements EasyClassify as one of Open eVision's deep learning libraries.
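EasySegment's internal model is not described here, but the principle of learning only from "good" images can be illustrated with a much simpler, classical stand-in: model each pixel's normal intensity from defect-free samples and flag pixels that deviate strongly. The images, the threshold k and the per-pixel statistics are assumptions for illustration; deep-learning segmenters learn far richer features and do not require pixel-perfect alignment.

```python
from statistics import mean, pstdev


def fit_good_model(good_images):
    """Per-pixel mean and standard deviation over defect-free images."""
    h, w = len(good_images[0]), len(good_images[0][0])
    mu = [[mean(img[r][c] for img in good_images) for c in range(w)] for r in range(h)]
    sd = [[pstdev([img[r][c] for img in good_images]) for c in range(w)] for r in range(h)]
    return mu, sd


def segment_defects(image, model, k=3.0, eps=1e-3):
    """Boolean mask: True where a pixel deviates more than k std devs from 'good'."""
    mu, sd = model
    return [
        [abs(px - mu[r][c]) / (sd[r][c] + eps) > k for c, px in enumerate(row)]
        for r, row in enumerate(image)
    ]


# Hypothetical, aligned 3x3 grayscale images of a defect-free part.
good = [
    [[100, 101, 99], [100, 100, 100], [99, 101, 100]],
    [[101, 100, 100], [99, 100, 101], [100, 100, 99]],
    [[99, 99, 101], [101, 100, 99], [101, 99, 101]],
]
model = fit_good_model(good)

test_image = [[100, 100, 100], [100, 100, 180], [100, 100, 100]]  # bright spot
mask = segment_defects(test_image, model)
for row in mask:
    print(row)
```

No defective samples are needed for training: only the bright pixel in the middle row, right column is flagged, because it falls far outside the variation seen in the good images.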

The software libraries come with the free Deep Learning Studio application as part of the Deep Learning Bundle

As a classification tool, EasyClassify can detect defective products and sort them into various classes. It supports data augmentation and works with as few as one hundred training images per class. Both libraries are easy to use and come with the free Deep Learning Studio application, which enables users to evaluate the capabilities of the libraries, create and manage datasets, and supervise the training process.

AI Embedded Edge Computer

Optimized for deep and machine learning

Industry 4.0, Artificial Intelligence

Syslogic offers a wide portfolio of Embedded Box PCs, with various models available to fit all industrial requirements. Among them, the AI Embedded System (Edge Computer) is built on an NVIDIA Jetson TX2 module, a processor platform that combines a dual-core NVIDIA Denver 2 CPU, a quad-core ARM Cortex-A57 CPU and a Pascal GPU.

Robustness and continuous operation

This module is specifically optimized for real-time use of artificial intelligence, thanks to the 256 shader cores of its Pascal GPU. The design is robust and has no moving parts, which makes the product maintenance-free and prolongs its availability. It can operate in temperatures ranging from -40°C to +70°C.

It suits applications in the field of machine vision, intelligent control, deep learning and machine learning. The intended markets are industrial automation, Automated Guided Vehicle (AGV), traffic control, and cleantech.

OpenCL AI Platform

Helps build custom engines for compute-intensive workloads

Electronics & Electricity, Artificial Intelligence

Arrow Electronics simplifies AI implementation in high-performance computing with a new platform ready to run on the BittWare 385A FPGA accelerator card. The software modules developed by Arrow are suitable for popular AI workloads, come pre-loaded on a powerful, configurable heterogeneous compute engine and are available through Arrow Testdrive™. Created in OpenCL, these modules help users build custom engines for applications such as image processing and facial recognition. The new AI platform includes a compiler that lets users create their own applications if desired.

Easy access to the AI platform through the Testdrive™ program, which lets users evaluate development tools

The modules are provided preloaded on the accelerator card and currently include vector addition, FFT and 2-dimensional FFT, edge detection, file transcoding, face detection, and Sobel edge detection. New functions that extend support and accelerate adoption are being developed continuously. The BittWare 385A includes the Intel Arria 10 1150 GX FPGA, connected to the host via PCIe Gen 3 x8 and to two 40Gb Ethernet QSFP+ optical interfaces for off-board communication. The accelerator card contains two banks of 4-16GB DDR3 memory running at up to 2133 MT/s.
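Sobel edge detection, one of the preloaded modules, computes horizontal and vertical intensity gradients with two 3x3 kernels. The plain-Python reference below shows what such a module computes; it is not Arrow's OpenCL code, and the |Gx| + |Gy| magnitude (instead of the square root of the sum of squares) is an assumed simplification often used in hardware.

```python
def sobel_magnitude(img):
    """Approximate gradient magnitude |Gx| + |Gy|; border pixels are left at 0."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(kx[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            gy = sum(ky[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            out[r][c] = abs(gx) + abs(gy)
    return out


# A vertical step edge: dark on the left, bright on the right.
img = [[0, 0, 10, 10] for _ in range(4)]
for row in sobel_magnitude(img):
    print(row)
# [0, 0, 0, 0]
# [0, 40, 40, 0]
# [0, 40, 40, 0]
# [0, 0, 0, 0]
```

Every output pixel depends only on its 3x3 neighbourhood, which is why this kind of filter maps well onto the parallel pipelines of an FPGA.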

Explore the Future Hub at Hannover Messe 2020

Future-proofing the industrial sector

Artificial Intelligence, Industry 4.0

Hannover Messe returns in 2020, more than 70 years after it was founded in Hannover in 1947.

For its 72nd edition in 2020, Hannover Messe will be centered on the lead theme of "Industrial Transformation" and will explore how innovation, technological disruption, changing buyer behavior and a heightened awareness of climate and sustainability requirements are reshaping the industrial sector.

The optimized layout and clearer thematic structure will facilitate the Hannover Messe experience, be it from the perspective of a visitor or an exhibitor.

Future Hub

Located in Halls 24 and 25, the Future Hub will be the one-stop shop for all topics concerning innovation culture and the future of the workplace and the plant.

Through a programmatic focus on Research & Development, the display is especially dedicated to startups, new technologies, and Work 4.0. The Future Hub will work as a platform to facilitate knowledge transfer between industrial expertise and academic research, resulting in the production of innovative solutions for the requirements of tomorrow’s industrial sector.

Exhibitors will include technical academic institutions such as Uni Freiburg and Universität Stuttgart, public research and innovation bodies (Upper Austrian Research, FZI Forschungszentrum Informatik, Innovationsland Niedersachsen), and commercial enterprises (ETO MAGNETIC, Innovation Lab). The Future Hub will also host the tech transfer Forum and Matchmaking as well as the tech transfer HERMES AWARD, which rewards innovative exhibitors for their products and solutions.

Staying Competitive

Thanks to the variety of exhibitors present, the Future Hub will provide inspiration on how to ride the wave of the digital revolution in a way that makes sense for each specific business and industrial sector. Year after year, the Hannover Messe accompanies and fosters transformation through topical themed displays and events that reflect current trends in the industry.

Dr Jochen Köckler, Chairman of the Board at Deutsche Messe, stated: "Under the heading 'Industrial Transformation', Hannover Messe will reassert its role as a springboard to a networked, automated and globally oriented industrial base. In Hannover our visitors will find all the technologies and partners they need to remain competitive in the future."

Register here to get your free ticket to Hannover Messe 2020.

For more info hannovermesse.de/en/

Smart Cities Market Valued at US$ 900 Bn

Security solutions and the Asia Pacific region are expected to register the highest growth rates

Artificial Intelligence, Industry 4.0

According to a global market study by Persistence Market Research, the smart cities market is forecast to grow at a CAGR of 18% between 2019 and 2029, reaching a value of more than US$900 Bn.

As of 2018, the key commercial players are Cisco Systems, AT&T, Microsoft Corporation, IBM Corporation, Ericsson, Siemens AG, Schneider Electric, Huawei Technologies, General Electric, and Signify. Together they hold 45% to 50% of the total market share in terms of value.

Among the principal enabling factors for the adoption of smart city technology, public-private partnerships between governments and businesses make it possible to invest capital and accelerate growth, while the integration of cloud, mobile, Big Data and Artificial Intelligence technologies establishes a connected environment ready for smart city initiatives.
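A compound annual growth rate relates start and end values by end = start × (1 + CAGR)^years. Assuming the US$900 Bn is the 2029 value and the forecast spans ten annual compounding periods (neither is stated precisely in the study summary), the implied 2019 market size can be back-calculated:

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert compound growth: end = start * (1 + cagr) ** years."""
    return end_value / (1 + cagr) ** years


start_2019 = implied_start_value(900, 0.18, 10)   # US$ Bn
print(round(start_2019))  # 172
```

Because the forecast says the market "surpasses" US$900 Bn, roughly US$172 Bn is best read as an implied order of magnitude under these assumptions, not a reported figure.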

Challenges and opportunities

Some factors still hinder an even geographical adoption of smart city technologies: the lack of existing infrastructure and the high cost of replacing it with smart infrastructure mean that the Middle East and Africa region and Latin America lag behind North America, which is still the dominant region, currently capturing over 33% of the market share.

Due to its growing population, high rates of digital technology adoption, and high demand for smart solutions, the Asia Pacific region appears to show great potential for growth within the same forecast period (2019-2029).

Moreover, in the field of smart applications, security solutions are forecasted to grow by 22% during the same period.

Information is Power

Our reader Bent Mathiesen, an IT expert passionate about AI, gives his opinion on the risks of misusing AI to concentrate power, fight wars and create super-powerful technologies beyond our control.

Artificial Intelligence

Lots of benefits come with AI and many smart people are working hard to achieve challenging goals to improve our society. But AI is also a powerful technology and the way we use/misuse it could put our present and future in great danger.

Immediate dangers

AI has been deployed everywhere. Some people understand the implications of that, but many unfortunately don't. Governments and big companies have gathered a great amount of information on people and on the whole world. Those in power have already used AI to change the political and economic landscape. Think of George Orwell's 1984. People give their information away, and every day they are monitored everywhere. That is fine when it is meant to create smart cities and optimize traffic flow. But already now, corrupt people in power can use such information to weaken and eliminate opponents.

The world can't agree on a common political, economic and social direction. And now AI is deployed in these conflicts, and the work behind the scenes is fierce. AI has been used in the stock market for a long time, and now it is a major part of companies' marketing strategies to get people's attention and convince them to buy and provide personal information. People fear for their jobs, and there is a reason for it. The introduction of intelligent machines is a threat to employees who risk losing their jobs and identity, fueling the ongoing system.

Fighting wars with AI

For a long time, governments have been working intensively on new war capabilities. We have seen 100% automated drones, but many new weapons are already in the pipeline. Information is power. Gathering information for warfare is not new, but fully automatic weapons attacking you through the network infrastructure, or small automatic physical weapons, are no longer sci-fi.

The present and the future of AI

Creating a smart AI is certainly possible, and a super-smart AI is also feasible. Different models of human-like AI have already been developed, but they are far from being human. Already now, narrow AI is driving all apps and websites. Some groups of people work on the ethical aspects of AI and how to make it secure. But the train has left!

Some believe that strong AI will solve our problems. Are we really sure? Already today, very smart people are warning us, but they are ignored. If you think the world is complicated with 8 billion people, imagine how it will be with millions or billions of General Artificial Intelligence (GAI) systems developing their own skills depending on the input and path they take…

Bent Mathiesen

UK Engineering Companies Are Not Taking Advantage of R&D Funding

Up to £10 billion may go unclaimed

Artificial Intelligence, Industry 4.0

MPA commissioned a study for the Advanced Engineering Show to investigate the current situation in the UK regarding engineering companies and R&D funding. The results showed that 21% of engineering firms actively engaged in innovation are not taking advantage of the UK government's R&D Tax Credit scheme. This existing tax scheme allows companies to claim back up to 33p for every £1 spent on R&D. Since there are over 100,000 engineering companies in the UK, each investing an average of £386,000 per year in R&D, the hypothetical sum of unclaimed funding would amount to over £10.2 billion.
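The headline figures can be combined as a quick sanity check. This sketch only reproduces the upper bound implied by the numbers quoted; the study's own method for reaching £10.2 billion is not given in the article, so the gap between the two is not explained here.

```python
engineering_firms = 100_000
avg_rd_spend_gbp = 386_000        # per firm, per year
max_credit_per_pound = 0.33       # "up to 33p for every £1"

total_rd_spend = engineering_firms * avg_rd_spend_gbp   # £38.6bn
ceiling = total_rd_spend * max_credit_per_pound         # upper bound on claims

print(f"Annual R&D spend: £{total_rd_spend / 1e9:.1f}bn")   # £38.6bn
print(f"Ceiling at 33p per £1: £{ceiling / 1e9:.1f}bn")    # £12.7bn
```

The quoted £10.2 billion sits below this theoretical ceiling of about £12.7 billion, as any estimate of actually unclaimed relief should.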

Spreading awareness

Among the reasons participants gave for not applying, the most common was that engineers did not believe their companies were eligible for such funding (10% of respondents), even though 67% of them perceived their firm as innovation active. Qualifying activities include improving processes in terms of efficiency, quality or performance, resolving unplanned technical difficulties, or creating bespoke solutions for clients.

The main finding was that more than a fifth of the surveyed engineers (21%) were not aware that the scheme existed. Even among the companies perceived as innovation active by their own employees, a substantial 7% did not know about the scheme.

Together with ACCIONA, Ennomotive Launched I’MNOVATION

Within the open innovation initiative, the proposals will focus on digital solutions in Chile

Artificial Intelligence, Automation, Industry 4.0

ACCIONA, a multinational company dedicated to providing sustainable infrastructures and renewable energy, and ennomotive launched the I’MNOVATION initiative in Chile, designed to encourage startups to develop innovative digital solutions.

Specifically, ACCIONA has outlined three sectoral challenges of interest: the mining sector, renewable energies, and smart cities.

Innovating mining, energy, and urban logistics

The deadline for submission of proposals is October 28th.

The best proposal for each challenge will be awarded US$25,000 to conduct a three-month pilot in Chile. ACCIONA will also consider the possibility of scaling up the startups' solutions nationally and internationally.

When Machine Learning Meets the Real World

FPGAs offer the configurability needed for real-time machine-learning inference, with the flexibility to adapt to future workloads. Making these advantages accessible to data scientists and developers calls for tools that are comprehensive and easy to use.

Artificial Intelligence

Introduction: Real-time Machine-Learning Inference

Machine learning is the force behind new services that leverage natural voice interaction and image recognition to deliver seamless social media or call-center experiences. Moreover, with their ability to identify patterns or exceptions in vast quantities of data related to large numbers of variables, trained deep-learning neural networks are also transforming the way we go about scientific research, financial planning, running smart-cities, programming industrial robots, and delivering digital business transformation through services such as digital twin and predictive maintenance.

Whether the trained networks are deployed for inference in the Cloud or in embedded systems at the network edge, most users’ expectations call for deterministic throughput and low latency. Achieving both simultaneously, within practicable size and power constraints, requires an efficient, massively parallel compute engine at the heart of a system architected to move data efficiently in and out. This requires features such as a flexible memory hierarchy and adaptable high-bandwidth interconnects.

Contrasting with these demands, the GPU-based engines typically used for training neural networks (which takes time and many teraFLOPS of compute cycles) have rigid interconnect structures and memory hierarchies that are not well suited to real-time inference. Problems such as data replication, cache misses, and blocking commonly occur. A more flexible and scalable architecture is needed to achieve satisfactory inferencing performance.

Leading Projects Leverage Configurability

Field Programmable Gate Arrays (FPGAs) that integrate optimized compute tiles, distributed local memory, and adaptable, non-blocking shared interconnects can overcome these traditional limitations to ensure deterministic throughput and low latency. Indeed, as machine-learning workloads become more demanding, cutting-edge machine-learning projects such as Microsoft's Project Brainwave are using FPGAs to execute real-time calculations cost-effectively and with extremely low latency that has proved unachievable using GPUs.

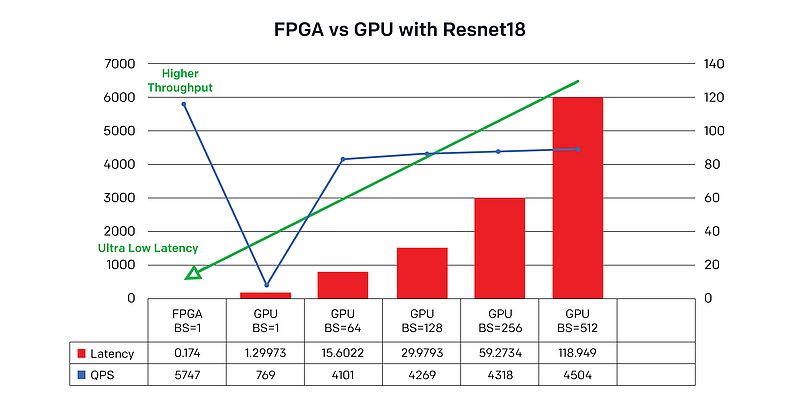

Another advanced machine-learning project, by global compute-services provider Alibaba Cloud, chose FPGAs as the foundation for a Deep Learning Processor (DLP) for image recognition and analysis. FPGAs enabled the DLP to combine low latency with high performance, which the company’s Infrastructure Service Group believes could not have been achieved using GPUs. Figure 1 shows results from the team’s analysis with a ResNet-18 deep residual network: the FPGA-based DLP achieves a latency of just 0.174 seconds, 86% faster than a comparable GPU case, and its throughput, measured in Queries Per Second (QPS), is more than seven times higher.

Because machine-learning frameworks tend to generate neural networks based on 32-bit floating-point arithmetic, Xilinx’s ML-Suite contains a quantizer tool that converts these networks into fixed-point equivalents better suited to implementation in an FPGA. The quantizer is part of a set of middleware, compilation and optimization tools, and a runtime, collectively called xfDNN, which ensure the neural network delivers the best possible performance in FPGA silicon.
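To make the float-to-fixed-point step more concrete, here is a minimal sketch of symmetric 8-bit quantization. It is not the ML-Suite quantizer or any part of xfDNN; the function names, the 8-bit width, and the single scale factor shared by all weights are illustrative assumptions.

```python
def quantize_symmetric(weights, bits=8):
    """Map float weights to signed integers that share one scale factor (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit signed values
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give a zero scale
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to the representable range
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_symmetric(weights)
approx = dequantize(q, scale)
max_err = max(abs(a - w) for a, w in zip(approx, weights))
print(q, scale, max_err)
```

Each weight ends up as a small integer plus one shared floating-point scale, and the rounding error per weight is at most half a scale step. Production quantizers typically also calibrate activation ranges on sample data, which this sketch leaves out.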

The ecosystem also leverages Xilinx’s acquisition of DeePhi Technology by using the DeePhi pruner to remove near-zero weights and to compress and simplify network layers. The DeePhi pruner has been shown to increase neural network speed by a factor of 10 and to significantly reduce system power consumption without harming overall performance or accuracy.
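The general idea behind removing near-zero weights can be sketched as magnitude-based pruning. This is not the DeePhi pruner; the threshold value and the function names are hypothetical, chosen only to show the principle.

```python
def prune_by_magnitude(weights, threshold):
    """Zero out every weight whose absolute value falls below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparsity(weights):
    """Fraction of weights that are exactly zero after pruning."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Hypothetical weights of one small layer
layer = [0.8, -0.01, 0.002, -0.6, 0.04, 0.0005]
pruned = prune_by_magnitude(layer, threshold=0.05)
print(pruned, sparsity(pruned))
```

In practice a pruned network is usually fine-tuned afterwards to recover accuracy, and the actual speed-up depends on whether the hardware and runtime can skip the zeroed weights.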

Reconfigurable Compute for Future Flexibility

In addition to the challenges associated with ensuring the required inferencing performance, developers deploying machine learning must also bear in mind that the entire technological landscape around machine learning and artificial intelligence is changing rapidly; today’s state-of-the-art neural networks could be quickly superseded by newer, faster networks that may not fit well with legacy hardware architectures.

At present, commercial machine-learning applications tend to be focused on image handling and object or feature recognition, which are best handled using convolutional neural networks. This could change in the future as developers leverage the power of machine learning to accelerate tasks such as sorting through strings or analyzing unconnected data.

FPGAs are known to provide the performance acceleration and future flexibility that machine-learning practitioners need, not only to build high-performing, efficient inference engines for immediate deployment, but also to adapt to rapid changes in both the technology and the market demands for machine learning. The challenge is to make the architectural advantages of FPGAs accessible to machine-learning specialists while helping to ensure the best-performing and most efficient implementation.

Xilinx’s ecosystem has combined state-of-the-art FPGA tools with convenient APIs to let developers take full advantage of the silicon without having to learn the finer points of FPGA design.

By Daniel Eaton, Senior Manager, Strategic Marketing Development at Xilinx

Combining Deep learning and Machine Vision for Next Generation Inspection

Machine vision and deep learning can give companies a powerful technology to maximize their investments. Understanding the differences between traditional machine vision and deep learning, and how these technologies complement each other, is fundamental.


Over the last decade, technology has changed and improved in many areas: device mobility, big data, artificial intelligence (AI), the internet of things, robotics, blockchain, 3D printing, and machine vision. In all these domains, novel developments have come out of R&D labs to improve our daily lives.

Engineers like to adopt technologies and adapt them to their demanding environments and constraints. Planning strategically to adopt and leverage some or all of these technologies will be crucial for the manufacturing industry.

Let’s focus here on AI, and specifically on deep learning-based image analysis, or example-based machine vision. Combined with traditional rule-based machine vision, it can help robotic assemblers identify the correct parts, detect whether a part is present, missing, or improperly assembled on the product, and determine more quickly whether these are genuine problems. And it can do so with high precision.

How does deep learning complement machine vision?

A machine vision system relies on a digital sensor placed inside an industrial camera with specific optics. The camera acquires images, which are fed to a PC. Specialized software then processes and analyzes the images and measures various characteristics for decision making. Machine vision systems perform reliably with consistent, well-manufactured parts. They operate via step-by-step filtering and rule-based algorithms.

On a production line, a rule-based machine vision system can inspect hundreds, or even thousands, of parts per minute with high accuracy, making it more cost-effective than human inspection. Its interpretation of the visual data follows a programmatic, rule-based approach to solving inspection problems.

On a factory floor, traditional rule-based machine vision is ideal for:

- Guidance (position, orientation…).

- Identification (barcodes, data-matrix codes, marks, characters…).

- Gauging (comparison of distances with specified values…).

- Inspection (flaws and other problems, such as a missing safety seal or a broken part…).
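Two of the rule-based tasks listed above can be sketched in a few lines: a presence test that counts dark pixels in a region of interest, and a gauging test that compares a measured distance with a specified value. The helper names, the grey-level threshold, and the millimetre-per-pixel calibration factor are hypothetical; a real system would rely on a dedicated vision library and calibrated optics.

```python
import math


def part_present(image, roi, dark_level=100, min_fraction=0.5):
    """Presence check: True if enough pixels in the ROI are darker than dark_level.

    image is a 2D list of 8-bit grey values; roi is (row_start, row_end, col_start, col_end)
    with exclusive end indices. Both thresholds are hypothetical defaults.
    """
    r0, r1, c0, c1 = roi
    pixels = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    dark = sum(1 for p in pixels if p < dark_level)
    return dark / len(pixels) >= min_fraction


def within_tolerance(p1, p2, nominal_mm, tol_mm, mm_per_px):
    """Gauging check: compare the distance between two feature points with a specified value."""
    dist_mm = math.dist(p1, p2) * mm_per_px  # convert the pixel distance to millimetres
    return abs(dist_mm - nominal_mm) <= tol_mm


# Hypothetical 4x4 grey-level image with a dark part in the centre
image = [
    [255, 255, 255, 255],
    [255, 20, 30, 255],
    [255, 25, 40, 255],
    [255, 255, 255, 255],
]
print(part_present(image, (1, 3, 1, 3)))
print(within_tolerance((0, 0), (30, 40), nominal_mm=5.0, tol_mm=0.1, mm_per_px=0.1))
```

Every threshold here is an explicit rule chosen by a programmer, which is what makes this approach reliable for consistent parts and brittle when part appearance varies.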

Rule-based machine vision is great with a known set of variables: Is a part present or absent? Exactly how far apart is this object from that one? Where does this robot need to pick up this part? These jobs are easy to deploy on the assembly line in a controlled environment. But what happens when things aren’t so clear cut?

This is where deep learning enters the game:

- Solve vision applications too difficult to program with rule-based algorithms.

- Handle confusing backgrounds and variations in part appearance.

- Maintain applications and re-train with new image data on the factory floor.

- Adapt to new examples without re-programming core networks.

Inspecting visually similar parts with complex surface textures and variations in appearance is a serious challenge for traditional rule-based machine vision systems. “Functional” defects, which affect a part’s utility, are almost always rejected, but “cosmetic” anomalies may not be, depending on the manufacturer’s needs and preferences. Moreover, a traditional machine vision system has difficulty distinguishing between these two kinds of defect.

Deep learning’s benefits for industrial manufacturing

Rule-based machine vision and deep learning-based image analysis complement each other rather than being an either/or choice when adopting next-generation factory automation tools. In some applications, such as measurement, rule-based machine vision will remain the preferred and most cost-effective choice. For complex inspections involving wide deviations and unpredictable defects, which are too numerous and complicated to program and maintain within a traditional machine vision system, deep learning-based tools offer an excellent alternative.

AI Convention Europe and IoT & Mobility: Facts and Figures

The two conferences, running in parallel at Event Lounge in Brussels, presented a rich lineup of topics addressing the latest challenges in AI and IoT, confirming the quality of the program and of the selected audience.

The coupled conferences for AI and IoT professionals – AI Convention Europe and IoT & Mobility – took place on Thursday 3rd October at Event Lounge in Brussels. For the first time, the organizers TIMGlobal Media – publisher of the European B2B industrial magazine IEN Europe – and Mark-Com Event have highlighted the strong link existing today between AI and IoT by running the two events in parallel.

With more than 200 pre-registrations and almost 150 qualified participants, AI Convention Europe and IoT & Mobility presented a rich lineup of 16 speakers who took the floor during the day, giving an overview of AI and IoT from different angles.

The conferences were opened by Sara Ibrahim, Field Editor IEN Europe Network, and moderated by Maryse Colson, Chief Marketing Officer at digazu, for AI Convention Europe, and Marc Husquinet, journalist specialized in IT, for IoT & Mobility.

The impact of AI on interpreting “X” data

AI and the value of trust, the smart factory, the impact of AI on the society of the future, AI and gender equality, growing sales with AI: these are just some of the topics presented at the convention to give the audience the broadest possible perspective on different AI scenarios and use cases. “AI will change everything in 10 years’ time,” said Patrice Latinne, Partner at Ernst & Young Belgium, in his opening speech entitled “How do you teach AI the value of trust? Which cultural and technical attributes are practically required along the development lifecycle of AI systems”.

Customer experience is at the center of the evolution of AI. “We are in an experience economy, and it’s fundamental to capture and to interpret in the right way the experiences that we deliver as companies to our customers, employees, business partners, suppliers. Today the brand is the experience and the experience makes the brand. So it’s all about how we correlate all the ‘X’ data, the experience data, with the operational data. This is possible by evolving to an intelligent enterprise,” said Kenneth Stevens, Global Head of Platform & Technologies Presales at SAP.

5G leading the digital transformation

The growth in Internet energy consumption, connectivity, 5G and the digital transformation were at the center of the IoT & Mobility conference. “The growth in Internet energy consumption is impacting IoT,” declared David Bol, Assistant Professor at the Catholic University of Louvain, in his opening session questioning IoT and sustainability.

“5G will transform industrial manufacturing. If we talk about 5G, we talk about connectivity, digital transformation and, substantially, about how companies must innovate to improve productivity. 5G is at the heart of the company of the future. We conducted a global study on this topic that demonstrates that 44% of the companies are not happy with their connectivity and 75% consider 5G one of the key technologies that will matter for them in the future,” Frederic Vander Sande, Strategy & Transformation Expert at Capgemini, explained.

Sponsors and partners

AI Convention Europe and IoT & Mobility want to thank all the sponsors and partners that made the events possible: KappaData, UCL Louvain, Capgemini, SAP, timi, Ernst & Young, icity.brussels, Agoria, AI4Belgium, ercogener, Hitachi, bedigital.brussels, software.brussels, Semtech, digital.security.

AI Convention Europe and IoT & Mobility will return next year on 22 October 2020. Don’t miss the next edition!

For more information: ai-convention.com and iot-mobility.com

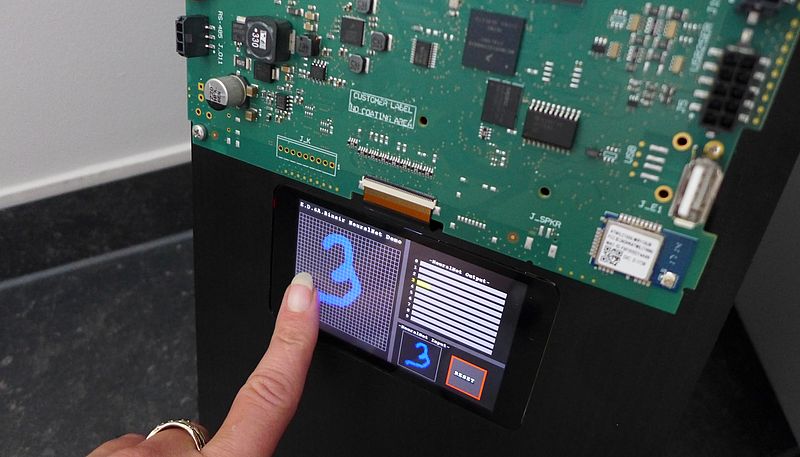

AI Implementation through Neural Networks

From pattern recognition to smart systems


Because neural networks (including binary ones) are good at recognizing patterns, they can be a suitable solution for problems that call for thinking outside the box. E.D.&A. investigated how well a neural network can be trained, for example for a hob ventilation system whose electronic controller is operated using capacitive touch sensors. These sensors sit under a thick sheet of glass and are also exposed to moisture, dirt, and interference from the induction hob itself. E.D.&A. therefore trained the neural network to recognize the right signal (switch on) among all the others. The required training examples were obtained with an artificial finger that operated the sensor using compressed air.
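The source does not describe E.D.&A.’s network or its training data. As a hedged illustration of the underlying idea, supervised training on labelled examples, the sketch below trains a single-neuron perceptron (a far simpler model than a full neural network) on made-up two-feature samples. The features (a normalised signal amplitude and duration), their values, and the function names are all hypothetical.

```python
def predict(w, b, x):
    """Fire (1) if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Classic perceptron learning rule: nudge the weights toward misclassified examples."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = y - predict(w, b, x)  # 0 if correct, +1 or -1 if wrong
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


# Made-up samples: (normalised signal amplitude, normalised duration)
samples = [(0.9, 0.8), (0.8, 0.9), (0.85, 0.7),   # labelled "switch on" (1)
           (0.2, 0.1), (0.3, 0.05), (0.1, 0.3)]   # labelled noise (0)
labels = [1, 1, 1, 0, 0, 0]
w, b = train_perceptron(samples, labels)
print(w, b, [predict(w, b, x) for x in samples])
```

Because this toy data is linearly separable, the perceptron settles after a few passes; a real touch controller facing moisture, dirt, and induction interference would need richer input features and a multi-layer network.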

Prediction against actual behavior

The collection of data could be automated. In addition, E.D.&A. evaluated the binary variant of neural networks for use with its electronic controllers (microcontrollers). The electronics manufacturer implemented a demo of its own embedded AI framework for machine and device manufacturers. In the demo, the user draws a digit from 0 to 9 on a touchscreen; in the background, the drawing is passed to a binary neural network, which works out the intended digit and displays the result. These examples show how machines and devices can be made “smart” by training an artificial neural network.
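The source does not describe how E.D.&A.’s framework computes its binary layers. A widely used trick in binary neural networks (BNNs) is that, when weights and activations are restricted to +1 and -1, a dot product reduces to an XNOR followed by a bit count: dot = 2 × (number of matching signs) - n. The sketch below checks that identity; the bit-packing convention and the function names are illustrative assumptions.

```python
def to_bits(values):
    """Pack a vector of +1/-1 values into an integer bit mask (+1 -> 1, -1 -> 0)."""
    mask = 0
    for i, v in enumerate(values):
        if v == 1:
            mask |= 1 << i
    return mask


def binary_dot(a_bits, w_bits, n):
    """Dot product of two +1/-1 vectors of length n using XNOR and popcount."""
    xnor = ~(a_bits ^ w_bits) & ((1 << n) - 1)  # bit is 1 where the signs agree
    matches = bin(xnor).count("1")
    return 2 * matches - n  # agreements minus disagreements


def binary_neuron(inputs, weights):
    """Sign activation of the binary dot product (+1 if the sum is >= 0, else -1)."""
    s = binary_dot(to_bits(inputs), to_bits(weights), len(inputs))
    return 1 if s >= 0 else -1


a = [1, -1, 1, 1]
w = [1, 1, -1, 1]
# The XNOR/popcount result should equal the ordinary multiply-accumulate result
print(binary_dot(to_bits(a), to_bits(w), len(a)), sum(x * y for x, y in zip(a, w)))
```

In a full BNN this operation replaces the multiply-accumulate in every layer, which is one reason binary networks are attractive on small microcontrollers that lack fast floating-point hardware.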

TIMGlobal Media BV

Rue de Stalle 140 - 3ième étage - 1180 Uccle - Belgium

o.erenberk@tim-europe.com - www.ien.eu

- Editorial Director: Orhan Erenberk, o.erenberk@tim-europe.com

- Editor: Kay Petermann, k.Petermann@tim-europe.com

- Editorial Support / Energy Efficiency: Flavio Steinbach, f.steinbach@tim-europe.com

- Associate Publisher: Marco Marangoni, m.marangoni@tim-europe.com

- Production & Order Administration: Francesca Lorini, f.lorini@tim-europe.com

- Website & Newsletter: Marco Prinari, m.prinari@tim-europe.com

- Marketing Manager: Marco Prinari, m.prinari@tim-europe.com

- President: Orhan Erenberk, o.erenberk@tim-europe.com

Advertising Sales

Tel: +41 41 850 44 24

Tel: +32-(0)11-224397

Fax: +32-(0)11-224397

Tel: +33 1 842 00 300

Tel: +49-(0)9771-1779007

Tel: +39-02-7030 0088

Turkey

Tel: +90 (0) 212 366 02 76

Tel: +44 (0)79 70 61 29 95

John Murphy

Tel: +1 616 682 4790

Fax: +1 616 682 4791

Incom Co. Ltd

Tel: +81-(0)3-3260-7871

Fax: +81-(0)3-3260-7833