Quick access

News: 5G Commercial Development & Frugal AI
News: Eurotech & IBM
AI Research
Application: Smart Sensors
Article: The value of engineering in critical times
Interview: Bonfiglioli
Article: Blockchain and Machine-as-a-Service
Focus: Spindle Control with AI
High-end CPU & Robot Controller
Visual Inspection, Server and Embedded PC
IoT Products & Solutions
New Cobot MELFA ASSISTA
Index
Contacts

Companies in this issue

ADLink Technology GmbH
Analog Devices GmbH
AnotherBrain
Axiomtek Co. Ltd. (UK)
Bonfiglioli Riduttori Spa
Ericsson AB
EuroTech SpA
Exoligent
ICP Deutschland GmbH
Karlsruhe Institute of Technology - Institute for Anthropomatics and Robotics (IAR)
Mitsubishi Electric Europe B.V.
Nidec Leroy Somer

Samsung and Xilinx Team Up for Worldwide 5G Commercial Deployments

Samsung Electronics will use the Xilinx® Versal™ adaptive compute acceleration platform to increase 5G performance and accelerate its leadership position in the global market

Artificial Intelligence, Industry 4.0

Xilinx has recently announced that the Xilinx® Versal™ adaptive compute acceleration platform (ACAP) will be utilized by Samsung Electronics for worldwide 5G commercial deployments. Xilinx Versal ACAPs provide a universal, flexible and scalable platform that can address multiple operator requirements across multiple geographies.

“Samsung has been working closely with Xilinx, paving the way for enhancing our 5G technical leadership and opening up a new era in 5G,” said Jaeho Jeon, executive vice president and head of R&D, Networks business, Samsung Electronics. “Taking a step further by applying Xilinx’s new advanced platform to our solutions, we expect to increase 5G performance and accelerate our leadership position in the global market.”

Operating at the heart of 5G

Versal ACAP – a highly integrated, multicore, heterogeneous compute platform – operates at the heart of 5G to perform the complex, real-time signal processing, including the sophisticated beamforming techniques used to increase network capacity. With 5G infrastructure requirements and industry specifications still evolving, there is a need for the kind of adaptive computing Xilinx is known for.

5G relies on beamforming, which allows multiple data streams to be transmitted simultaneously to multiple users over the same spectrum. This is what enables the dramatic increase in 5G network capacity. Beamforming, however, requires significant compute density and advanced high-speed connectivity, both on-chip and off-chip, to meet 5G’s low-latency requirements. In addition, differing requirements for partitioning system functions, together with different algorithm implementations, lead to a wide range of demands on processing performance and compute precision. It is extremely challenging for traditional FPGAs to meet these demands optimally while also respecting thermal and system-footprint constraints.
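
To make the idea of serving several users on the same spectrum more concrete, here is a minimal sketch of textbook phase-steering (conventional) beamforming in Python. It assumes a uniform linear array of 8 antennas at half-wavelength spacing and narrowband plane waves; the function names and angles are hypothetical, and this is not Samsung’s or Xilinx’s implementation. Each user’s stream gets its own weight vector, so the array adds up coherently toward that user while largely cancelling toward the other user on the same frequency.

```python
import cmath
import math


def steering_weights(n_elements, spacing_wl, steer_deg):
    """Phase-only weights that point a uniform linear array toward steer_deg.

    spacing_wl is the antenna spacing in wavelengths (0.5 = half a wavelength).
    """
    step = 2 * math.pi * spacing_wl * math.sin(math.radians(steer_deg))
    return [cmath.exp(-1j * k * step) for k in range(n_elements)]


def array_gain(weights, spacing_wl, arrival_deg):
    """Magnitude of the array response for a narrowband plane wave from arrival_deg."""
    step = 2 * math.pi * spacing_wl * math.sin(math.radians(arrival_deg))
    return abs(sum(w * cmath.exp(1j * k * step) for k, w in enumerate(weights)))


# Two users share the same frequency but sit at different angles (hypothetical values).
N = 8
beam_a = steering_weights(N, 0.5, 20.0)   # weights for user A's stream (+20 degrees)
beam_b = steering_weights(N, 0.5, -30.0)  # weights for user B's stream (-30 degrees)

for name, beam in (("A", beam_a), ("B", beam_b)):
    toward_a = array_gain(beam, 0.5, 20.0)
    toward_b = array_gain(beam, 0.5, -30.0)
    print(f"beam {name}: gain toward A = {toward_a:.2f}, toward B = {toward_b:.2f}")
```

Each beam reaches its own user with the full gain of N and only a small fraction of that toward the other user. Even this toy version needs one complex multiply per antenna, per sample and per beam; with many antennas, many beams and wide bandwidths, that workload grows quickly, which is the compute-density challenge described for beamforming.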

Real-time, low-latency signal processing

Versal ACAPs offer exceptional compute density at low power consumption to perform the real-time, low-latency signal processing demanded by beamforming algorithms. The AI Engines, part of the Versal AI Core series, consist of a tiled array of vector processors. This makes them ideal for implementing the required mathematical functions: they offer high compute density and advanced connectivity, and they can be reprogrammed and reconfigured even after deployment.

“Samsung is a trailblazer when it comes to 5G innovation and we are excited to play an essential role in its 5G commercial deployments,” said Liam Madden, executive vice president and general manager, Wired and Wireless Group, Xilinx. “Versal ACAPs will provide Samsung with the superior signal processing performance and adaptability needed to deliver an exceptional 5G experience to its customers now and into the future.”

The first Versal ACAP devices have been shipping to early access customers and will be generally available in the Q4 2020 timeframe.

AnotherBrain Accelerates its Development in 2020 to Deliver New AI Solutions Based on New Approaches

Singular approach: an AI that is self-learning and frugal in data and energy

Artificial Intelligence

In this uncertain period, when everything is being challenged, singular approaches can bring new, faster, more efficient and truly intelligent solutions. AnotherBrain is redoubling its efforts to bring its technology to market. All eyes are on AI, which has a crucial role to play in meeting the challenges of tomorrow.

AnotherBrain is developing a new generation of artificial intelligence based on a singular approach. Modeled closely on how the human brain works, its technology goes beyond current mainstream AI: it is self-learning and frugal in data and energy.

Delivered today as software, the technology will soon be available on an AI chip, as a frugal and efficient ASIC. AnotherBrain is expanding quickly and has deep European roots, reflected in its respect for privacy and its focus on human-friendly, explainable AI. This year marks a major turning point in AnotherBrain’s development as it deploys its singular proprietary technology. Having concluded its Series A fundraising in March and entered the French Tech 120 program dedicated to the most promising French startups, AnotherBrain is taking steps to maximize its chances of success.

AnotherBrain, laureate of the French Tech 120

In 2020, AnotherBrain is joining the first FT120 cohort, a prestigious flagship program launched by the French government for growth-stage companies with strong potential for global leadership. The French Tech 120 label offers unprecedented support to fast-growing young French companies, to accelerate their development and turn them into international leaders. French and European digital sovereignty is, more than ever, both a challenge and a necessity for creating opportunities and remaining independent. As the only FT120 company working on a proprietary AI, AnotherBrain brings a solution and is a real asset in meeting this challenge.

Recruiting to foster AnotherBrain’s growth

The company is reinforcing its R&D and industrial deployment teams, notably to roll out existing AI solutions for the industrial automation (including quality control for Industry 4.0), smart city, automotive and IoT markets. AnotherBrain has already signed contracts with Fortune 100 companies operating in those fields.

“The cards have been reshuffled. The global tornado we find ourselves in will generate important changes, such as reindustrialization in high-wage countries and the need for new materials, new molecules and more intelligent solutions. This is why we decided to take those changes as opportunities. To rise to them, we must prepare now. Expectations towards artificial intelligence are very high. The world needs, more than ever, a truly efficient AI to adapt to new paradigms. AnotherBrain has a powerful and very singular technology which may help bring solutions where others cannot. Thus, we decided to use our technology to see if we could be of any help in molecule synthesis. Humbly but proactively: we dedicated a bright team to this goal. Finally, in these troubled times companies have to be careful with expenses, and we are; but when the lockdown ends, activity will rebound, and we have to be ready. For all those reasons, we’re still looking for bright people,” said Bruno Maisonnier, founder & CEO of AnotherBrain.

AnotherBrain aims to double its workforce, to reach up to 65 employees by the end of the year.

EuroTech enters IBM Edge Ecosystem

Edge analytics built with IBM Cloud Pak for Data and deployed on Eurotech’s rugged edge computers using IBM Edge Application Manager enable AI-supported business decisions in the field, far outside the data center

Industry 4.0, Artificial Intelligence

EuroTech, a leading provider of rugged and industrial-grade embedded boards and systems, Edge computing platforms and Internet of Things (IoT) enablement solutions, announced its participation in the IBM Edge Ecosystem (including Data & AI) from multi-cloud to Edge.

IBM’s new edge computing and telco network solutions, powered by Red Hat technology, offer an open innovation platform that brings an ecosystem of partners together to co-create and innovate at the edge on a global scale. IBM’s Cloud Pak solutions allow enterprises and telcos to develop new containerized solutions that can be built once and deployed to run anywhere. IBM Edge Application Manager is an autonomous management solution designed to let AI, analytics and IoT workloads be deployed and managed remotely, delivering real-time analysis and insight at scale; it can now also be used with Eurotech devices.


"The convergence of 5G and edge computing is set to spark new levels of innovation,” said Evaristus Mainsah, general manager, IBM Cloud Pak Ecosystem, “and this in turn can enable and fuel a broad ecosystem of providers to co-create for a growing set of edge opportunities. Our collaboration with Eurotech can bring value to our joint clients needing high-performance rugged edge systems to run edge analytics and apps. Furthermore, the combination of Eurotech's Everyware Software Framework (ESF) with the IBM Edge Application Manager enabled on Eurotech devices opens access to IoT data for data scientists and DevOps teams, enabling them to reimagine how to build, deploy and manage AI-enabled edge applications at scale."

Eurotech’s rugged, high-performance computer systems are fanless, sealed compute platforms designed for harsh environments and for demanding applications as diverse as transportation (including autonomous vehicles), manufacturing, collaborative robots, security & surveillance, and railways. Whether they are edge computing systems, IoT gateway products or platforms built on Eurotech’s HPC technology, these highly reliable systems are passively or warm-water cooled and come with a wide range of CPU, hardware acceleration (GPUs & AI), communication, GPS and storage options to address different edge computing needs.

A vision to bring OT and IT into a software defined, open standards-based ecosystem approach

Edge computing solutions, especially the IoT gateway products, are offered in combination with Eurotech’s IoT edge middleware ESF (Everyware Software Framework), a feature-rich IoT edge framework that scales from embedded Linux devices to powerful edge servers, where ESF runs as a virtual gateway in a container. To ease development on edge devices, ESF provides flexible device-twin modelling over the leading fieldbus protocols across multiple vertical industries. Edge developers can leverage the rich IoT middleware functionality through powerful APIs, deploy full-fledged applications running natively in the container, or use ESF Wires to visually compose data pipelines for their edge devices.

“We appreciate the opportunity to extend our long-lasting collaboration with IBM, joining an ecosystem to effectively enable vital enterprise technology like advanced analytics at the edge, in the world of Operational Technology (OT),” commented Robert Andres, Chief Strategy Officer at Eurotech. “The integration of IBM Cloud Pak for Data to build and train analytics models that IBM Edge Application Manager can deploy onto Eurotech’s Edge Computing Hardware, including our High-Performance Edge Computers, significantly lowers the barrier of entry for customers that need to leverage computational power for AI and analytics applications in challenging environments.”

Eurotech and IBM believe in and share a vision to bring OT and IT into a software defined, open standards-based ecosystem approach, aiming to contribute heavily to open source and open standards.

''Artificial Intelligence Helps Simplify Telecommunications''

AI research in telecommunications: Interview with Dr. Elena Fersman, Director of Artificial Intelligence Research at Ericsson

Automation, Artificial Intelligence

What does it mean to conduct research in AI for a telecommunications company? For Dr. Elena Fersman, Director of Artificial Intelligence Research at Ericsson, it means tackling relevant problems by simplifying processes and creating new algorithms, without putting ethics aside.

IEN Europe: How long has Ericsson been conducting research in AI?

E. Fersman: Ericsson has been doing research in AI for more than ten years. When I started heading up AI research at Ericsson, five years ago, I led several teams, which then grew bigger. Now, Ericsson has a unique AI research group, made up of a lot of people working on many different technologies.

Personally, I’ve been working on AI for my entire career, since university. Before being appointed Director of Artificial Intelligence Research, I held different positions at Ericsson. The last few years marked a significant change in AI research strategy, but it is only in the past two years that AI has really taken hold on a large scale in the telecommunications industry.

Ericsson has a huge portfolio of products and services, but in the last two years it has become more and more AI-centric. We are preparing all the software architectures to make them ready for AI and many other exciting projects are on the way.

IEN Europe: What does it mean to conduct research in AI for a company like Ericsson?

E. Fersman: AI research has completely changed over the last ten years. Ten years ago, we didn’t have so much data, or the data was there but we didn’t use it for our AI applications. Now we have, if anything, too much data, and doing research in this kind of environment is very exciting. At the beginning, you can use simple methods and analytics and try some learning methods. Then you move on to more sophisticated methods, like vanilla machine learning methods, and you keep refining your research methods along the way.

In these ten years of research, we’ve always tried to advance and build on our own work. For me, the core of our research activity is the ability to adapt AI to our own business context. Research in AI is not a theoretical exercise. It’s always something practical, based on finding advanced problem-solving solutions and methods. Another critical point is being able to create our own algorithms.

This is a very simplified view of how I conceive of and work on AI research. I always tell my research team that we are not an organization that simply takes AI algorithms off the shelf and applies them. We develop new AI algorithms, always with relevance in mind.

IEN Europe: What does it take to develop new algorithms?

E. Fersman: It’s really a big machine. We have a lot of researchers, and even before we start, we always work with our stakeholders, customers, and business units to establish a relevant context. A purely theoretical exercise will not help to create a good algorithm. So there is a constant chain of feedback coming to us. We always ask ourselves if we are on the right track.

It also takes a mix of competences. In the academic environment, quite often you dig into one domain and then you become an expert, but this doesn’t work in the industrial setting. Here, it’s always a matter of mixing different competences, putting together people with different experiences and backgrounds.

IEN Europe: What do you mean by ''AI helps make complex things simpler''?

E. Fersman: Communication means simplification. That’s what drives a company like Ericsson. AI helps simplify telecommunications. If you are an engineer and you need to solve a problem, either you are alone without any support from the algorithms, or you do have your support system, which gives you some recommendations. This is a simplification for an engineer. We do the same for our customers, helping them make network slicing easier by using algorithms and suggestion systems. This can be applied to many contexts and it’s a real model for simplification.

IEN Europe: Which are the main application areas for AI within Ericsson?

E. Fersman: AI is spread everywhere across our business units and our portfolio of products and services. We even use it internally. But we identify three main areas of deployment: 1) AI for boosting automation, 2) AI to optimize Ericsson’s products and services, and 3) AI to power new businesses and telecoms.

Zooming in, we aim to optimize network performance, customer experience, efficient operations, virtualization and network slicing, energy efficiency, and infrastructure utilization. These are our six major blocks.

IEN Europe: How is it possible to make good use of Big Data, without forgetting the value of Small Data, for a big telecommunications company today?

E. Fersman: At Ericsson, we have a data strategy and our own data lake, and we have a very strong security and privacy framework. We have a lot of internal data coming from our own equipment. We use data lake infrastructures for big data, but it’s also very important to find small data. We run operations for a lot of mobile telecom operators, which translates into more than one billion subscribers in our networks. When you run such a huge operation, you need to detect the anomalies or failures that can happen, and monitoring small data is essential for this purpose. Ericsson is committed to dedicating part of its research activity to monitoring and finding small data.

IEN Europe: What’s the interaction between 5G and AI?

E. Fersman: AI is just a central part of 5G in many cases, especially for signal and coverage optimization. There are algorithms in many parts of 5G network systems, but there is also an overarching strategy that controls all of them. 5G is extremely complex and cannot be optimized and simplified without AI.

IEN Europe: You mentioned once that ‘Diversity, balance, and inclusion are very important in AI’. How is it possible to develop ethical AI?

E. Fersman: I believe that ethics must come first when developing an algorithm for process optimization. If ethics is not taken care of from the beginning, the algorithm may misbehave. That’s why it’s essential to decide what we allow or don’t allow an algorithm to do at an early stage. This must be the framework.

Ericsson is committed to business ethics. We have chosen to follow the European Union’s Ethics Guidelines for Trustworthy AI, and we comply with them. As a next step, we need to digitalize these guidelines to make them machine-readable and enforceable on algorithms. That’s what we are currently doing at Ericsson. This is a huge opportunity to ensure that every algorithm you run complies with all the ethical guidelines. We are also putting a lot of effort into explainability. When an algorithm makes a decision for you, you must be able to ask why and understand the reason for this decision. This is very important in any AI application, including telecoms.

Sara Ibrahim

Smart Sensors: From Big Data to Smart Data with AI

By Dzianis Lukashevich, Director of Platforms and Solutions, Analog Devices, Inc., and Felix Sawo, CEO, Knowtion

Industry 4.0, Artificial Intelligence

Industry 4.0 applications generate a huge volume of complex data—big data. The increasing number of sensors and, in general, available data sources are making the virtual view of machines, systems, and processes ever more detailed. This naturally increases the potential for generating added value along the entire value chain. At the same time, however, the question as to how exactly this value can be extracted keeps arising—after all, the systems and architectures for data processing are becoming more and more complex. Only with relevant, high quality, and useful data—smart data—can the associated economic potential be unfolded.

Challenges

Collecting all possible data and storing them in the cloud in the hope that they will later be evaluated, analyzed, and structured is a widespread, but not particularly effective, approach to extracting value from data. The potential for generating added value from the data remains underused, and finding a solution later on becomes more complex. A better alternative is to determine early on what information is relevant to the application and where in the data flow that information can be extracted. Figuratively speaking, this means refining the data, that is, making smart data out of big data along the entire processing chain. A decision regarding which AI algorithms have a high probability of success for the individual processing steps can be made at the application level. This decision depends on boundary conditions such as the available data, application type, available sensor modalities, and background information about the lower level physical processes.

For the individual processing steps, correct handling and interpretation of the data are extremely important for real added value to be generated from the sensor signals. Depending on the application, it may be difficult to interpret the discrete sensor data correctly and extract the desired information. Temporal behavior often plays a role and has a direct effect on the desired information. In addition, the dependencies between multiple sensors must frequently be accounted for. For complex tasks, simple threshold values and manually determined logic or rules are no longer sufficient.

AI Algorithms

In contrast, data processing by means of AI algorithms enables the automated analysis of complex sensor data. Through this analysis, the desired information and, thus, added value are automatically arrived at from the data along the data processing chain. For model building, which is always a part of an AI algorithm, there are basically two different approaches.

One approach is modeling by means of formulas and explicit relationships between the data and the desired information. These approaches require the availability of physical background information in the form of a mathematical description. These so-called model-based approaches combine the sensor data with this background information to yield a more precise result for the desired information. The most widely known example here is the Kalman filter.
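
As an illustration of the model-based approach, the following is a minimal scalar Kalman filter in Python. The "background information" here is an assumed random-walk model of a slowly varying quantity, with process noise variance q and measurement noise variance r; the temperature value, noise levels and function name are hypothetical, and the article does not describe the authors’ own filter designs.

```python
import random


def kalman_1d(measurements, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a random-walk state.

    Model (assumed): x_k = x_{k-1} + w with w ~ N(0, q),
    observed as z_k = x_k + v with v ~ N(0, r).
    """
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the random-walk model keeps x and adds process noise to p.
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain k.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


random.seed(0)
true_temp = 42.0  # hypothetical constant temperature in degrees C
noisy = [true_temp + random.gauss(0.0, 2.0) for _ in range(200)]  # sensor noise std 2 C
est = kalman_1d(noisy, q=1e-4, r=4.0, x0=noisy[0])
print(f"last raw reading {noisy[-1]:.2f}, filtered estimate {est[-1]:.2f}")
```

The physical model is what lets the filter trust the prediction more than any single noisy reading; with a different process (for example, a known motion model), only the predict step would change.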

If data, but no background information that could be described in the form of mathematical equations, are available, then so-called data-driven approaches must be chosen. These algorithms extract the desired information directly from the data. They encompass the full range of machine learning methods, including linear regression, neural networks, random forest, and hidden Markov models.
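
For contrast, a data-driven model can be as simple as a straight line fitted by ordinary least squares, the linear regression named above. In this sketch the relationship is learned from example pairs alone, with no physical equation supplied; the speed and vibration values and the function name are invented for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b, learned purely from data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx               # slope
    b = mean_y - a * mean_x     # intercept
    return a, b


# Hypothetical training data: motor speed (rpm) vs. measured vibration RMS (g).
speed = [600, 900, 1200, 1500, 1800]
vib_rms = [0.11, 0.16, 0.21, 0.26, 0.31]

a, b = fit_line(speed, vib_rms)
predicted = a * 1350 + b  # estimate for a speed not present in the training data
print(f"slope={a:.6f} g/rpm, intercept={b:.3f} g, prediction at 1350 rpm={predicted:.3f} g")
```

Neural networks, random forests and hidden Markov models replace the straight line with far more flexible models, but the principle is the same: the parameters come from data rather than from a physical description.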

Selection of an AI method often depends on the existing knowledge about the application. If extensive specialized knowledge is available, AI plays a more supporting role and the algorithms used are quite rudimentary. If no expert knowledge exists, the AI algorithms used are much more complex. In many cases, it is the application that defines the hardware and, through this, the limitations for AI algorithms.

Embedded, Edge, or Cloud Implementation

The overall data processing chain, with all the algorithms needed in each individual step, must be implemented in such a way that the highest possible added value can be generated. Implementation usually spans all levels, from the small sensor with limited computing resources, through gateways and edge computers, to large cloud computers. Clearly, the algorithms should not be implemented at only one level. Rather, it is typically more advantageous to implement them as close as possible to the sensor. By doing so, the data are compressed and refined at an early stage, and communication and storage costs are reduced. In addition, through early extraction of the essential information from the data, development of global algorithms at the higher levels is less complex. In most cases, algorithms from the streaming analytics area are also useful for avoiding unnecessary storage of data and, thus, high data transfer and storage costs. These algorithms use each data point only once; that is, all the required information is extracted immediately, and the data do not need to be stored.
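
A classic example of a streaming algorithm that uses each data point only once is Welford’s online method for the running mean and variance. The sketch below keeps only three numbers in memory regardless of how many samples pass through, which is what makes this kind of computation practical on or near the sensor; the class name and sample values are hypothetical.

```python
class RunningStats:
    """Welford's online algorithm: mean and variance of a stream in O(1) memory.

    Each sample is folded in once and can then be discarded.
    """

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses both the old and the new mean

    @property
    def variance(self):
        """Sample variance (n - 1 in the denominator); 0.0 until two samples arrive."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


stats = RunningStats()
for sample in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:  # stand-in for a sensor stream
    stats.update(sample)
print(stats.n, stats.mean, stats.variance)
```

Welford’s update is preferred over accumulating the sum and the sum of squares because it avoids the cancellation errors that approach suffers on long streams with large offsets.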

Embedded Platform with AI Algorithms

ADI’s ADuCM4050, a microcontroller based on the ARM® Cortex®-M4F processor, is a power-saving integrated microcontroller system with on-chip power management as well as analog and digital peripherals for data acquisition, processing, control, and connectivity. This makes it a good candidate for local data processing and early refinement of data with state-of-the-art smart AI algorithms.

The EV-COG-AD4050LZ is an ultralow power development and evaluation platform for ADI’s complete sensor, microcontroller, and HF transceiver portfolio. The EV-GEAR-MEMS1Z shield was designed mainly, but not exclusively, for evaluating various MEMS technologies from ADI; for example, the ADXL35x series, including the ADXL355 used on this shield, offers superior vibration rectification, long-term repeatability, and low noise performance in a small form factor. Together, the EV-COG-AD4050LZ and EV-GEAR-MEMS1Z offer an entry point into structural health and machine condition monitoring based on vibration, noise, and temperature analysis. Other sensors can also be connected to the COG platform as required, so that the AI methods used can deliver a better estimate of the current situation through so-called multisensor data fusion. In this way, various operating and fault conditions can be classified with finer granularity and higher probability. Through smart signal processing on the COG platform, big data becomes smart data locally, so that only the data relevant to the application need to be sent to the edge or the cloud.
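
The article does not say how the COG platform fuses its sensors. As a minimal sketch of the underlying idea, assuming independent, unbiased measurements of the same quantity with known noise variances, inverse-variance weighting combines them into an estimate that is more certain than either input. This is estimation rather than the fault classification described above, and the readings, variances and function name are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    estimates: list of (value, variance) pairs. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical: the same temperature seen by a noisier sensor (variance 4.0)
# and a more precise one (variance 1.0).
fused_value, fused_var = fuse([(71.0, 4.0), (68.0, 1.0)])
print(f"fused value {fused_value:.2f}, fused variance {fused_var:.2f}")
```

The more precise sensor dominates the result, and the fused variance is smaller than that of either sensor alone, which is the basic reason adding modalities can improve an estimate.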

The COG platform contains additional shields for wireless communications. For example, the EV-COG-SMARTMESH1Z combines high reliability and robustness as well as extremely low power consumption with a 6LoWPAN and 802.15.4e communication protocol that addresses a large number of industrial applications. The SmartMesh® IP network is composed of a highly scalable, self-forming multihop mesh of wireless nodes that collect and relay data. A network manager monitors and manages the network performance and security and exchanges data with a host application.

Especially for wireless, battery-operated condition monitoring systems, embedded AI can realize the full added value. Local conversion of sensor data to smart data by the AI algorithms embedded in the ADuCM4050 results in lower data flow and consequently less power consumption than is the case with direct transmission of sensor data to the edge or the cloud.
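
To put numbers on "lower data flow", the sketch below reduces one window of raw vibration samples to three widely used condition-monitoring features: RMS, peak and crest factor (peak divided by RMS). The window length, the test signal and the function name are hypothetical, and these hand-picked features stand in for, rather than reproduce, the embedded AI algorithms mentioned in the text.

```python
import math


def window_features(samples):
    """Reduce one window of raw samples to three condition-monitoring features."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    peak = max(abs(s) for s in samples)
    crest = peak / rms if rms > 0 else 0.0
    return rms, peak, crest


# Hypothetical window: 1024 samples of a unit-amplitude sine (16 full periods).
window = [math.sin(2 * math.pi * 16 * k / 1024) for k in range(1024)]
rms, peak, crest = window_features(window)

raw_values = len(window)  # values the node would otherwise transmit
feature_values = 3        # values it transmits after local processing
reduction = raw_values / feature_values
print(f"rms={rms:.3f}, peak={peak:.3f}, crest={crest:.3f}, {reduction:.0f}x fewer values")
```

Sending 3 values instead of 1024 per window is roughly a 340-fold reduction before any further compression, in line with the lower data flow, and hence lower power consumption, described above.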

Applications

The COG development platform, including the AI algorithms developed for it, has a very wide range of applications in monitoring machines, systems, structures, and processes, extending from simple anomaly detection to complex fault diagnostics. With its integrated accelerometers, microphone, and temperature sensor, the platform enables, for example, monitoring of vibrations and noise from diverse industrial machines and systems. Process states, bearing or stator damage, failure of the control electronics, and even unknown changes in system behavior, for example, due to damage to the electronics, can be detected by embedded AI. If a predictive model is available for certain types of damage, that damage can even be predicted locally. Maintenance measures can then be taken at an early stage, avoiding unnecessary damage-related failures. If no predictive model exists, the COG platform can also help subject matter experts gradually learn the behavior of a machine and, over time, derive a comprehensive model of the machine for predictive maintenance.
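
Simple anomaly detection, the first case mentioned above, can be sketched by learning the normal range of one feature (for example the vibration RMS per window) from data recorded while the machine is known to be healthy, then flagging values that fall far outside it. The four-standard-deviation threshold, the class name and the data are assumptions for illustration; this is a statistical stand-in, not the embedded AI algorithms described in the article.

```python
import statistics


class BaselineDetector:
    """Learns the normal range of a feature from healthy data, then flags outliers."""

    def __init__(self, k=4.0):
        self.k = k  # how many standard deviations count as anomalous (assumed value)
        self.mean = None
        self.std = None

    def fit(self, healthy_values):
        # Needs at least two values so that the standard deviation is defined.
        self.mean = statistics.mean(healthy_values)
        self.std = statistics.stdev(healthy_values)

    def is_anomaly(self, value):
        return abs(value - self.mean) > self.k * self.std


# Hypothetical vibration RMS values (g) recorded while the machine ran normally.
healthy_rms = [0.20, 0.21, 0.19, 0.20, 0.22, 0.18, 0.21, 0.20]
detector = BaselineDetector(k=4.0)
detector.fit(healthy_rms)
for reading in (0.23, 0.35):
    print(reading, "anomaly" if detector.is_anomaly(reading) else "normal")
```

Refitting the baseline as more healthy data arrives is one simple way to let the model of the machine improve over time; a predictive model would additionally need labeled examples of how specific damage develops.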

Conclusion

Ideally, through corresponding local data analysis, embedded AI algorithms should be able to decide which sensors are relevant for the respective application and which algorithm is the best one for it. This means smart scalability of the platform. At present, it is still the subject matter expert who must find the best algorithm for the respective application, even though the AI algorithms used by us can already be scaled with minimal implementation effort for various applications for machine condition monitoring.

Embedded AI should also assess the quality of the data and, if it is inadequate, find and apply the optimal settings for the sensors and the entire signal processing. If several different sensor modalities are used for the fusion, the disadvantages of certain sensors and methods can be compensated for by using an AI algorithm. Through this, data quality and system reliability are increased. If the AI algorithm classifies a sensor as irrelevant or only marginally relevant to the respective application, its data flow can be throttled accordingly.

The open COG platform from ADI contains a freely available software development kit and numerous example projects for hardware and software for accelerating prototype creation, facilitating development, and realizing original ideas. Through the multisensor data fusion (EV-GEAR-MEMS1Z) and embedded AI (EV-COG-AD4050LZ), a robust and reliable wireless meshed network (SMARTMESH1Z) of smart sensors can be created.

About the Authors

Dzianis Lukashevich is director of platforms and solutions at Analog Devices. His focus is on megatrends, emerging technologies, complete solutions, and new business models that shape the future of industries and transform ADI business in the broad market. Dzianis Lukashevich joined ADI Sales and Marketing in Munich, Germany in 2012. He received his Ph.D. in electrical engineering from Munich University of Technology in 2005 and M.B.A. from Warwick Business School in 2016. He can be reached at dzianis.lukashevich@analog.com.

Felix Sawo received his Master of Science in mechatronics from the Technical University of Ilmenau in 2005 and his Ph.D. in computer science from the Karlsruhe Institute of Technology in 2009. Following his graduation, he worked as a scientist at Fraunhofer Institute of Optronics, System Technologies, and Image Exploitation (IOSB) and developed algorithms and systems for machine diagnosis. Since 2011 he has been working as CEO at Knowtion, which specializes in algorithm development for sensor fusion and automatic data analysis. He can be reached at felix.sawo@knowtion.de.

The Value of Engineering in Critical Times

Coronavirus has confronted us with innumerable challenges. Some of them were already on the plate and are now urgent objectives to achieve. The lack of engineers, for instance, is a reality and weighs more heavily in times of crisis.

Artificial Intelligence, Automation, Covid-19, Industry 4.0

The current health crisis has raised many questions in the public and political spheres about how to deal with it and which priorities to set. In the Western world, we have learned a hard but important lesson: we are still not doing enough to build a more sustainable world. We realized that our investments in innovation, in different business and health models – based on digitalization, smart working, automation, AI, and telehealth – are insufficient. And, more importantly, we have understood that to make progress possible, we need to improve and consolidate people’s trust in technology, promoting sustainability, privacy protection, and transparency.

Technology is neither good nor bad; it depends on how we use it. What is certain is that to have good technology, we need good scientists and engineers. The quantity and quality of talent, and how evenly it is spread across the world, can have a big impact on the future of humanity. The World Federation of Engineering Organizations (WFEO) has pointed out that the current shortage of skilled engineers puts at risk the ability to achieve the Sustainable Development Goals (SDGs) set in the 2030 Agenda by the United Nations General Assembly in 2015.

“We [as WFEO] recognize the role of engineering in implementing the SDGs, but governments and the public have not yet acknowledged this crucial importance. We also realize that there are gaps between the current world engineering capacity and the requirements of the SDGs,” stated Prof. Gong Ke, President of the World Federation of Engineering Organizations.

The lack of engineers poses serious problems

According to ‘The State of Engineering 2019 Report’ by Engineering UK, the annual demand for skilled engineers and technicians in the UK is around 124,000, alongside a requirement for 79,000 related roles with transversal skills. The ability to meet the yearly demand for core engineering roles with high skills (level 3+) is declining. At the same time, fast-growing economies in Africa, Asia, and Latin America have an expanding demand for engineers and engineering services to build new infrastructure.

The shortage of engineers in Africa is alarming, considering that the continent needs at least 2.5 million new engineers to meet its sustainable development goals, as Martin Manuhwa, president of the Federation of African Engineering Organizations (FAEO), declared. This shortage poses serious problems, since engineering is crucial to supporting access to clean water and sanitation, affordable and clean energy, resilient infrastructure, economic growth and decent work, and other basic human needs, as Prof. Gong Ke explained.

The WFEO proposed creating a “World Engineering Day for Sustainable Development” (WED) to increase political and public recognition of the centrality of engineering and to accelerate the implementation of inclusive, people-centered, and sustainable development. In November 2019, the Unesco General Conference unanimously proclaimed 4 March of every year “World Engineering Day for Sustainable Development”.

Engineering Vs. Covid-19

The first WED was scheduled to be held at Unesco headquarters in Paris on 4 March, but the event was cancelled. The WFEO immediately published a statement titled “Stepping up to the challenge of coronavirus and other global threats” to mobilize engineers worldwide in the fight against Covid-19 and encourage them to put this urgent task at the very top of their agenda.

In particular, the statement highlighted that engineering could make a significant contribution to creating special infrastructure for quarantine and treatment, tools for the rapid screening and diagnosis of patients based on AI and computer vision, and effective protective equipment for frontline workers. Improving data analysis and big data systems was considered extremely important to refine monitoring techniques and control the spread of the virus, while ensuring the timely and reliable recording, storage, and dissemination of data. According to the WFEO, this is also part of engineering’s mission and responsibility in critical times.

Mutual efforts to drive the change

Building a set of regulations and framework conditions is central to the development of flourishing and sustainable engineering. Engineers alone cannot drive change if the context is not favorable. Europe has positioned itself as the world’s main source of tech regulation and innovation and has attracted many talented scientists and engineers. However, more international cooperation and the promotion of global governance are needed, especially in dealing with the tough challenges raised by not-yet-mastered technologies such as AI and Big Data, including guaranteeing privacy, safety, fairness, and inclusivity. Cybersecurity was recently ranked, alongside environmental issues, among the most daunting challenges for engineers in the second Global Engineer Survey conducted by the engineering outreach organization DiscoverE.

“It’s not an exaggeration to say that without a secure cyber environment, the world will grind to a halt,” said Kathy Renzetti, Executive Director at DiscoverE. After all, protecting sensitive data, personal information, intellectual property, and governmental and industrial information systems is possible only if we can rely on secure networks, which are also a precondition for engineers’ work to be effective. Now more than ever, engineers, scientists, civil society, and governments must join forces to maximize the benefits of new technologies for society as a whole.

Sara Ibrahim

Defining a “Roadmap of Promising Initiatives” for the Digital Transformation

Bonfiglioli embraced the digital transformation to be closer to its customers in the transition to Industry 4.0. Its roadmap of initiatives and projects is brought together in the Digital Transformation Dashboard, presented at last year’s SPS

Industry 4.0, Motors & Drives

IEN Europe: At SPS 2019, Bonfiglioli showed how the company embraced the digital transformation. Why did you decide to go in this direction?

G. Khawam: Bonfiglioli’s digital journey has led to many initiatives, activities and projects, both for the digitalization of internal processes and for market needs.

As an international electromechanical company operating in many sectors and countries, it is essential for us to accompany our customers through their digital transformation. Our digital transformation has three main drivers:

- Increasing the efficiency of internal processes

- Creating a unique customer experience whenever customers interact with us

- Creating additional value through the digital functionalities of our products and services, and developing new value propositions and business models

IEN Europe: How does your digital transformation dashboard work?

G. Khawam: After a series of inspirational tours, visits and consultancies, Bonfiglioli defined a list of activities to complete for its digital transformation.

We reviewed and prioritized these activities along two key dimensions: Alignment with Future Ambitions and Stage of Development. This led us to define a roadmap of promising initiatives. Thanks to the adoption of the Agile methodology, we were able to get some of those initiatives up and running quickly.

Customers also take part in the initiatives throughout this journey. The roadmap, outcomes and deliverables of all projects and initiatives are part of our Digital Transformation Dashboard, which is reviewed monthly by the “Business Innovation Committee” in the presence of the company CEO and the main stakeholders.

IEN Europe: How can gearboxes, drives and inverters contribute to the digital transformation?

G. Khawam: Gearboxes, drives and inverters are part of machines. Their operation is critical to the machine’s uptime, maintenance costs, performance, energy consumption and safety. Machine users increasingly look at KPIs such as Total Cost of Ownership and Overall Equipment Effectiveness, so it is becoming ever more important to optimize all those KPIs continuously. Optimizing them therefore becomes a competitive cost advantage by reducing OPEX. Moreover, machine safety is paramount, and the working environment needs to become more user-friendly.
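OEE, one of the KPIs mentioned in the answer above, is conventionally calculated as the product of three ratios: availability (run time over planned time), performance (ideal cycle time times units produced, over run time) and quality (good units over total units). The minimal Python sketch below illustrates that textbook definition; the field names and shift figures are hypothetical and do not describe Bonfiglioli's products or software.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    """Hypothetical production figures for one shift (times in minutes)."""
    planned_time: float       # scheduled production time
    run_time: float           # time the machine was actually running
    ideal_cycle_time: float   # best-case time to produce one unit
    total_count: int          # all units produced
    good_count: int           # units that passed quality checks


def oee(d: ShiftData) -> float:
    """Overall Equipment Effectiveness = availability x performance x quality."""
    availability = d.run_time / d.planned_time
    performance = (d.ideal_cycle_time * d.total_count) / d.run_time
    quality = d.good_count / d.total_count
    return availability * performance * quality


if __name__ == "__main__":
    shift = ShiftData(planned_time=480, run_time=400, ideal_cycle_time=0.5,
                      total_count=720, good_count=700)
    print(f"OEE = {oee(shift):.1%}")  # prints OEE = 72.9%
```

Because the three factors multiply, losses compound: a machine running at 90 % on each factor reaches an OEE of only about 73 %.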

At Bonfiglioli, we are working on providing machine users with valuable information based on the data and signals generated by the different components of the power transmission system.

This approach will allow users to have the most relevant information to:

- Better plan machine maintenance

- Optimize the throughput of their machines

- Optimize energy consumption, thereby improving efficiency and reducing heat dissipation and energy costs

- Access machine information remotely, from almost anywhere, for faster reaction and better decision-making

IEN Europe: How are AI, IoT and industrial communication impacting the motion control sector?

G. Khawam: The motion control sector can and will benefit greatly from new technologies. On the one hand, current products are expected to be “digitally enhanced” so that additional data can be extracted; on the other, the infrastructure around the products is expected to open communication channels and add computational intelligence to turn the data into meaningful information for users. This will lead to more advanced and innovative services.

Model-based estimation and Artificial Intelligence will be the core technologies for deploying and delivering such innovative services in the most effective and efficient way across all Bonfiglioli products. The IIoT platform also plays a key role in supporting the new service business model. The resulting information will also be valuable for improving the products and maximizing their quality.

IEN Europe: Energy efficiency is still a big topic for Bonfiglioli, and you demonstrated how efficient your motors can be in combination with your drives. What further steps can be expected?

A. Chinello: Together with our BSR synchronous reluctance motor series (IE4 efficiency class), our R&D team has developed and released frequency inverter software that controls this type of motor in the most efficient and high-performing way, resulting in a Power Drive System that meets and exceeds the requirements of the IES2 efficiency classification. This control software is now available in the Active Cube frequency inverter series, and we plan to extend it to other inverter series.
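Why motor and inverter are optimized together can be seen from a first-order approximation: a Power Drive System's overall efficiency is roughly the product of the inverter and motor efficiencies at the operating point, so losses in either stage compound. The Python sketch below shows only that arithmetic with hypothetical efficiency values; it does not reproduce the loss-based IES classification procedure of IEC 61800-9-2, nor any Bonfiglioli data.

```python
def pds_efficiency(eta_inverter: float, eta_motor: float) -> float:
    """Approximate system efficiency: the losses of each stage compound."""
    return eta_inverter * eta_motor


def pds_input_power_kw(shaft_power_kw: float, eta_inverter: float, eta_motor: float) -> float:
    """Electrical power drawn from the supply to deliver a given shaft power."""
    return shaft_power_kw / pds_efficiency(eta_inverter, eta_motor)


if __name__ == "__main__":
    # Hypothetical operating point: 11 kW at the shaft.
    p_in = pds_input_power_kw(11.0, eta_inverter=0.97, eta_motor=0.95)
    print(f"system efficiency {pds_efficiency(0.97, 0.95):.2%}, "
          f"losses {p_in - 11.0:.2f} kW")
```

A single point improvement in either stage lifts the whole system, which is why control software tuned to a specific motor type can move the combined rating.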

More generally, the efficiency level of our industrial motor range is increasing thanks to new series soon to be released to the market.

A further step concerns the full compliance of our frequency inverter series with the new Ecodesign directive. Compliance with the IE2 inverter efficiency class is already a reality, but we aim to go a step further and demonstrate the margins (and additional energy benefits) we can obtain compared with the basic requirements set by the European standards.

Sara Ibrahim

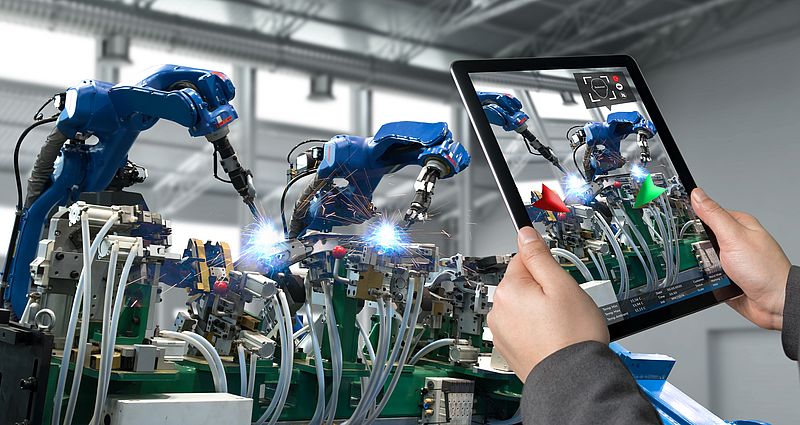

Blockchain and Machine-as-a-Service: A Viable Solution to Tackle the Crisis?

The pandemic has opened companies’ eyes to the urgency of digitalizing their factories. By making concepts like Machine-as-a-Service possible, blockchain technology could be part of the solution

Artificial Intelligence, Automation, Covid-19, Industry 4.0

Every cloud has a silver lining. For many experts, Coronavirus is an opportunity for the industrial market to overcome its fears and commit to the digital transition, finally embracing it without hesitation. The world has been confronted with stark evidence: we were not at all prepared for such an emergency. Even the forward-looking industrial world, shining with its highfalutin acronyms (AI, IoT, AR, VR, ML), was not really prepared for it and is now battening down the hatches.

“Had business moved with more alacrity and determination when it had the opportunity, it would now be in a different place. The virus may now focus organizations’ minds on the need to automate faster in the medium term and will accelerate an investment in factory automation when the global economy eventually rebounds,” said David Bicknell, Principal Analyst in the Thematic Research Team at data and analytics company GlobalData.

Better late than never, but now the time to act has come. Among the plethora of technologies that could foster a quick rebound of the manufacturing industry is blockchain. As an immutable, decentralized database maintained by a network of separate computers within a “democratic” system, blockchain has become an ideal solution for distributing digital information securely.
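The immutability mentioned above comes from chaining: each block stores the hash of its predecessor, so altering any past record breaks the links that follow it. The minimal Python sketch below shows only this tamper-evidence mechanism, using SHA-256 from the standard library; it deliberately leaves out the distributed consensus among separate computers that makes a real blockchain decentralized, and it is not SteamChain's implementation. The record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list, data: dict) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({"index": index, "data": data, "prev": prev,
                  "hash": block_hash(index, data, prev)})


def verify(chain: list) -> bool:
    prev = GENESIS
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev:
            return False  # broken link between blocks
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False  # content changed after the block was written
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain: list = []
    append_block(chain, {"machine": "erector-01", "cases": 1200})
    append_block(chain, {"machine": "erector-01", "cases": 1350})
    print(verify(chain))             # True
    chain[0]["data"]["cases"] = 900  # tamper with an old record
    print(verify(chain))             # False
```

On its own, a hash chain only makes tampering detectable; it is the agreement among many independent copies of the chain that prevents someone from simply recomputing every hash.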

The technology can be used not only to create digital currencies such as bitcoin but also in the industrial space to offer interesting services. Features such as decentralization, immutability, and transparency make it suitable for tackling companies’ major problems.

The role of blockchain technology and Machine-as-a-Service

In times of crisis, problem-solving and responsiveness are golden assets. SteamChain, a startup company located in the US, has come up with a Machine-as-a-Service platform – called Secure Transaction Engine for Automated Machinery (STEAM) – that leverages the power of secure blockchain technology to create business value for OEMs and their end-users, using existing machine data.

The US company Pearson Packaging Systems implemented a Machine-as-a-Service model based on SteamChain’s system that allows customers to “rent” Pearson’s machines (the company produces erectors, sealers, and compact palletizers), paying only an agreed price per case without the upfront equipment investment. “MaaS is an ideal option for companies who prefer to pay for automation incrementally, or who have an immediate need for end-of-line machinery but don’t have approved funding. By eliminating the upfront expense of machinery, manufacturers can devote their resources to other projects that improve operations or differentiate their businesses – like new product development,” declared Pearson President and CEO, Michael Senske.
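Under a pay-per-case model like the one described above, the customer's bill follows the case count the machine reports rather than an upfront purchase price. The Python sketch below shows that arithmetic with hypothetical prices and counts, using Decimal to avoid floating-point rounding on money. The simple break-even figure is an assumption-laden illustration: it ignores financing, maintenance and service terms that a real MaaS contract would include.

```python
import math
from decimal import Decimal


def invoice(daily_case_counts: list[int], price_per_case: Decimal) -> Decimal:
    """Amount due for a billing period: cases produced x agreed price per case."""
    return sum(daily_case_counts) * price_per_case


def breakeven_cases(purchase_price: Decimal, price_per_case: Decimal) -> int:
    """Number of cases at which pay-per-case spending reaches the purchase price."""
    return math.ceil(purchase_price / price_per_case)


if __name__ == "__main__":
    price = Decimal("0.04")                            # hypothetical price per case
    print(invoice([1200, 1350, 980], price))           # 141.20
    print(breakeven_cases(Decimal("150000"), price))   # 3750000
```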

Thanks to blockchain technology, users can access data on machine output, stored in a secure digital database that cannot be modified, and obtain information on the status of the machine to identify trends and issues and predict maintenance needs. In times of crisis, a solution like this offers the flexibility needed with regard to investments while delivering results that can be used for production optimization and automation. It is a practical example of a ready-to-use, blockchain-based solution that can solve contingent problems without the need for huge investments.

Sara Ibrahim

Camera-based Spindle Control

An AI for machine tool maintenance

Vision & Identification, Artificial Intelligence

Researchers at the Karlsruhe Institute of Technology (KIT) have engineered a system for fully automated monitoring of ball screw drives in machine tools. A camera integrated directly into the nut of the drive generates images that an AI continuously monitors for signs of wear. The aim is to reduce machine downtime.

In mechanical engineering, maintaining machine tools and replacing defective components in good time is an important part of the manufacturing process. In the case of ball screw drives, such as those used in lathes to precisely guide the production of cylindrical components, wear has until now been determined manually.

An intelligent system developed at KIT continuously monitors and evaluates wear on the spindle of ball screws

“Maintenance is therefore associated with installation work, which means the machine comes to a standstill,” says Professor Jürgen Fleischer from the Institute for Production Technology (wbk) at the Karlsruhe Institute of Technology (KIT). “Our approach, on the other hand, integrates an intelligent camera system directly into the drive, which enables a user to continuously monitor the spindle status. If there is a need for action, the system informs the user automatically.”

The new system combines a camera with a light source, attached to the nut of the drive, and an artificial intelligence that evaluates the image data. As the nut moves along the spindle, the camera takes individual pictures of each spindle section, enabling the entire spindle surface to be analyzed.
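The section-by-section coverage described above can be pictured as a simple trigger rule: the nut's position along the spindle determines which section is in front of the camera, and a picture is taken the first time each section is reached. The Python sketch below assumes a hypothetical fixed section length equal to the camera's field of view and a stream of position readings in millimetres; it is an illustration of the idea, not KIT's implementation.

```python
import math


def plan_captures(positions_mm, spindle_length_mm, section_mm):
    """Return (triggers, missing): one capture per newly reached section,
    plus the sections the nut has not yet passed over."""
    n_sections = math.ceil(spindle_length_mm / section_mm)
    imaged = set()
    triggers = []
    for pos in positions_mm:
        if not 0 <= pos < spindle_length_mm:
            continue  # ignore readings outside the threaded length
        section = int(pos // section_mm)
        if section not in imaged:
            imaged.add(section)
            triggers.append((section, pos))  # take a picture at this position
    missing = sorted(set(range(n_sections)) - imaged)
    return triggers, missing


if __name__ == "__main__":
    triggers, missing = plan_captures([0, 5, 12, 18, 25, 31, 45], 50, 10)
    print(triggers)  # [(0, 0), (1, 12), (2, 25), (3, 31), (4, 45)]
    print(missing)   # []
```

Reporting the missing sections lets such a system tell when a full stroke of the nut has covered the whole spindle surface.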

Artificial intelligence for mechanical engineering

Combining image data from ongoing operations with Machine Learning methods enables system users to directly assess the condition of the spindle surface. “We trained our algorithm with thousands of images so that it can now confidently distinguish between spindles with defects and those without,” says Tobias Schlagenhauf, who helped develop the system. “By further evaluating the image data, we can precisely qualify and interpret wear and thus distinguish if discoloration is simply dirt or harmful pitting.” When training the AI, the team took into account all conceivable forms of visible degradation and validated the algorithm’s functionality with new image data that the model had never seen before. The algorithm is suitable for all applications that identify image-based defects on the spindle surface and is transferable to other applications.
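Validating on images the model has never seen means keeping a held-out set apart from training and scoring the classifier only on that set. The Python sketch below shows a reproducible hold-out split and the usual defect-detection metrics (accuracy, precision, recall); the labels and predictions are hypothetical, and the sketch does not reproduce KIT's model, data or validation protocol.

```python
import random


def holdout_split(items, test_fraction=0.2, seed=42):
    """Shuffle reproducibly and reserve a fraction of items the model never trains on."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def defect_metrics(y_true, y_pred, positive="defect"):
    """Accuracy, precision and recall, treating 'defect' as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


if __name__ == "__main__":
    train, test = holdout_split(range(100))
    print(len(train), len(test))  # 80 20
    truth = ["defect", "ok", "defect", "ok", "defect"]
    preds = ["defect", "ok", "ok", "defect", "defect"]
    print(defect_metrics(truth, preds))
```

When several images show the same spindle, splitting by spindle rather than by image is the safer choice, so that no surface appears in both the training and the test set.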

Rugged COM Express Type 6 Module

Comes with Intel Xeon E3-1500 v6 for HPEC

Industry 4.0, Artificial Intelligence

The new CPU-162-24 from EuroTech is a fanless COM Express Basic (125 x 95 mm) Type 6 module based on the Intel Xeon E3-1505L v6 or E3-1505M v6 processor. It supports up to two DDR4 SO-DIMMs with ECC, for a total capacity of up to 32 GB, and features an integrated GPU that provides hardware acceleration and up to three simultaneous video outputs.

Ideal for high performance graphics and video applications

IoT, industrial, transportation and defense applications benefit from the low power consumption of this high-end CPU, which delivers fanless computing across an extended operating temperature range (-40°C to +85°C). With a long life cycle, optional conformal coating and a soldered-down CPU, the CPU-162-24 is a reliable building block for fanless edge devices and edge servers that need to perform consistently in harsh environmental conditions.

ROS 2 Robot Controller

Developed for scalable development of AI-based robotic applications

Artificial Intelligence

ADLINK Technology has launched the ROScube-I with Intel, a real-time ROS 2 robot controller for advanced robotic applications. The ADLINK ROScube-I Series is a ROS 2-enabled robotic controller based on Intel® Xeon® E, 9th Gen Intel® Core™ i7/i3 and 8th Gen Intel® Core™ i5 processors, and features extensive I/O connectivity supporting a broad choice of sensors and actuators to meet the needs of a wide range of robotic applications.

Includes a real-time middleware for communication between software components and devices

The ROScube-I supports an extension box for convenient functional and performance expansion with Intel® VPU cards and the Intel® Distribution of OpenVINO™ toolkit for computing AI algorithms and inference. Robotic systems based on the ROScube-I are supported by ADLINK’s Neuron SDK, a platform specifically designed for professional robotic applications such as autonomous mobile robots (AMR).

Visual Inspection with Plug & Inspect™ Technology

Plug & play vision inspection

Vision & Identification, Artificial Intelligence

Inspekto, a company specializing in machine vision, has developed Plug & Inspect™, the first integration-free technology for visual quality inspection. It eliminates the costly integration and custom development required in traditional machine vision projects in industrial plants. Plug & Inspect™ runs three AI engines working in tandem, merging computer vision, deep learning and real-time software optimization technologies to achieve true plug-and-play vision inspection.

Self-learning, self-setting and self-adapting

INSPEKTO S70 is a standalone product for visual inspection that is self-learning, self-setting and self-adapting. Out of the box and ready to use, it can be set up in 30 to 45 minutes using only 20 good sample items and no defective ones. The S70 can address the whole range of mid-level to complex vision inspection tasks on any product.
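Setting up with good parts only, as described above, is what is generally called a one-class or anomaly detection problem: the system models what a normal item looks like and flags anything that deviates too far. The Python sketch below uses a deliberately simple per-feature z-score rule on hypothetical feature vectors; how Inspekto's three AI engines actually work is not described here, so this only illustrates the general idea of learning from 20 good samples and no defective ones.

```python
import statistics


def fit_good_samples(samples):
    """Per-feature mean and standard deviation learned from defect-free items only."""
    columns = list(zip(*samples))
    means = [statistics.fmean(c) for c in columns]
    # Guard against zero spread so the z-score below never divides by zero.
    stds = [statistics.stdev(c) or 1e-9 for c in columns]
    return means, stds


def is_anomalous(features, model, k=4.0):
    """Flag an item if any feature lies more than k standard deviations from normal."""
    means, stds = model
    return any(abs(x - m) / s > k for x, m, s in zip(features, means, stds))


if __name__ == "__main__":
    # 20 hypothetical good samples: (mean brightness, edge density)
    good = [(100 + i % 5, 0.30 + 0.01 * (i % 3)) for i in range(20)]
    model = fit_good_samples(good)
    print(is_anomalous((102, 0.31), model))  # False
    print(is_anomalous((130, 0.31), model))  # True
```

The threshold k trades missed defects against false rejects; a commercial system would use far richer image features than two hand-picked numbers.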

The iNA series from Axiomtek offers reliable industrial control system security and also comes with a redundant, isolated power design to ensure non-stop operation of systems running on legacy technologies. Within the iNA series, Axiomtek’s AI edge server is designed to optimize big data processing in a broad spectrum of environments.

Protection from malicious cyber-attacks

The iNA600 provides an L2 managed switch and AI engine (GPU and CPU) support for machine vision to enhance interoperability in the intelligent factory. It is equipped with a security engine to protect the user's business from malicious cyber-attacks, and its ample storage uses RAID to keep confidential and sensitive data safely preserved at all times.

Compact Embedded PC with AI Computing Power

Equipped with Dual Intel Movidius™ Myriad™ X VPU for AI Deep Learning

Artificial Intelligence

ICP Deutschland provides the ultra-compact ITG-100AI, an inference system prepared for use with deep neural network (DNN) topologies. The two Intel® Movidius™ Myriad™ X VPUs in the ITG-100AI deliver high inference performance per watt, with 16 SHAVE cores each for AI calculations and native FP16 support. On the software side, the ITG-100AI supports Intel®'s open-source toolkit "Open Visual Inference Neural Network Optimization" (OpenVINO™), delivering end-to-end acceleration for a broad range of neural networks. OpenVINO™ enables pre-trained CNN (convolutional neural network) models to be deployed at the edge with little effort.
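FP16 (IEEE 754 half precision), mentioned above, stores each value in 16 bits instead of 32, halving memory and bandwidth for weights and activations at the cost of precision. The Python sketch below rounds values through half precision using the standard library's struct format code "e" to show how large that rounding is; it only illustrates the number format and is not part of the OpenVINO™ toolkit.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    for w in (0.1, 1.0, 3.14159, 1000.5):
        h = to_fp16(w)
        print(f"{w:>10} -> {h:<18} relative error {abs(h - w) / w:.2e}")
```

For normal values the relative rounding error stays below 2^-11 (about 0.05 %), which most trained networks tolerate; the largest finite FP16 value, however, is 65,504, so very large activations need care when a model is converted.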

Ideal for deep learning inference, object detection and face recognition

Trained models from Caffe or TensorFlow can be implemented quickly and easily. The ITG-100AI is also equipped with an Intel® Atom™ x5-E3930 Apollo Lake SoC and 8 GB of pre-installed DDR3L memory. A 128 GB SATA DOM provides space for the Linux or Windows 10 operating system, the OpenVINO™ toolkit (available free of charge) and the trained model to be used. For connecting peripheral devices, the palm-sized embedded PC has two GbE LAN, two RS-232/422/485 and two USB 3.0 interfaces. The ITG-100AI can be operated in a temperature range from -20°C to +50°C. Its compact size and mounting options offer additional flexibility for realizing AI projects.

Created by Exoligent, this on-board system equipped with a VAM FIP module forwards all the frames received from the FIP bus to an Ethernet UDP network. The system is robust and suitable for railway applications. Equipment status data such as temperature or memory load is also sent in Ethernet frames. The frames received on the remote equipment are decoded with the FIPWatcher bus spy or a dissector.

Hardware side: the TBOX - FIP is equipped with a motherboard based on a Celeron or (optionally) a Core i7 processor and a VAM FIPWatcher card

The system is operated via a web server. It is unidirectional and transparent on the FIP network, and it sends the frames automatically after power-up. Two other VAM FIP modules are available: copper master/slave or fiber-optic master/slave, with a 24-110 V power supply.
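The one-way forwarding described above amounts to wrapping each captured bus frame in a UDP datagram and sending it without ever reading from the network. The Python sketch below uses a hypothetical 14-byte header (sequence number, capture timestamp, payload length) so that a receiver can detect lost or truncated datagrams; Exoligent's actual frame format and the format expected by the FIPWatcher dissector are not described in the source and will differ.

```python
import socket
import struct
import time
from typing import Optional

# Hypothetical header: sequence number (uint32), capture time (float64 s), payload length (uint16).
HEADER = struct.Struct("!IdH")


def encapsulate(seq: int, frame: bytes, timestamp: Optional[float] = None) -> bytes:
    """Prefix a raw bus frame with the header; the sequence number wraps at 2**32."""
    if timestamp is None:
        timestamp = time.time()
    return HEADER.pack(seq & 0xFFFFFFFF, timestamp, len(frame)) + frame


def decapsulate(datagram: bytes) -> tuple[int, float, bytes]:
    """Split a received datagram back into (sequence number, timestamp, frame)."""
    seq, timestamp, length = HEADER.unpack_from(datagram)
    frame = datagram[HEADER.size:HEADER.size + length]
    if len(frame) != length:
        raise ValueError("truncated datagram")
    return seq, timestamp, frame


def forward(frames, address: tuple[str, int]) -> None:
    """Send each frame once; the socket is never read, so traffic flows one way only."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for seq, frame in enumerate(frames):
            sock.sendto(encapsulate(seq, frame), address)
```

For example, forward(frames, ("192.0.2.10", 5005)) would stream frames to a hypothetical analysis PC; gaps in the sequence numbers on the receiving side reveal datagrams lost on the network.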

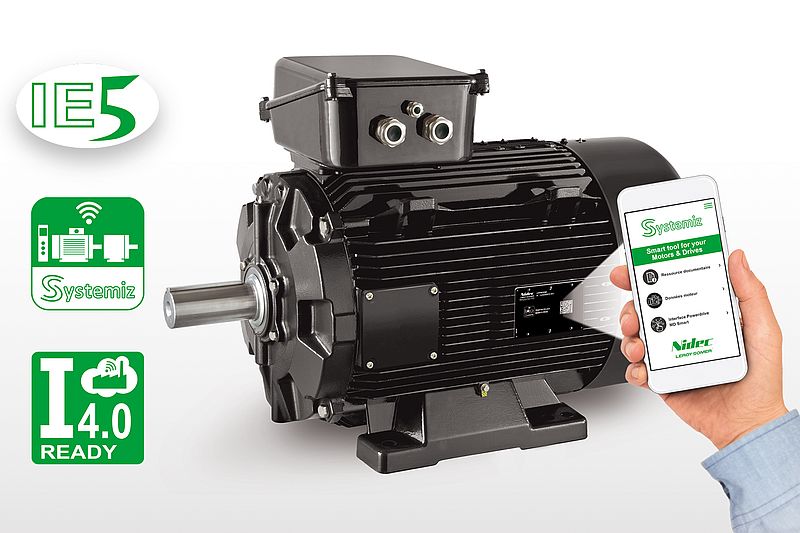

Permanent Magnet Synchronous Reluctance Motor

Connected to Systemiz application

Industry 4.0, Motors & Drives

Dyneo+ from Nidec Leroy-Somer is a new super-premium-efficiency permanent magnet synchronous reluctance motor designed for variable speed. It delivers high-end performance while being as reliable and easy to set up as an induction motor. The range is supported by a complete set of variable speed drives that optimize motor performance for operation with or without position feedback.

Environmentally friendly

Dyneo+ motors are connected to the jointly developed Systemiz application, which enhances the user experience with a variety of digitalized services, including simple and interactive commissioning. Dyneo+ enables substantial energy savings and reduced emissions, and offers one of the lowest TCOs on the market, a very short payback period and low maintenance. It is a cost-effective and efficient drive solution for refrigeration, pumps, compressors, fans, grinders and extruders.
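The energy savings and short payback claimed for a higher-efficiency motor can be estimated with simple arithmetic: the input power saved at a given shaft load, multiplied by operating hours and energy price, set against the price premium. The Python sketch below does that with hypothetical values for efficiency, load, hours, tariff and premium; it assumes a constant load and is not based on Dyneo+ data.

```python
def annual_savings_eur(shaft_kw, hours_per_year, eur_per_kwh, eta_reference, eta_new):
    """Energy cost saved per year by the more efficient drive at a constant load."""
    saved_kw = shaft_kw / eta_reference - shaft_kw / eta_new
    return saved_kw * hours_per_year * eur_per_kwh


def simple_payback_years(price_premium_eur, savings_eur_per_year):
    """Years until the extra purchase cost is recovered (no discounting)."""
    return price_premium_eur / savings_eur_per_year


if __name__ == "__main__":
    # Hypothetical: 15 kW load, 6000 h/year, 0.15 EUR/kWh, efficiency 90 % vs 95 %.
    savings = annual_savings_eur(15, 6000, 0.15, eta_reference=0.90, eta_new=0.95)
    print(f"{savings:.0f} EUR/year, payback {simple_payback_years(1500, savings):.1f} years")
    # prints 789 EUR/year, payback 1.9 years
```

Because savings scale with operating hours, the same efficiency gain pays back far faster on a continuously running pump or fan than on an intermittently used machine.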

Easy-handling Cobot

Suitable for safe and precise support for humans

Automation, Artificial Intelligence

Mitsubishi Electric’s new collaborative robot, the MELFA ASSISTA, has been developed to work alongside human operators without the need for guards or safety fences, while meeting new requirements for adequate distancing of workers in manufacturing sites. The cobot offers maximum safety and durability combined with ease of use and programming, while maintaining very high positional repeatability.

The cobot meets the needs of both standard industrial and environmentally sensitive applications – for example, it can be supplied with certified NSF H1 grease (National Sanitation Foundation guidelines) for applications such as the food and beverage sector. It can perform complex and delicate assembly tasks, precise work holding or repetitive pick and place operations with the highest levels of consistency and reliability while responding flexibly to rapidly changing business environments and social needs. Application examples include working alongside human operators in automotive assembly tasks or performing packaging operations on production lines.

Set-up of the cobot is simplified by a direct teach function: the user holds the arm and moves it to each required position, and the position is then saved by pressing a button on the keypad built into the cobot arm. The process is both time-saving and intuitive for operators. It complements the visual programming software used for more complex operations: the RT Visualbox package allows both drag-and-drop motion functions and individual adjustments to each movement. This saves the cost of additional robot programming, as operators can alter set-ups without specialised robot expertise.

The new cobot meets all relevant safety requirements as defined under ISO 10218-1 and ISO/TS 15066

The MELFA ASSISTA cobot has an exceptionally high repeatability of ±0.03 mm at a rated payload of 5 kg and a reach radius of 910 mm. This improves product quality and correspondingly reduces the time required for quality control, ensuring higher overall quality standards. It extends the range of possible applications to include life sciences, precision assembly, high-quality packaging and component transfer processes.

A further benefit of the MELFA ASSISTA is the ability to switch between collaborative mode, where it operates at the slower speeds typical of a cobot, and a higher-speed mode for use in a more industrial ‘cooperative production’ environment. This ensures maximum application flexibility. Fault diagnostics and operational state are also shown by a six-colour LED ring mounted around the robot’s forearm, which is always visible.

A

ADLink Technology GmbH 10
Analog Devices GmbH 5
AnotherBrain 2
Axiomtek Co. Ltd. (UK) 11

B

Bonfiglioli Riduttori Spa 7

E

Ericsson AB 4
EuroTech SpA 3, 10
Exoligent 12

I

ICP Deutschland GmbH 11

K

Karlsruhe Institute of Technology - Institute for Anthropomatics and Robotics (IAR) 9

M

Mitsubishi Electric Europe B.V 13

N

Nidec Leroy Somer 12

TIM Global Media BV

Rue de Stalle 140 - 3ième étage - 1180 Uccle - Belgium

o.erenberk@tim-europe.com - www.ien.eu

- Editorial Director: Orhan Erenberk, o.erenberk@tim-europe.com

- Editor: Kay Petermann, k.Petermann@tim-europe.com

- Editorial Support / Energy Efficiency: Flavio Steinbach, f.steinbach@tim-europe.com

- Associate Publisher: Marco Marangoni, m.marangoni@tim-europe.com

- Production & Order Administration: Francesca Lorini, f.lorini@tim-europe.com

- Website & Newsletter: Marco Prinari, m.prinari@tim-europe.com

- Marketing Manager: Marco Prinari, m.prinari@tim-europe.com

- President: Orhan Erenberk, o.erenberk@tim-europe.com

Advertising Sales

Tel: +41 41 850 44 24

Tel: +32-(0)11-224397

Fax: +32-(0)11-224397

Tel: +33 1 842 00 300

Tel: +49-(0)9771-1779007

Tel: +39-02-7030 0088

Turkey

Tel: +90 (0) 212 366 02 76

Tel: +44 (0)79 70 61 29 95

John Murphy

Tel: +1 616 682 4790

Fax: +1 616 682 4791

Incom Co. Ltd

Tel: +81-(0)3-3260-7871

Fax: +81-(0)3-3260-7833

Tel: +39(0)2-7030631